William A. Gale

AT&T Bell Labs

Tagging French Without Lexical Probabilities -- Combining Linguistic Knowledge And Statistical Learning

Oct 10, 1997

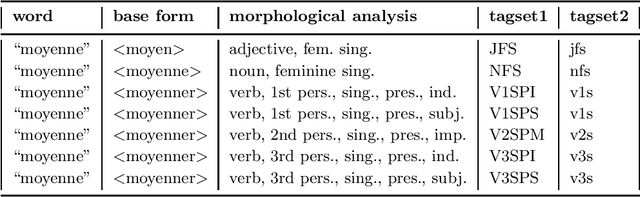

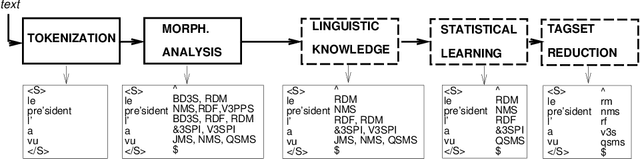

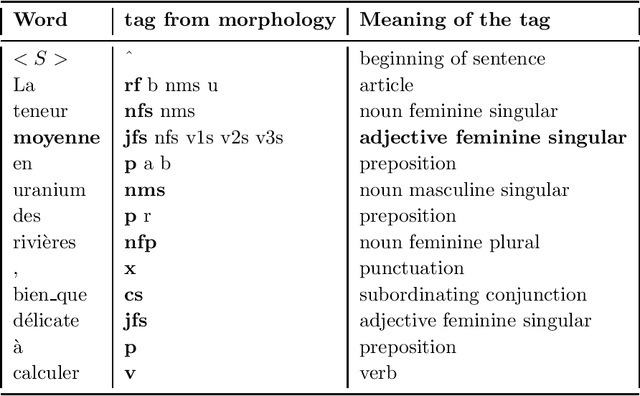

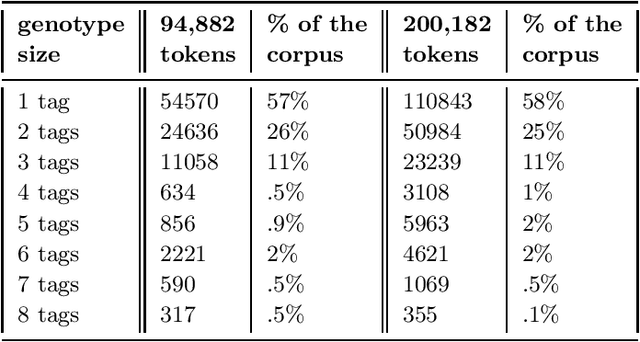

Abstract: This paper explores morpho-syntactic ambiguities for French to develop a strategy for part-of-speech disambiguation that a) reflects the complexity of French as an inflected language, b) optimizes the estimation of probabilities, and c) allows the user flexibility in choosing a tagset. The problem in extracting lexical probabilities from a limited training corpus is that the statistical model may not necessarily represent the use of a particular word in a particular context. In a highly morphologically inflected language, this problem is particularly serious since a word can be tagged with a large number of parts of speech. Due to the lack of sufficient training data, we argue against estimating lexical probabilities to disambiguate parts of speech in unrestricted texts. Instead, we use the strength of contextual probabilities along with a feature we call "genotype", a set of tags associated with a word. Using this knowledge, we have built a part-of-speech tagger that combines linguistic and statistical approaches: contextual information is disambiguated by linguistic rules, and n-gram probabilities on parts of speech only are estimated in order to disambiguate the remaining ambiguous tags.
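As an illustration of the idea in this abstract, the following is a minimal sketch of tagging without lexical probabilities. It assumes each word's genotype is simply the set of tags it can take, and it picks the tag sequence that maximizes tag-bigram probabilities alone. The lexicon, the tag names, the probability values, and the names `GENOTYPES`, `BIGRAM`, `FLOOR`, and `tag` are all hypothetical, and the paper's linguistic-rule stage is not modeled here.

```python
import math

# Hypothetical toy lexicon: each word maps to its genotype,
# i.e. the set of tags the word can take.
GENOTYPES = {
    "il": ("PRO",),
    "la": ("DET", "PRO"),
    "porte": ("NOUN", "VERB"),
}

# Hypothetical tag-bigram probabilities P(tag | previous tag).
# "<s>" marks the start of the sentence. No per-word (lexical)
# probabilities are used anywhere.
BIGRAM = {
    ("<s>", "DET"): 0.6,
    ("<s>", "PRO"): 0.3,
    ("DET", "NOUN"): 0.8,
    ("DET", "VERB"): 0.01,
    ("PRO", "VERB"): 0.7,
    ("PRO", "PRO"): 0.3,
    ("PRO", "DET"): 0.05,
}
FLOOR = 1e-6  # assumed probability for unseen tag bigrams


def tag(words):
    """Viterbi search over tag bigrams, restricted to each word's genotype."""
    # best[t] = (log-probability, tag sequence) of the best path ending in t
    best = {"<s>": (0.0, [])}
    for word in words:
        nxt = {}
        for t in GENOTYPES[word]:
            score, path = max(
                (s + math.log(BIGRAM.get((prev, t), FLOOR)), p)
                for prev, (s, p) in best.items()
            )
            nxt[t] = (score, path + [t])
        best = nxt
    return max(best.values())[1]


print(tag(["la", "porte"]))        # ['DET', 'NOUN']
print(tag(["il", "la", "porte"]))  # ['PRO', 'PRO', 'VERB']
```

In this toy setup the same ambiguous words "la" and "porte" receive different tags depending only on the surrounding tag context, which is the effect the abstract attributes to contextual probabilities.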

A Sequential Algorithm for Training Text Classifiers

Jul 25, 1994

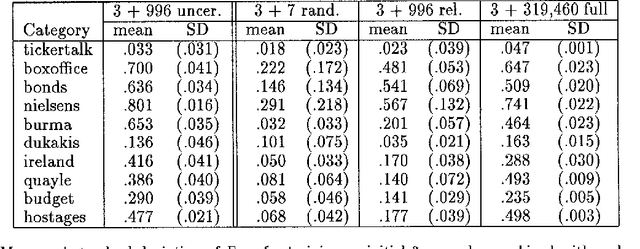

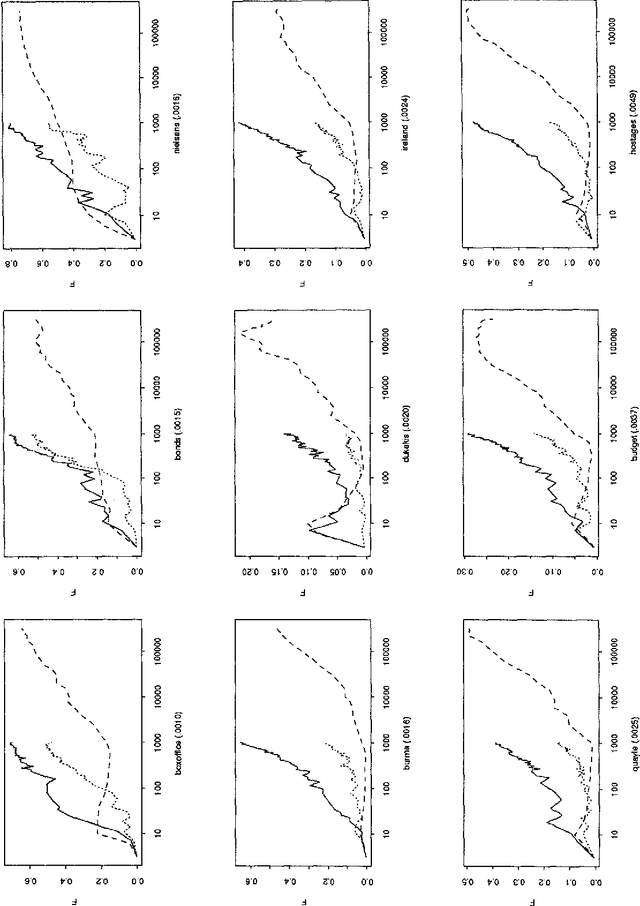

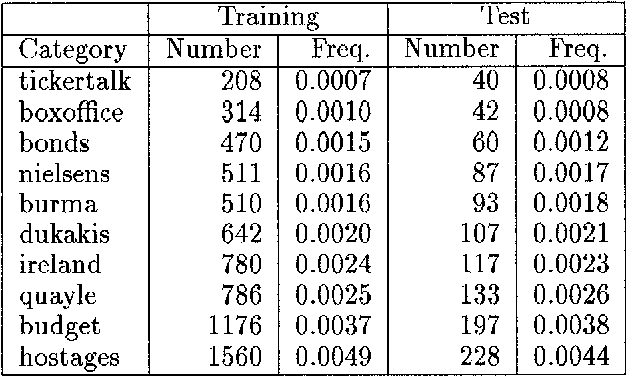

Abstract: The ability to cheaply train text classifiers is critical to their use in information retrieval, content analysis, natural language processing, and other tasks involving data that is partly or fully textual. An algorithm for sequential sampling during machine learning of statistical classifiers was developed and tested on a newswire text categorization task. This method, which we call uncertainty sampling, reduced by as much as 500-fold the amount of training data that would have to be manually classified to achieve a given level of effectiveness.
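The selection step behind uncertainty sampling can be sketched as follows, assuming a binary classifier that outputs a posterior probability for each unlabeled document. The function name `select_most_uncertain` and the example values are hypothetical. This shows only the general idea of sending the least certain examples for manual labeling, not the paper's exact procedure.

```python
def select_most_uncertain(posteriors, batch_size):
    """Return indices of the unlabeled examples whose predicted
    probability of class membership is closest to 0.5."""
    ranked = sorted(
        range(len(posteriors)),
        key=lambda i: abs(posteriors[i] - 0.5),
    )
    return ranked[:batch_size]


# Hypothetical classifier outputs P(relevant | document) for five documents.
posteriors = [0.02, 0.48, 0.91, 0.55, 0.30]
print(select_most_uncertain(posteriors, 2))  # [1, 3]
```

In a full loop, the selected documents would be labeled by a person and added to the training set, the classifier would be retrained, and the selection would be repeated on the remaining unlabeled pool.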
