Wesley Joon-Wie Tann

Mitigating Adversarial Attacks by Distributing Different Copies to Different Users

Nov 30, 2021

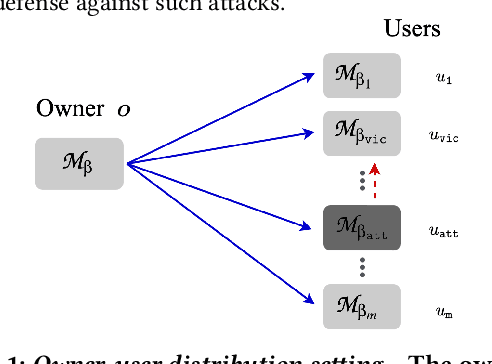

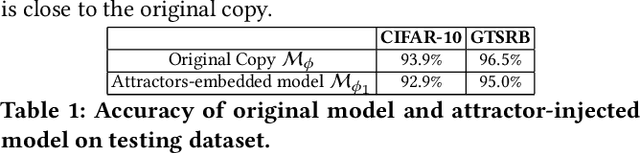

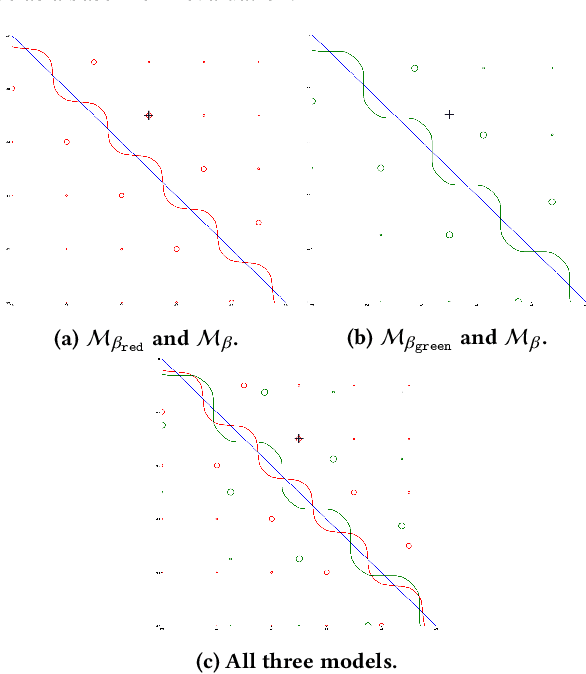

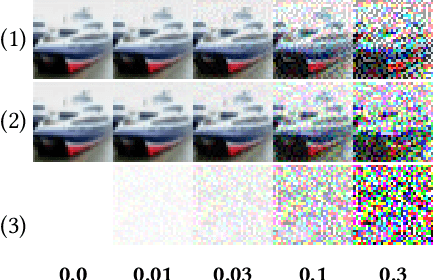

Abstract: Machine learning models are vulnerable to adversarial attacks. In this paper, we consider the scenario where a model is to be distributed to multiple users, among which a malicious user attempts to attack another user. The malicious user probes its copy of the model to search for adversarial samples and then presents the found samples to the victim's model in order to replicate the attack. We point out that by distributing different copies of the model to different users, we can mitigate the attack such that adversarial samples found on one copy would not work on another copy. We first observe that training a model with different randomness indeed mitigates such replication to a certain degree. However, there is no guarantee, and retraining is computationally expensive. Next, we propose a flexible parameter rewriting method that directly modifies the model's parameters. This method does not require additional training and is able to induce different sets of adversarial samples in different copies in a more controllable manner. Experimental studies show that our approach can significantly mitigate the attacks while retaining high classification accuracy. From this study, we believe that there are many further directions worth exploring.

Filtering DDoS Attacks from Unlabeled Network Traffic Data Using Online Deep Learning

Dec 12, 2020

Abstract: DDoS attacks are simple, effective, and still pose a significant threat even after more than two decades. Given the recent success in machine learning, it is interesting to investigate how we can leverage deep learning to filter out application layer attack requests. There are challenges in adopting deep learning solutions due to the ever-changing profiles, the lack of labeled data, and constraints in the online setting. Offline unsupervised learning methods can sidestep these hurdles by learning an anomaly detector $N$ from the normal-day traffic ${\mathcal N}$. However, anomaly detectors do not exploit information acquired during attacks, and their performance is typically unsatisfactory. In this paper, we propose two frameworks that utilize both the historic traffic ${\mathcal N}$ and the mixture traffic ${\mathcal M}$ obtained during attacks, which consists of unlabeled requests. We also introduce a machine learning optimization problem that aims to sift out the attacks using ${\mathcal N}$ and ${\mathcal M}$. First, our proposed approach, inspired by statistical methods, extends an unsupervised anomaly detector $N$ to solve the problem using estimated conditional probability distributions. We adopt transfer learning to apply $N$ on ${\mathcal N}$ and ${\mathcal M}$ separately and efficiently, combining the results to obtain an online learner. Second, we formulate a specific loss function more suited for deep learning and use iterative training to solve it in the online setting. On publicly available datasets, our online learners achieve a $99.3\%$ improvement in false-positive rates compared to the baseline detection methods. In the offline setting, our approaches are competitive with classifiers trained on labeled data.
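The idea of combining normal-day traffic ${\mathcal N}$ with unlabeled mixture traffic ${\mathcal M}$ can be illustrated with a toy calculation. The sketch below is not the paper's method. It assumes that requests reduce to a single discrete feature, that ${\mathcal M}$ is a mixture of normal and attack traffic, and that the attack fraction `alpha` is known. Under those assumptions, the mixture density satisfies $p_M = (1-\alpha)\,p_N + \alpha\,p_A$, so the probability that a request is an attack can be estimated as $1 - (1-\alpha)\,p_N / p_M$. The function name `attack_posterior` and the sample data are hypothetical.

```python
from collections import Counter


def attack_posterior(normal, mixture, alpha, eps=1e-9):
    """Estimate P(attack | value) for each feature value seen in `mixture`.

    Assumptions (illustrative only, not taken from the paper):
      - `normal` holds feature values from attack-free traffic,
      - `mixture` holds unlabeled feature values collected during an attack,
      - `alpha` is the (assumed known) fraction of attack requests in `mixture`.
    """
    n_counts = Counter(normal)
    m_counts = Counter(mixture)
    n_total = len(normal)
    m_total = len(mixture)

    posterior = {}
    for value, m_count in m_counts.items():
        p_m = m_count / m_total                # empirical density in the mixture
        p_n = n_counts.get(value, 0) / n_total  # empirical density in normal traffic
        # Mixture model: p_m = (1 - alpha) * p_n + alpha * p_a,
        # hence P(attack | value) = 1 - (1 - alpha) * p_n / p_m.
        score = 1.0 - (1.0 - alpha) * p_n / max(p_m, eps)
        # Clip, because empirical estimates can fall slightly outside [0, 1].
        posterior[value] = min(1.0, max(0.0, score))
    return posterior


# Hypothetical data: the attack floods "/login", which never appears on a normal day.
normal = ["/home"] * 60 + ["/search"] * 40
mixture = ["/home"] * 30 + ["/search"] * 20 + ["/login"] * 50
scores = attack_posterior(normal, mixture, alpha=0.5)
```

In this toy data, the values that keep their normal-day proportions receive a score near zero, while the value seen only during the attack receives a score of one. A real filter would have to estimate the densities and the attack fraction from high-dimensional request data, which is where a learned detector comes in.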

SHADOWCAST: Controlling Network Properties to Explain Graph Generation

Jun 06, 2020

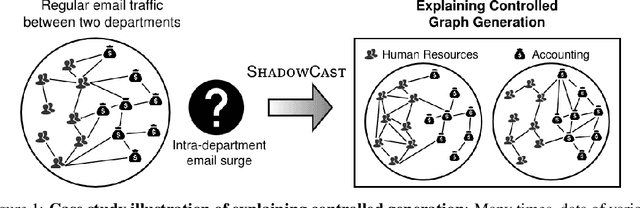

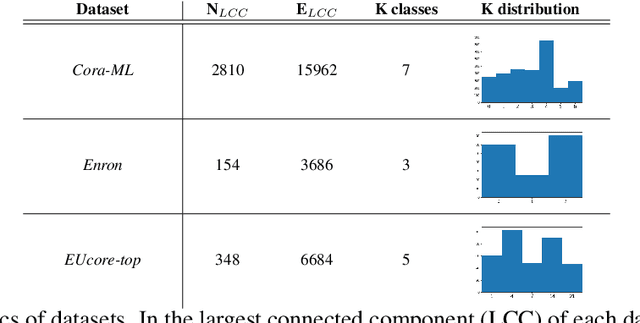

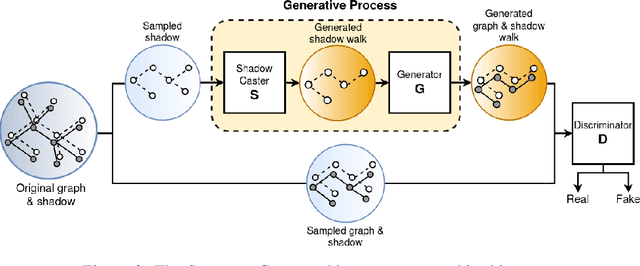

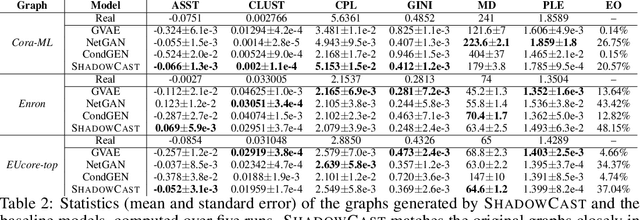

Abstract: We introduce the problem of explaining graph generation, formulated as controlling the generative process to produce graphs of explainable desired structures. By directing this generative process, we can measure and explain the observed outcomes. We propose SHADOWCAST, a controllable generative model capable of mimicking real-world networks and directing the generation, as a novel approach to this problem. The proposed model is based on a conditional generative adversarial network for graph data. We design it to maintain control over generation by managing the conditions. Comprehensive experiments on three real-world network datasets demonstrate our model's competitive performance in the graph generation task. Furthermore, we direct SHADOWCAST to generate explainable graphs of different structures to show its effective controllability. As the first work to pose the problem of explaining generated graphs by controlling the generation, SHADOWCAST paves the way for future research in this exciting area.
