Wenzhi Cao

Deep Neural Networks for Rank-Consistent Ordinal Regression Based On Conditional Probabilities

Nov 17, 2021

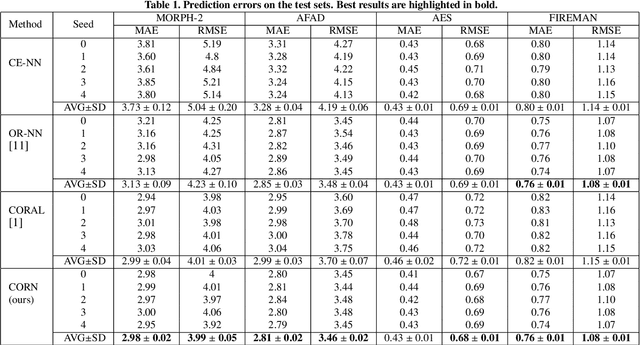

Abstract: In recent times, deep neural networks have achieved outstanding predictive performance on various classification and pattern recognition tasks. However, many real-world prediction problems have ordinal response variables, and this ordering information is ignored by conventional classification losses such as the multi-category cross-entropy. Ordinal regression methods for deep neural networks address this issue. One such method is the CORAL method, which is based on an earlier binary label extension framework and achieves rank consistency among its output layer tasks by imposing a weight-sharing constraint. However, while earlier experiments showed that CORAL's rank consistency is beneficial for performance, the weight-sharing constraint could severely restrict the expressiveness of a deep neural network. In this paper, we propose an alternative method for rank-consistent ordinal regression that does not require a weight-sharing constraint in a neural network's fully connected output layer. We achieve this rank consistency through a novel training scheme that uses conditional training sets to obtain the unconditional rank probabilities by applying the chain rule for conditional probability distributions. Experiments on various datasets demonstrate that the proposed method effectively utilizes the ordinal target information, and that removing the weight-sharing restriction substantially improves performance compared with the CORAL reference approach.
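
The chain-rule idea in the abstract can be illustrated with a small sketch. It rests on the following assumptions: labels are integers 0..K-1, binary task k (for k = 0..K-2) estimates P(y > k | y >= k), and plain float logits stand in for the network's K-1 output units. All function names below are hypothetical. Task k is trained only on the conditional subset of examples with y >= k. Multiplying the conditional probabilities gives the unconditional P(y > k). This sketch is not the authors' implementation.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    """Logistic function mapping a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def corn_conditional_subsets(labels: List[int], num_classes: int) -> List[List[Tuple[int, int]]]:
    """Hypothetical helper: build the conditional training set for each binary task.

    Task k keeps only examples with label >= k (i.e. y > k-1) and uses the
    binary target 1 if y > k else 0, so it learns P(y > k | y >= k).
    Returns, per task, a list of (example_index, binary_target) pairs.
    """
    return [
        [(i, int(y > k)) for i, y in enumerate(labels) if y >= k]
        for k in range(num_classes - 1)
    ]


def corn_unconditional_probs(cond_logits: List[float]) -> List[float]:
    """Chain rule: P(y > k) = prod_{j <= k} P(y > j | y >= j).

    Each factor lies in (0, 1), so the running product can only shrink,
    which makes the resulting probabilities non-increasing in k.
    """
    probs, running = [], 1.0
    for z in cond_logits:
        running *= sigmoid(z)
        probs.append(running)
    return probs


def corn_predict_rank(cond_logits: List[float]) -> int:
    """Predicted label = number of unconditional probabilities above 0.5."""
    return sum(p > 0.5 for p in corn_unconditional_probs(cond_logits))
```

For example, `corn_predict_rank([3.0, 2.0, -3.0])` multiplies roughly 0.95, 0.88, and 0.05 cumulatively. Two of the three unconditional probabilities exceed 0.5, so the predicted rank is 2. The output layer needs no weight sharing here, because the ordering comes from the cumulative product rather than from a constraint on the weights.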

Consistent Rank Logits for Ordinal Regression with Convolutional Neural Networks

Jan 24, 2019

Abstract: While extraordinary progress has been made towards developing neural network architectures for classification tasks, commonly used loss functions such as the multi-category cross-entropy loss are inadequate for ranking and ordinal regression problems. To address this issue, approaches have been developed that transform ordinal target variables into a series of binary classification tasks, resulting in robust ranking algorithms with good generalization performance. However, to model ordinal information appropriately, a rank-monotonic prediction function is ideally required, such that confidence scores are ordered and consistent. We propose a new framework (Consistent Rank Logits, CORAL) with theoretical guarantees for rank-monotonicity and consistent confidence scores. Through parameter sharing, our framework benefits from low training complexity and can easily be implemented to extend common convolutional neural network classifiers for ordinal regression tasks. Furthermore, our empirical results support the proposed theory and show a substantial improvement over the current state-of-the-art ordinal regression method for age prediction from face images.
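
The binary label extension and parameter sharing described above can be sketched as follows, under these assumptions:

- Labels are integers 0..K-1.
- A single scalar `shared_logit` stands in for the weight term computed from the penultimate layer, which is shared across all K-1 tasks.
- Each task k adds only its own bias b_k.

The function names are hypothetical, and the loss is a naive summed binary cross-entropy written for clarity, not numerical stability. When the biases are non-increasing, the task probabilities are non-increasing too. The CORAL paper's theory argues that training drives the biases into that order.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    """Logistic function mapping a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def coral_extended_labels(label: int, num_classes: int) -> List[int]:
    """Encode rank y in {0..K-1} as K-1 binary targets [y > 0, ..., y > K-2]."""
    return [1 if label > k else 0 for k in range(num_classes - 1)]


def coral_probs(shared_logit: float, biases: List[float]) -> List[float]:
    """P(y > k) = sigmoid(g(x) + b_k), where g(x) is shared by all tasks.

    Only the bias differs between tasks, so non-increasing biases
    give non-increasing (rank-monotonic) probabilities.
    """
    return [sigmoid(shared_logit + b) for b in biases]


def coral_loss(shared_logit: float, biases: List[float], label: int) -> float:
    """Sum of binary cross-entropies over the K-1 extended binary tasks.

    Naive sketch: log(1 - p) can underflow for very large logits.
    """
    probs = coral_probs(shared_logit, biases)
    targets = coral_extended_labels(label, len(biases) + 1)
    return -sum(t * math.log(p) + (1 - t) * math.log(1.0 - p)
                for t, p in zip(targets, probs))


def coral_predict_rank(probs: List[float]) -> int:
    """Predicted rank = number of task probabilities above 0.5."""
    return sum(p > 0.5 for p in probs)
```

With `shared_logit = 0.0` and biases `[2.0, 0.0, -2.0]`, the task probabilities are about 0.88, exactly 0.5, and about 0.12. Only the first exceeds 0.5, so the predicted rank is 1. Because one weight vector serves all tasks, the output layer adds only K-1 bias parameters beyond a single logit.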
