Wenrui Gan

Siamese Labels Auxiliary Network (SiLaNet)

Mar 06, 2021

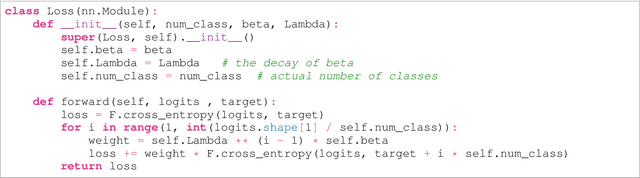

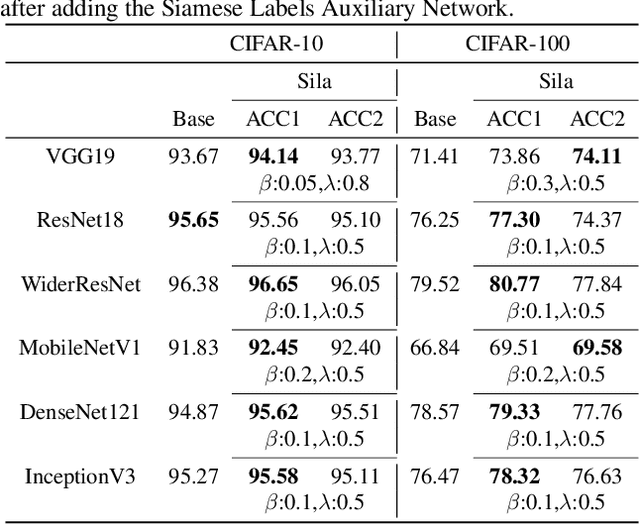

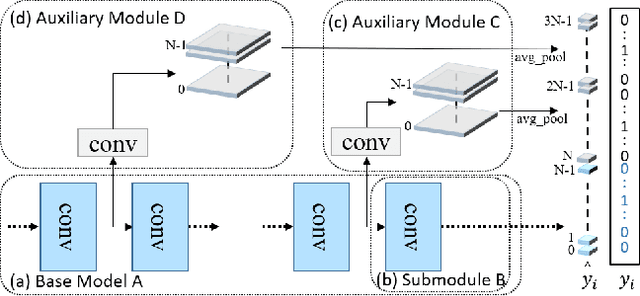

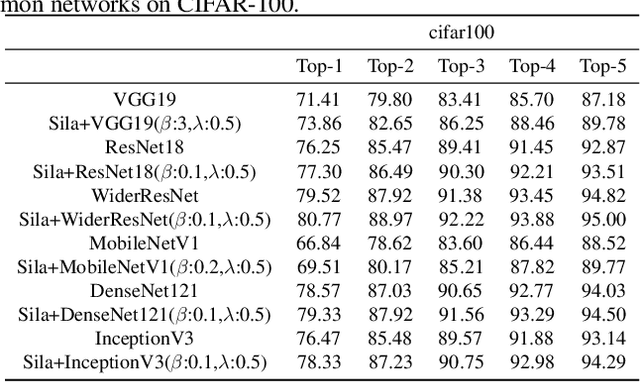

Abstract: Auxiliary information is attracting increasing attention in machine learning. Attempts so far to include such information in state-of-the-art learning processes have often simply appended the auxiliary features at the data level or the feature level. In this paper, we propose a novel training method with new options and architectures. Siamese labels are used as an auxiliary module during the training phase, and this module is removed in the testing phase. The Siamese label module makes the model easier to train and improves its performance at test time. The main contributions can be summarized as follows: 1) Siamese labels are proposed for the first time as auxiliary information to improve learning efficiency; 2) we establish a new architecture, the Siamese Labels Auxiliary Network (SiLaNet), to assist the training of the model; 3) SiLaNet is applied to compress the model parameters by 50% while maintaining high accuracy. For comparison, we tested the network on CIFAR-10 and CIFAR-100 using several common models. The proposed SiLaNet achieves strong results in both accuracy and robustness.
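The general pattern the abstract describes, an auxiliary module that contributes to the loss during training and is dropped before testing, can be illustrated with a minimal sketch. This is not the SiLaNet architecture: the abstract does not say how Siamese labels are constructed, so the auxiliary head below simply reuses the ordinary class label as a stand-in. The class name `AuxiliaryTrainedModel`, the methods `training_loss` and `strip_auxiliary`, and the `aux_weight` parameter are all hypothetical names chosen for illustration.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Negative log-probability of the target class."""
    return -math.log(softmax(logits)[target])


def linear(weights, x):
    """Apply a weight matrix (one row per output unit) to the vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


class AuxiliaryTrainedModel:
    """Hypothetical model: shared backbone, main head, train-only auxiliary head."""

    def __init__(self, n_in, n_hidden, n_classes, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.backbone = init(n_hidden, n_in)
        self.main_head = init(n_classes, n_hidden)
        # Assumption: the auxiliary head is a second classifier on the same
        # features. The paper's Siamese label module may look quite different.
        self.aux_head = init(n_classes, n_hidden)

    def features(self, x):
        """Shared representation: linear layer followed by ReLU."""
        return [max(0.0, h) for h in linear(self.backbone, x)]

    def training_loss(self, x, target, aux_weight=0.5):
        """Main loss plus a weighted auxiliary loss (used only in training)."""
        f = self.features(x)
        main = cross_entropy(linear(self.main_head, f), target)
        aux = cross_entropy(linear(self.aux_head, f), target)
        return main + aux_weight * aux

    def strip_auxiliary(self):
        """Discard the auxiliary head before testing."""
        self.aux_head = None

    def predict(self, x):
        """Inference uses only the backbone and the main head."""
        logits = linear(self.main_head, self.features(x))
        return max(range(len(logits)), key=logits.__getitem__)
```

Because `predict` never touches the auxiliary head, calling `strip_auxiliary()` leaves predictions unchanged while reducing the number of stored parameters, which is the property the abstract relies on when it removes the module at test time.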
