Wenchao Cao

High-Sensitivity Iodine Imaging by Combining Spectral CT Technologies

Mar 29, 2021

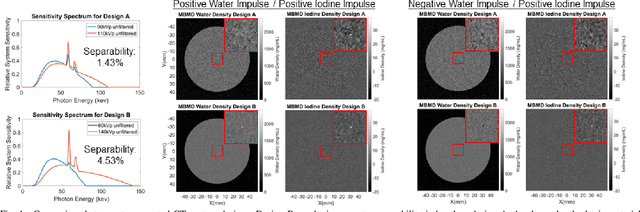

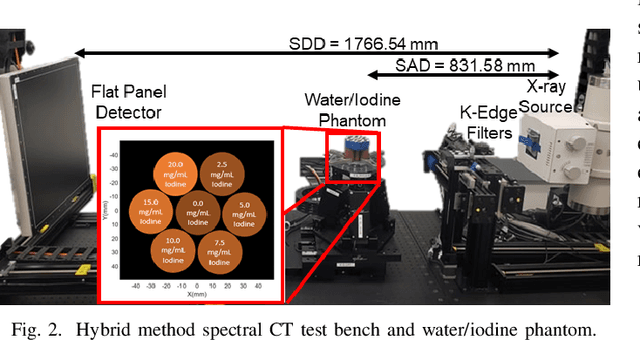

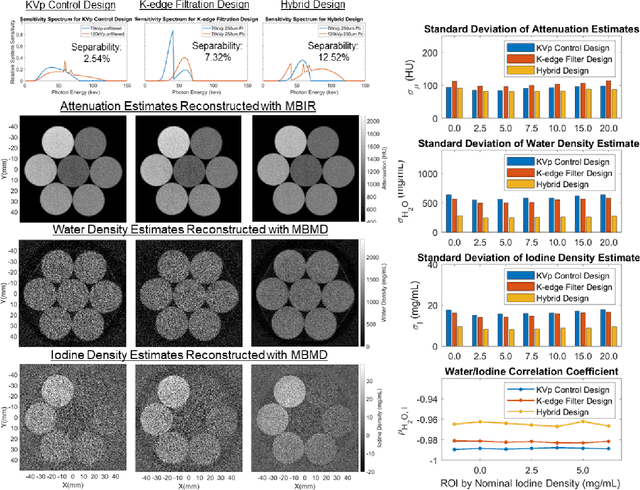

Abstract: Spectral CT offers enhanced material discrimination over single-energy systems and enables quantitative estimation of basis material density images. Water/iodine decomposition in contrast-enhanced CT is one of the most widespread applications of this technology in the clinic. However, low concentrations of iodine can be difficult to estimate accurately, limiting potential clinical applications and/or raising injected contrast agent requirements. We seek high-sensitivity spectral CT system designs which minimize noise in water/iodine density estimates. In this work, we present a model-driven framework for spectral CT system design optimization to maximize material separability. We apply this tool to optimize the sensitivity spectra on a spectral CT test bench using a hybrid design which combines source kVp control and k-edge filtration. Following design optimization, we scanned a water/iodine phantom with the hybrid spectral CT system and performed dose-normalized comparisons to two single-technique designs which use only kVp control or only k-edge filtration. The material decomposition results show that the hybrid system reduces both standard deviation and cross-material noise correlations compared to the designs where the constituent technologies are used individually.
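To make "material separability" and "cross-material noise correlation" concrete, here is a minimal sketch. It is not the authors' framework: it assumes a linearized two-channel model in which each spectral measurement is a weighted sum of water and iodine densities, with independent Gaussian noise per channel, and it propagates that noise through the 2x2 inversion (Cov(x) = A^-1 diag(sigma^2) A^-T). The sensitivity matrices, noise levels, and the function names `decomposition_covariance` and `summarize` are hypothetical, chosen only for illustration.

```python
import math


def decomposition_covariance(A, sigma):
    """Noise covariance of a 2x2 linear water/iodine decomposition x = A^-1 y.

    Hypothetical linearized model (not the paper's system model):
      A[k][m]  sensitivity of spectral channel k to basis material m
               (m = 0: water, m = 1: iodine)
      sigma[k] noise standard deviation of channel k; channels are
               assumed independent.
    Returns Cov(x) = A^-1 diag(sigma^2) A^-T as a nested 2x2 list.
    """
    (a, b), (c, d) = A
    det = a * d - b * c
    if abs(det) < 1e-12:
        # Identical channel responses: the two materials cannot be separated.
        raise ValueError("singular sensitivity matrix")
    inv = [[d / det, -b / det], [-c / det, a / det]]
    cov = [[0.0, 0.0], [0.0, 0.0]]
    for i in range(2):
        for j in range(2):
            cov[i][j] = sum(inv[i][k] * inv[j][k] * sigma[k] ** 2 for k in range(2))
    return cov


def summarize(cov):
    """Return (water std, iodine std, water/iodine correlation coefficient)."""
    sd_water = math.sqrt(cov[0][0])
    sd_iodine = math.sqrt(cov[1][1])
    corr = cov[0][1] / (sd_water * sd_iodine)
    return sd_water, sd_iodine, corr


# Hypothetical designs: rows are spectral channels, columns are (water, iodine).
# "poor": the two channels respond similarly to iodine (weak spectral separation).
# "good": the channels differ more in their iodine sensitivity.
A_poor = [[1.0, 4.0], [0.9, 3.0]]
A_good = [[1.0, 5.0], [0.8, 2.0]]
sigma = [1.0, 1.0]  # equal channel noise, a crude stand-in for equal dose

poor = summarize(decomposition_covariance(A_poor, sigma))
good = summarize(decomposition_covariance(A_good, sigma))

if __name__ == "__main__":
    for name, (sw, si, r) in (("poor", poor), ("good", good)):
        print(f"{name}: sd_water={sw:.3f} sd_iodine={si:.3f} corr={r:+.3f}")
```

In this toy setting the better-separated design gives a lower iodine standard deviation and a weaker (though still negative) water/iodine correlation, which qualitatively mirrors the trend reported above. A real design study would replace the fixed matrix with a model of the source spectra, filters, detector response, and dose normalization.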
