Weiji He

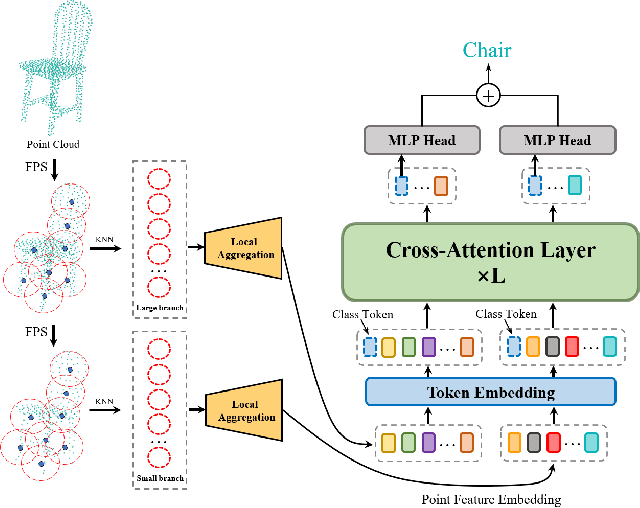

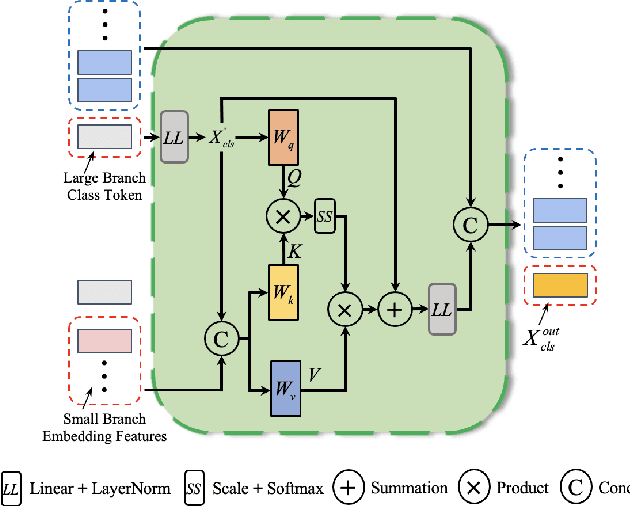

PointCAT: Cross-Attention Transformer for point cloud

Apr 06, 2023

Abstract: Transformer-based models have significantly advanced natural language processing and computer vision in recent years. However, due to the irregular and disordered structure of point cloud data, transformer-based models for 3D deep learning are still in their infancy compared to other methods. In this paper we present Point Cross-Attention Transformer (PointCAT), a novel end-to-end network architecture that uses a cross-attention mechanism for point cloud representation. Our approach combines multi-scale features via two separate cross-attention transformer branches. To reduce the computational cost added by the multi-branch structure, we further introduce an efficient model for shape classification, which processes only the single class token of one branch as a query to calculate the attention map with the other. Extensive experiments demonstrate that our method outperforms or achieves comparable performance to several approaches in shape classification, part segmentation and semantic segmentation tasks.
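The class-token idea in the abstract can be illustrated with a minimal NumPy sketch. It is not the PointCAT implementation: the function name `class_token_cross_attention`, the single attention head, the projection matrices `w_q`, `w_k`, `w_v`, and the residual connection are all assumptions made for illustration. The point it shows is that using one class token as the only query gives an attention map of length n (one weight per token of the other branch) instead of an n-by-n map.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def class_token_cross_attention(cls_a, tokens_b, w_q, w_k, w_v):
    """Single-head cross-attention with one query (hypothetical helper).

    cls_a:    (d,)   class token of branch A, used as the only query
    tokens_b: (n, d) tokens of branch B, used as keys and values
    w_q, w_k, w_v: (d, d) projection matrices (assumed, not from the paper)
    Returns the updated class token (d,) and the attention weights (n,).
    """
    q = cls_a @ w_q                          # (d,)
    k = tokens_b @ w_k                       # (n, d)
    v = tokens_b @ w_v                       # (n, d)
    scores = k @ q / np.sqrt(q.shape[-1])    # (n,) scaled dot products
    attn = softmax(scores)                   # weights over branch-B tokens
    # Residual connection is an assumption of this sketch.
    return cls_a + attn @ v, attn


rng = np.random.default_rng(0)
d, n = 8, 5
cls_a = rng.normal(size=d)
tokens_b = rng.normal(size=(n, d))
w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))
updated_cls, attn = class_token_cross_attention(cls_a, tokens_b, w_q, w_k, w_v)
```

With a single query, the cost of the attention step grows linearly in the number of tokens of the other branch, which is consistent with the efficiency motivation stated in the abstract.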

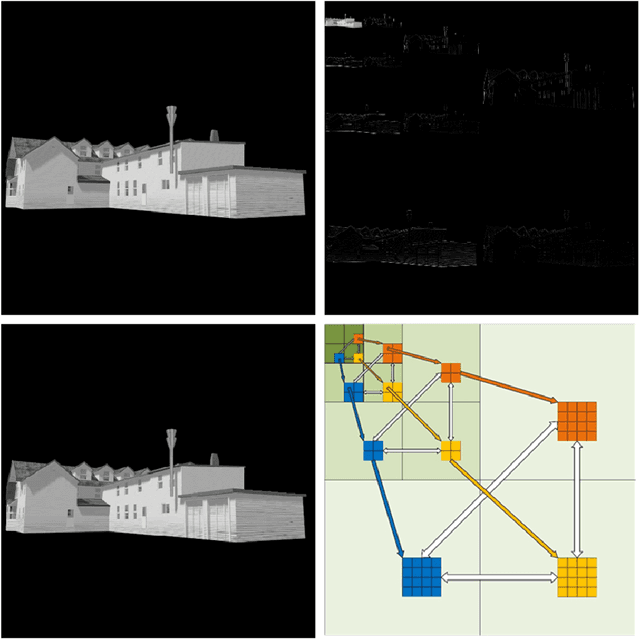

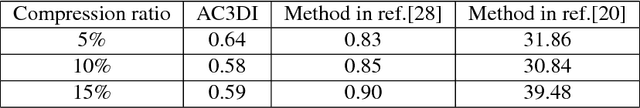

Adaptive compressed 3D imaging based on wavelet trees and Hadamard multiplexing with a single photon counting detector

Sep 15, 2017

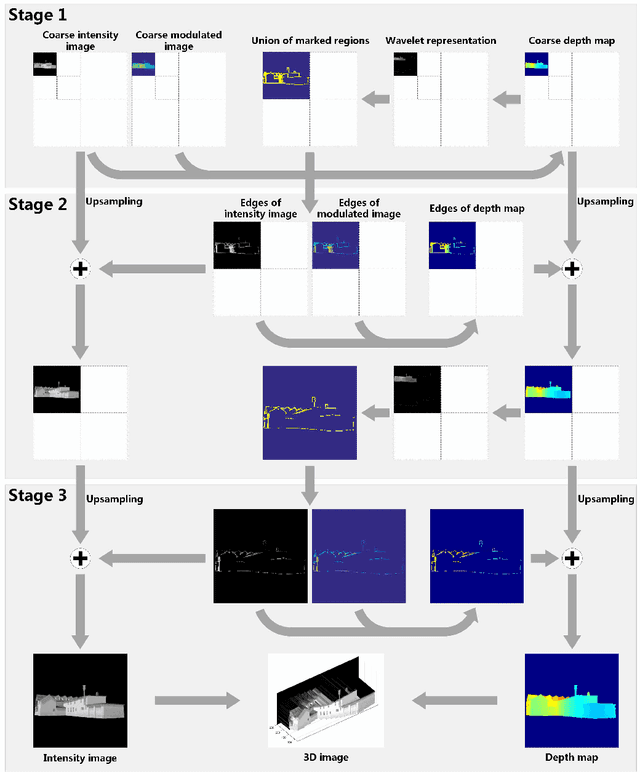

Abstract: Photon counting 3D imaging can obtain 3D images with single-photon sensitivity and sub-ns temporal resolution. However, it is challenging to scale to high spatial resolution. In this work, we demonstrate a photon counting 3D imaging technique with short-pulsed structured illumination and a single-pixel photon counting detector. The proposed multi-resolution photon counting 3D imaging technique acquires a high-resolution 3D image from a coarse image and edges at successively finer resolutions sampled by Hadamard multiplexing along the wavelet trees. The detected power is significantly increased thanks to the Hadamard multiplexing. Both the required measurements and the reconstruction time can be significantly reduced by performing wavelet-tree-based prediction of edge regions and Hadamard demultiplexing, which makes the proposed technique suitable for scenes with high spatial resolution. The experimental results indicate that a 3D image at a resolution of up to 512×512 pixels can be acquired and retrieved in as little as 17 seconds.
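The Hadamard multiplexing and demultiplexing step mentioned in the abstract can be sketched in a few lines of NumPy. This is a simplified, noise-free illustration under stated assumptions, not the authors' pipeline: it uses ideal ±1 Sylvester-type Hadamard patterns, treats the scene as a flat intensity vector, and leaves out the wavelet-tree edge prediction and the time-of-flight dimension entirely. The helper names `hadamard`, `multiplex`, and `demultiplex` are hypothetical.

```python
import numpy as np


def hadamard(n):
    """Sylvester-construction Hadamard matrix of order n (n a power of two)."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = np.array([[1]])
    while h.shape[0] < n:
        h = np.block([[h, h], [h, -h]])
    return h


def multiplex(x):
    """Simulate single-pixel measurements: each one sums the scene under one pattern."""
    return hadamard(x.size) @ x


def demultiplex(y):
    """Recover the scene from the measurements, using H.T @ H = n * I."""
    return hadamard(y.size).T @ y / y.size


scene = np.array([0.0, 3.0, 1.0, 0.0, 2.0, 0.0, 0.0, 5.0])  # toy intensities
measurements = multiplex(scene)
recovered = demultiplex(measurements)
```

Each measurement collects light from every pixel in the block rather than from one pixel at a time, which is the sense in which multiplexing raises the detected power per measurement.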
