Vrizlynn L. L. Thing

CPE-Identifier: Automated CPE identification and CVE summaries annotation with Deep Learning and NLP

May 22, 2024

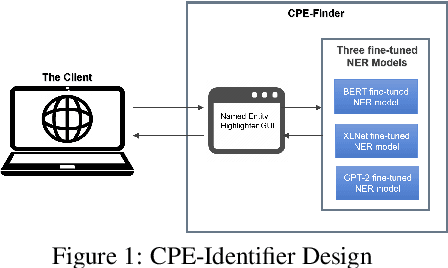

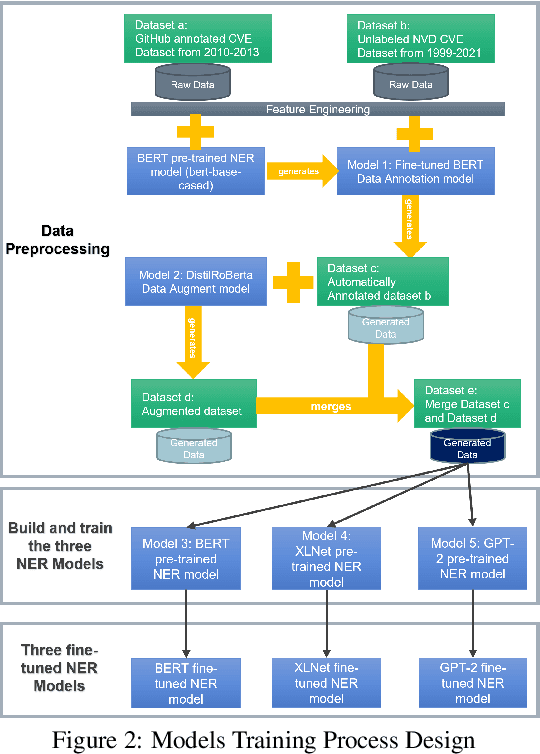

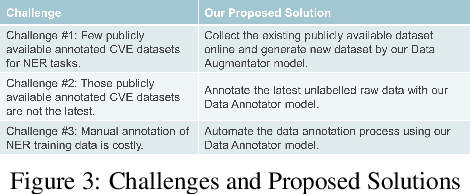

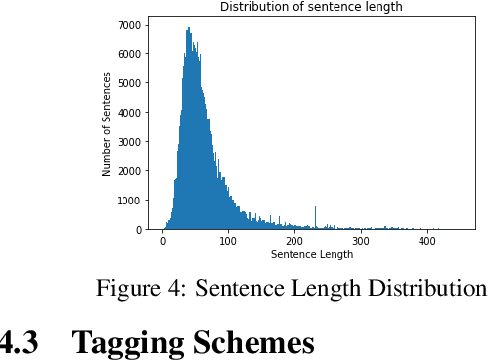

Abstract: With the drastic increase in the number of new vulnerabilities in the National Vulnerability Database (NVD) every year, the workload for NVD analysts to associate the Common Platform Enumeration (CPE) with the Common Vulnerabilities and Exposures (CVE) summaries becomes increasingly laborious and slow. The delay causes organisations, which depend on NVD for vulnerability management and security measurement, to be more vulnerable to zero-day attacks. Thus, it is essential to come up with a technique and tool to extract the CPEs in the CVE summaries accurately and quickly. In this work, we propose the CPE-Identifier system, an automated system for annotating and extracting CPEs from CVE summaries. The system can be used as a tool to identify CPE entities from new CVE text inputs. Moreover, we also automate the data generation and labeling processes using deep learning models. Due to the complexity of the CVE texts, new technical terminology appears frequently. To handle novel words in future CVE texts, we apply Natural Language Processing (NLP) Named Entity Recognition (NER) to identify new technical jargon in the text. Our proposed model achieves an F1 score of 95.48%, an accuracy score of 99.13%, a precision of 94.83%, and a recall of 96.14%. We show that it outperforms prior works on automated CVE-CPE labeling by more than 9% on all metrics.
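As a quick consistency check on the figures quoted in this abstract, the sketch below recomputes F1 as the harmonic mean of the reported precision and recall. It assumes the reported F1 is derived from those two aggregate values in the standard way; the abstract does not say how the metrics were averaged, and the function name `f1_score` is only illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both on the same scale)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported in the abstract, in percent.
reported_precision = 94.83
reported_recall = 96.14

f1 = f1_score(reported_precision, reported_recall)
print(f"F1 = {f1:.2f}%")  # agrees with the reported 95.48% after rounding
```

Under that assumption the three reported values are mutually consistent.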

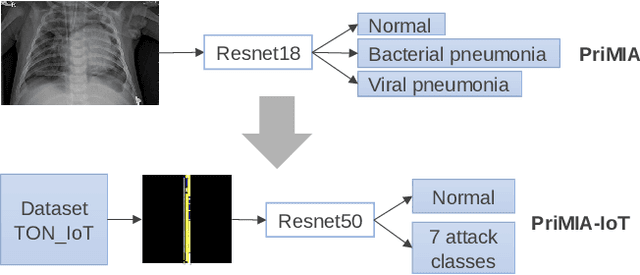

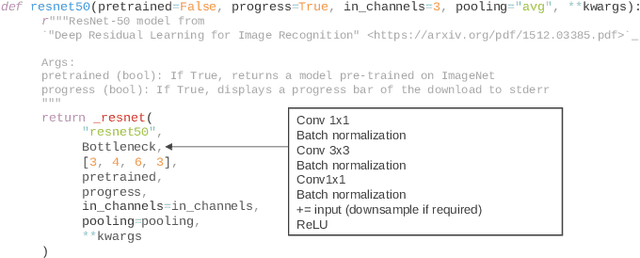

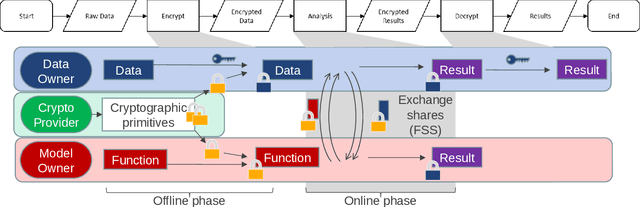

Privacy-Preserving Intrusion Detection using Convolutional Neural Networks

Apr 15, 2024

Abstract: Privacy-preserving analytics is designed to protect valuable assets. A common service provision involves the input data from the client and the model on the analyst's side. The importance of privacy preservation is fuelled by legal obligations and intellectual property concerns. We explore the use case of a model owner providing an analytic service on a customer's private data. No information about the data shall be revealed to the analyst and no information about the model shall be leaked to the customer. Current methods involve costs: accuracy deterioration and computational complexity. The complexity, in turn, results in a longer processing time, increased requirements on computing resources, and data communication between the client and the server. In order to deploy such a service architecture, we need to evaluate the optimal setting that fits the constraints, which is what this paper addresses. In this work, we enhance an attack detection system based on Convolutional Neural Networks with privacy-preserving technology based on the PriMIA framework, which was initially designed for medical data.

An adversarial attack approach for eXplainable AI evaluation on deepfake detection models

Dec 08, 2023

Abstract: With the rising concern on model interpretability, the application of eXplainable AI (XAI) tools on deepfake detection models has been a topic of interest recently. In image classification tasks, XAI tools highlight pixels influencing the decision given by a model. This helps in troubleshooting the model and determining areas that may require further tuning of parameters. With a wide range of tools available in the market, choosing the right tool for a model becomes necessary as each one may highlight different sets of pixels for a given image. There is a need to evaluate different tools and decide the best performing ones among them. Generic XAI evaluation methods like insertion or removal of salient pixels/segments are applicable for general image classification tasks but may produce less meaningful results when applied on deepfake detection models due to their functionality. In this paper, we perform experiments to show that generic removal/insertion XAI evaluation methods are not suitable for deepfake detection models. We also propose and implement an XAI evaluation approach specifically suited for deepfake detection models.
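For readers unfamiliar with the generic removal-style evaluation this abstract refers to, here is a minimal sketch of a deletion curve on a toy example. It illustrates only the generic method, not the evaluation approach the paper proposes. The names `deletion_curve`, `area_under_curve` and `toy_model` are hypothetical, and the "image" is a flat list of four pixel values standing in for real input.

```python
from typing import Callable, List, Sequence


def deletion_curve(
    image: Sequence[float],
    saliency: Sequence[float],
    model: Callable[[Sequence[float]], float],
    baseline: float = 0.0,
) -> List[float]:
    """Model scores as pixels are removed in order of decreasing saliency."""
    pixels = list(image)
    order = sorted(range(len(pixels)), key=lambda i: saliency[i], reverse=True)
    scores = [model(pixels)]
    for idx in order:
        pixels[idx] = baseline  # "remove" the pixel by setting it to the baseline
        scores.append(model(pixels))
    return scores


def area_under_curve(scores: Sequence[float]) -> float:
    """Trapezoidal area with the x-axis normalised to [0, 1]."""
    steps = len(scores) - 1
    return sum((scores[i] + scores[i + 1]) / 2 for i in range(steps)) / steps


def toy_model(pixels: Sequence[float]) -> float:
    """Toy stand-in for a classifier: the score depends only on pixels 0 and 1."""
    return (pixels[0] + pixels[1]) / 2


image = [1.0, 1.0, 1.0, 1.0]
good_saliency = [0.9, 0.8, 0.1, 0.0]  # ranks the influential pixels first
poor_saliency = [0.0, 0.1, 0.8, 0.9]  # ranks the influential pixels last

good_auc = area_under_curve(deletion_curve(image, good_saliency, toy_model))
poor_auc = area_under_curve(deletion_curve(image, poor_saliency, toy_model))
print(good_auc, poor_auc)
```

In this scheme a lower area under the deletion curve is read as a better explanation, because the score collapses sooner when the truly influential pixels are removed first.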

Enhancing Cyber-Resilience in Self-Healing Cyber-Physical Systems with Implicit Guarantees

May 15, 2023

Abstract: Self-Healing Cyber-Physical Systems (SH-CPS) effectively recover from system-perceived failures without human intervention. They ensure a level of resilience and tolerance to unforeseen situations that arise from intrinsic system and component degradation, errors, or malicious attacks. Implicit redundancy can be exploited in SH-CPS to structurally adapt without the need to explicitly duplicate components. However, implicitly redundant components do not guarantee the same level of dependability as the primary component used to provide a given function. Additional processes are needed to restore critical system functionalities as desired. This work introduces implicit guarantees to ensure the dependability of implicitly redundant components and processes. Implicit guarantees can be obtained through inheritance and decomposition. Therefore, a level of dependability can be guaranteed in SH-CPS after adaptation and recovery while complying with requirements. We demonstrate compliance with the requirement guarantees while ensuring resilience in SH-CPS.

Few-shot Weakly-supervised Cybersecurity Anomaly Detection

Apr 15, 2023

Abstract: With increased reliance on Internet-based technologies, cyberattacks compromising users' sensitive data are becoming more prevalent. The scale and frequency of these attacks are escalating rapidly, affecting systems and devices connected to the Internet. Traditional defense mechanisms may not be sufficiently equipped to handle the complex and ever-changing new threats. Significant breakthroughs in machine learning methods, including deep learning, have attracted interest from the cybersecurity research community in further enhancing existing anomaly detection methods. Unfortunately, collecting labelled anomaly data for all new, evolving and sophisticated attacks is not practical. Training and tuning the machine learning model for anomaly detection using only a handful of labelled data samples is a pragmatic approach. Therefore, few-shot weakly-supervised anomaly detection is an encouraging research direction. In this paper, we propose an enhancement to an existing few-shot weakly-supervised deep learning anomaly detection framework. This framework incorporates data augmentation, representation learning and ordinal regression. We then evaluated and showed the performance of our implemented framework on three benchmark datasets: NSL-KDD, CIC-IDS2018, and TON_IoT.

Feature Mining for Encrypted Malicious Traffic Detection with Deep Learning and Other Machine Learning Algorithms

Apr 07, 2023

Abstract: The popularity of encryption mechanisms poses a great challenge to malicious traffic detection, because traditional detection techniques cannot work without decrypting the encrypted traffic. Currently, research on encrypted malicious traffic detection without decryption has focused on feature extraction and the choice of machine learning or deep learning algorithms. In this paper, we first provide an in-depth analysis of traffic features and compare different state-of-the-art traffic feature creation approaches, while proposing a novel concept for encrypted traffic features which is specifically designed for encrypted malicious traffic analysis. In addition, we propose a framework for encrypted malicious traffic detection. The framework is a two-layer detection framework which consists of both deep learning and traditional machine learning algorithms. Through comparative experiments, it outperforms classical deep learning and traditional machine learning algorithms, such as ResNet and Random Forest. Moreover, to provide sufficient training data for the deep learning model, we also curate a dataset composed entirely of public datasets. The composed dataset is more comprehensive than using any public dataset alone. Lastly, we discuss the future directions of this research.

* Computers & Security, Volume 128, No. 103143, 2023

Deepfake Detection with Deep Learning: Convolutional Neural Networks versus Transformers

Apr 07, 2023

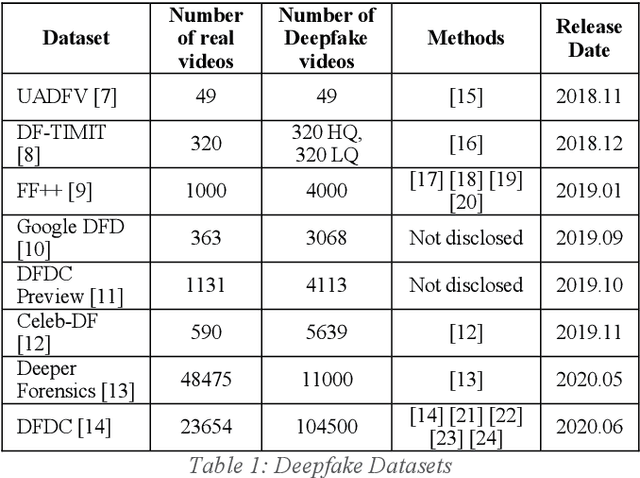

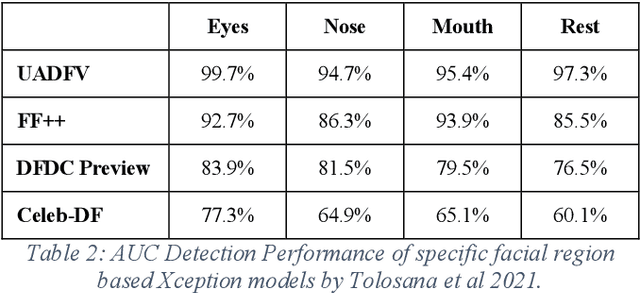

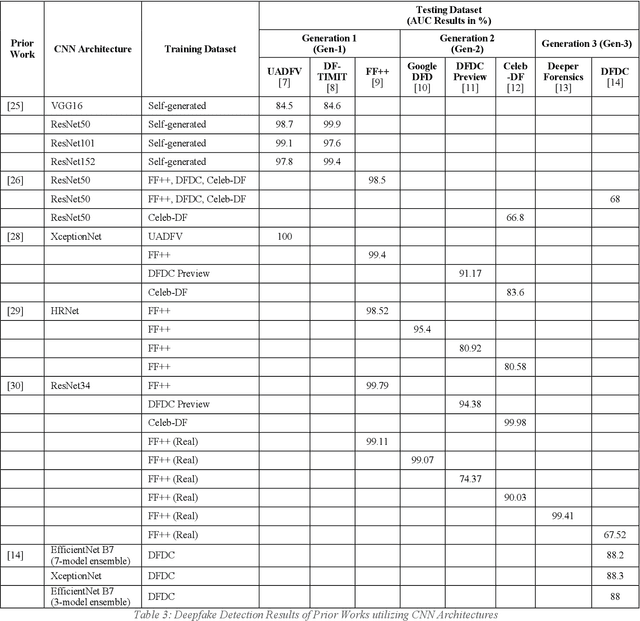

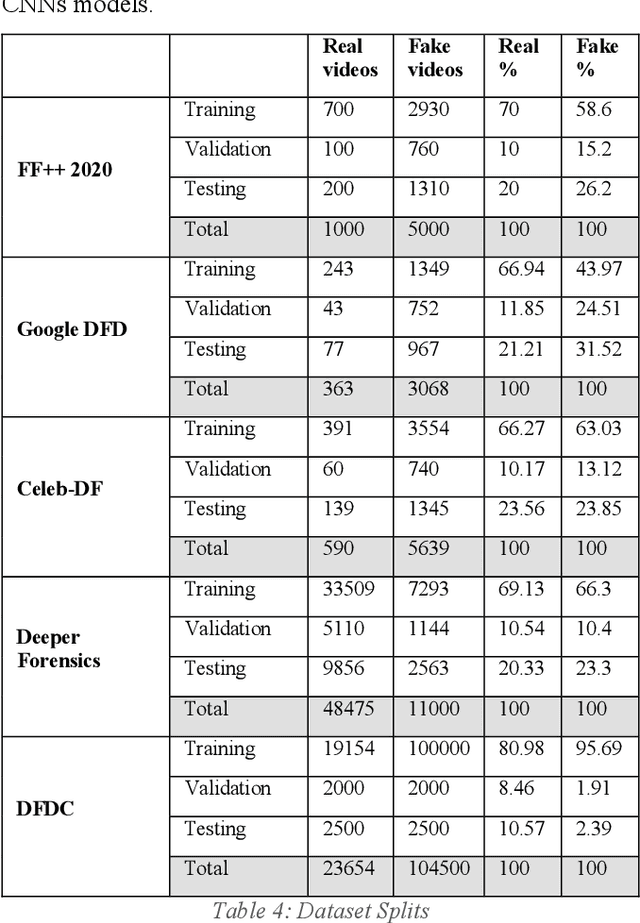

Abstract: The rapid evolution of deepfake creation technologies is seriously threatening media information trustworthiness. The consequences impacting targeted individuals and institutions can be dire. In this work, we study the evolution of deep learning architectures, particularly CNNs and Transformers. We identified eight promising deep learning architectures, designed and developed our deepfake detection models and conducted experiments over well-established deepfake datasets. These datasets included the latest second and third generation deepfake datasets. We evaluated the effectiveness of our developed single-model detectors in deepfake detection and cross-dataset evaluations. We achieved 88.74%, 99.53%, 97.68%, 99.73% and 92.02% accuracy and 99.95%, 100%, 99.88%, 99.99% and 97.61% AUC, in the detection of FF++ 2020, Google DFD, Celeb-DF, Deeper Forensics and DFDC deepfakes, respectively. We also identified and showed the unique strengths of CNN and Transformer models and analysed the observed relationships among the different deepfake datasets, to aid future developments in this area.

A Hybrid Deep Learning Anomaly Detection Framework for Intrusion Detection

Dec 02, 2022

Abstract: Cyber intrusion attacks that compromise the users' critical and sensitive data are escalating in volume and intensity, especially with the growing connections between our daily life and the Internet. The large volume and high complexity of such intrusion attacks have impeded the effectiveness of most traditional defence techniques. At the same time, the remarkable performance of machine learning methods, especially deep learning, in computer vision has garnered research interest from the cyber security community in further enhancing and automating intrusion detection. However, the expensive data labeling and the limited availability of anomalous data make it challenging to train an intrusion detector in a fully supervised manner. Therefore, intrusion detection based on unsupervised anomaly detection is also important. In this paper, we propose a three-stage network intrusion detection framework based on deep learning anomaly detection. The framework comprises an integration of unsupervised (K-means clustering), semi-supervised (GANomaly) and supervised learning (CNN) algorithms. We then evaluated and showed the performance of our implemented framework on three benchmark datasets: NSL-KDD, CIC-IDS2018, and TON_IoT.

* Keywords: Cybersecurity, Anomaly Detection, Intrusion Detection, Deep Learning, Unsupervised Learning, Neural Networks; https://ieeexplore.ieee.org/document/9799486

IEEE Big Data Cup 2022: Privacy Preserving Matching of Encrypted Images with Deep Learning

Nov 18, 2022

Abstract: Smart sensors, devices and systems deployed in smart cities have brought improved physical protections to their citizens. Enhanced crime prevention, and fire and life safety protection are achieved through these technologies that perform motion detection, threat and actor profiling, and real-time alerts. However, an important requirement in these increasingly prevalent deployments is the preservation of privacy and enforcement of protection of personally identifiable information. Thus, strong encryption and anonymization techniques should be applied to the collected data. In this IEEE Big Data Cup 2022 challenge, different masking, encoding and homomorphic encryption techniques were applied to the images to protect the privacy of their contents. Participants are required to develop detection solutions to perform privacy preserving matching of these images. In this paper, we describe our solution which is based on state-of-the-art deep convolutional neural networks and various data augmentation techniques. Our solution achieved 1st place at the IEEE Big Data Cup 2022: Privacy Preserving Matching of Encrypted Images Challenge.

* Keywords: privacy preservation, privacy enhancing, masking, encoding, homomorphic encryption, deep learning, convolutional neural networks

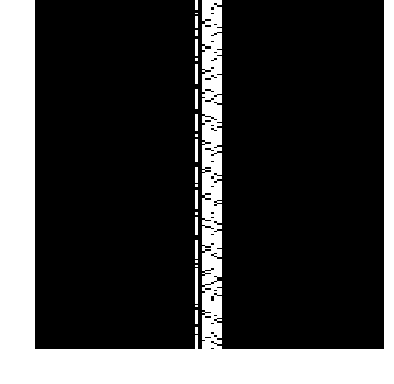

Intrusion Detection in Internet of Things using Convolutional Neural Networks

Nov 18, 2022

Abstract: Internet of Things (IoT) has become a popular paradigm to fulfil industry needs such as asset tracking, resource monitoring and automation. As security mechanisms are often neglected during the deployment of IoT devices, they are more easily attacked by complex, large-volume intrusion attacks using advanced techniques. Artificial Intelligence (AI) has been used by the cyber security community in the past decade to automatically identify such attacks. However, deep learning methods have yet to be extensively explored for Intrusion Detection Systems (IDS) specifically for IoT. Most recent works are based on time sequential models like LSTM, and there is a shortage of research on CNNs as they are not naturally suited for this problem. In this article, we propose a novel solution to the intrusion attacks against IoT devices using CNNs. The data is encoded for the convolutional operations so that CNNs can capture patterns in the sensor data over time that are useful for attack detection. The proposed method is integrated with two classical CNNs, ResNet and EfficientNet, and the detection performance is evaluated. The experimental results show significant improvement in both true positive rate and false positive rate compared to the baseline using LSTM.

* Keywords: Cybersecurity, Intrusion Detection, IoT, Deep Learning, Convolutional Neural Networks; https://ieeexplore.ieee.org/abstract/document/9647828
