Vinit Shah

Low Latency Real-Time Seizure Detection Using Transfer Deep Learning

Feb 16, 2022

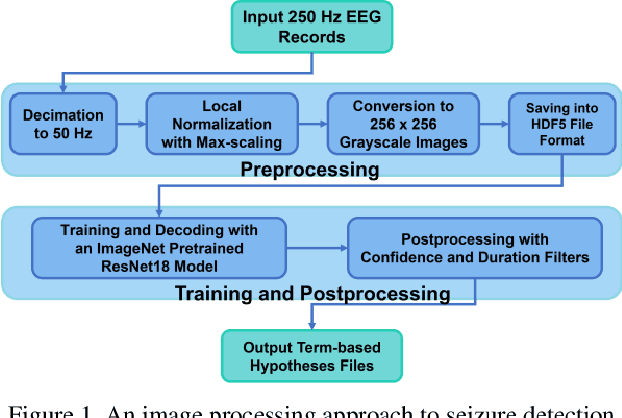

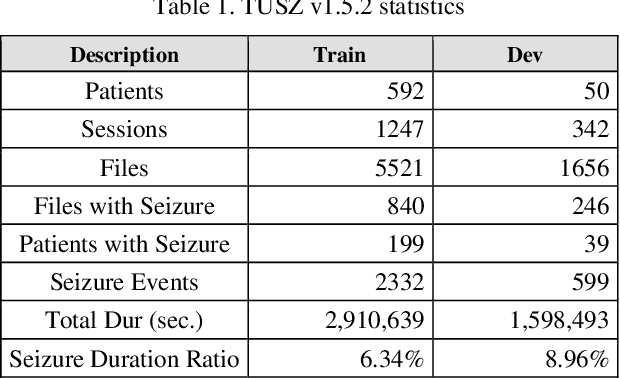

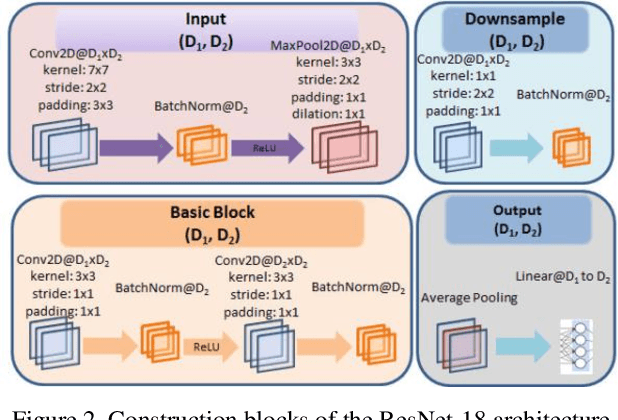

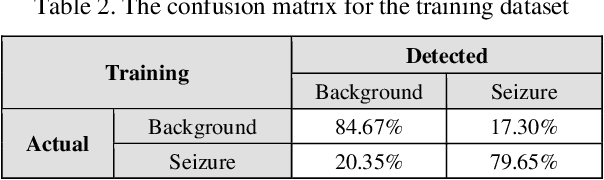

Abstract: Scalp electroencephalogram (EEG) signals inherently have a low signal-to-noise ratio due to the way the signal is electrically transduced. Temporal and spatial information must be exploited to achieve accurate detection of seizure events. Most popular approaches to seizure detection using deep learning do not jointly model this information or require multiple passes over the signal, which makes the systems inherently non-causal. In this paper, we exploit both simultaneously by converting the multichannel signal to a grayscale image and using transfer learning to achieve high performance. The proposed system is trained end-to-end with only very simple pre- and postprocessing operations which are computationally lightweight and have low latency, making them conducive to clinical applications that require real-time processing. We have achieved a performance of 42.05% sensitivity with 5.78 false alarms per 24 hours on the development dataset of v1.5.2 of the Temple University Hospital Seizure Detection Corpus. On a single-core CPU operating at 1.7 GHz, the system runs faster than real-time (0.58 xRT), uses 16 Gbytes of memory, and has a latency of 300 msec.
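The abstract does not say how the multichannel signal is mapped to a grayscale image. The following is only a minimal sketch of one plausible mapping, assuming a window shaped (channels, samples) and per-window min-max scaling to 8-bit intensities. The function name `eeg_window_to_grayscale`, the window size, and the scaling choice are illustrative assumptions, not the authors' method.

```python
import numpy as np


def eeg_window_to_grayscale(window: np.ndarray) -> np.ndarray:
    """Map a (channels, samples) EEG window to an 8-bit grayscale image.

    Hypothetical helper: rows are channels (spatial axis), columns are
    samples (temporal axis). Min-max scaling per window is an assumption.
    """
    window = np.asarray(window, dtype=np.float64)
    lo, hi = window.min(), window.max()
    if hi == lo:
        # A flat window carries no contrast; return an all-black image.
        return np.zeros(window.shape, dtype=np.uint8)
    scaled = (window - lo) / (hi - lo)  # values in [0, 1]
    return np.round(scaled * 255).astype(np.uint8)


# Synthetic data standing in for a 22-channel, 250-sample window.
rng = np.random.default_rng(0)
demo_window = rng.standard_normal((22, 250))
image = eeg_window_to_grayscale(demo_window)
```

Arranging channels along one image axis and time along the other is what would let a single 2-D convolutional network see spatial and temporal context together, which is the property the abstract emphasizes.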

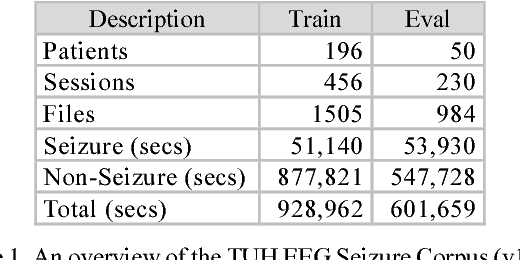

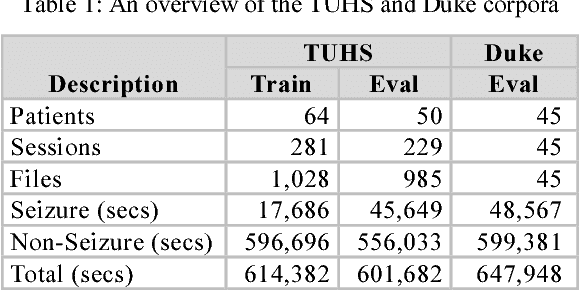

The Temple University Hospital Seizure Detection Corpus

Jan 03, 2018

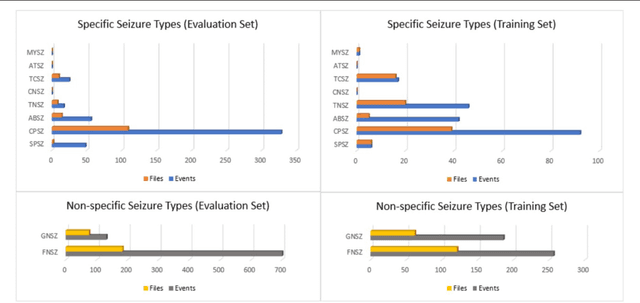

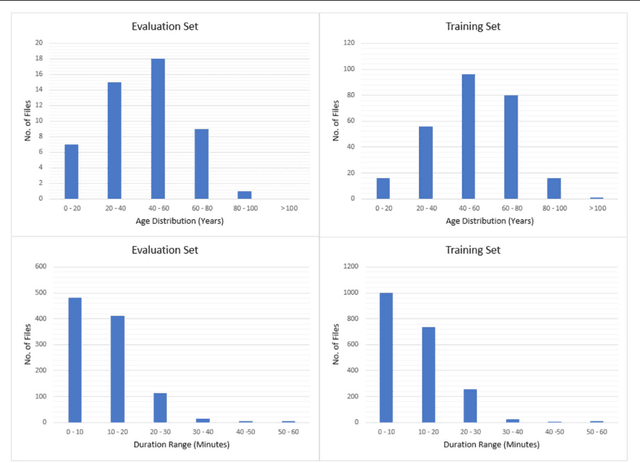

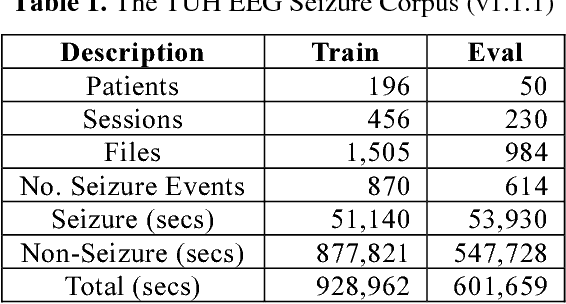

Abstract: We introduce the TUH EEG Seizure Corpus (TUSZ), which is the largest open-source corpus of its type, and represents an accurate characterization of clinical conditions. In this paper, we describe the techniques used to develop TUSZ, evaluate their effectiveness, and present some descriptive statistics on the resulting corpus.

Optimizing Channel Selection for Seizure Detection

Jan 03, 2018

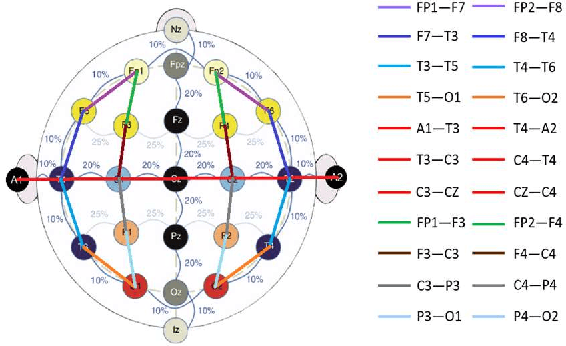

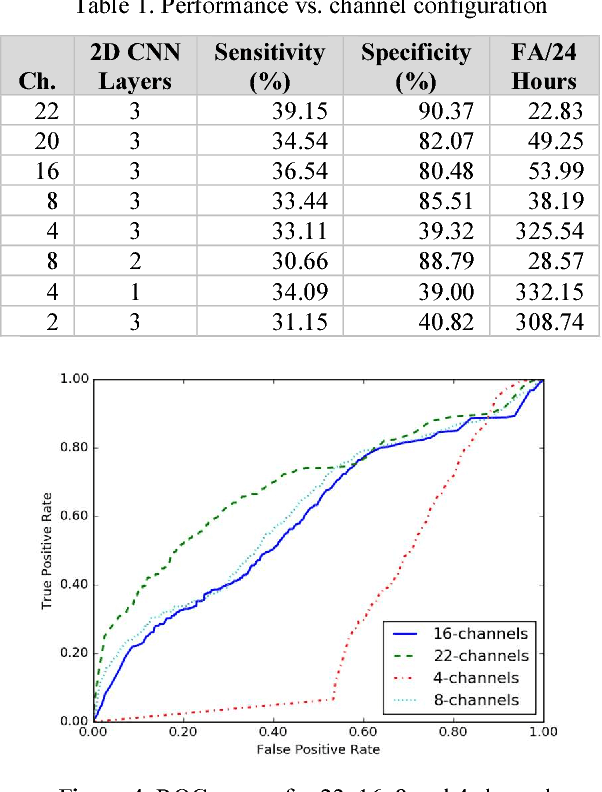

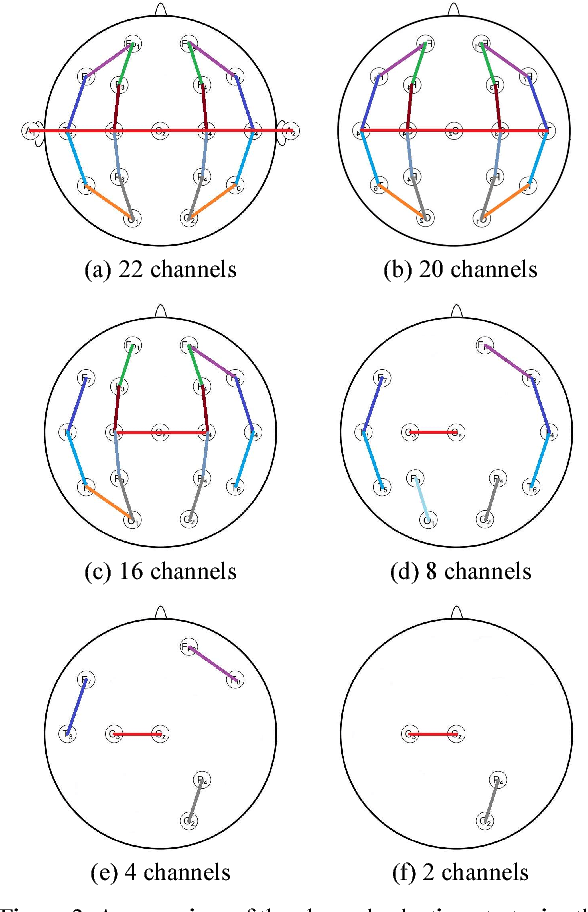

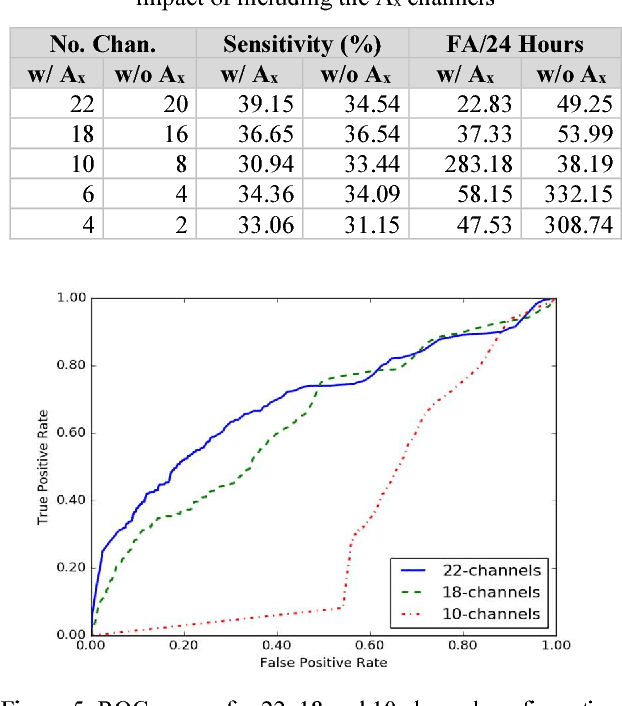

Abstract: Interpretation of electroencephalogram (EEG) signals can be complicated by obfuscating artifacts. Artifact detection plays an important role in the observation and analysis of EEG signals. Spatial information contained in the placement of the electrodes can be exploited to accurately detect artifacts. However, when fewer electrodes are used, less spatial information is available, making it harder to detect artifacts. In this study, we investigate the performance of a deep learning algorithm, CNN-LSTM, on several channel configurations. Each configuration was designed to minimize the amount of spatial information lost compared to a standard 22-channel EEG. Systems using a reduced number of channels ranging from 8 to 20 achieved sensitivities between 33% and 37% with false alarms in the range of [38, 50] per 24 hours. False alarms increased dramatically (e.g., over 300 per 24 hours) when the number of channels was further reduced. Baseline performance of a system that used all 22 channels was 39% sensitivity with 23 false alarms. Since the 22-channel system was the only system that included referential channels, the rapid increase in the false alarm rate as the number of channels was reduced underscores the importance of retaining referential channels for artifact reduction. This cautionary result is important because one of the biggest differences between various types of EEGs administered is the type of referential channel used.

Gated Recurrent Networks for Seizure Detection

Jan 03, 2018

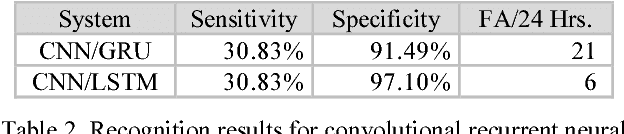

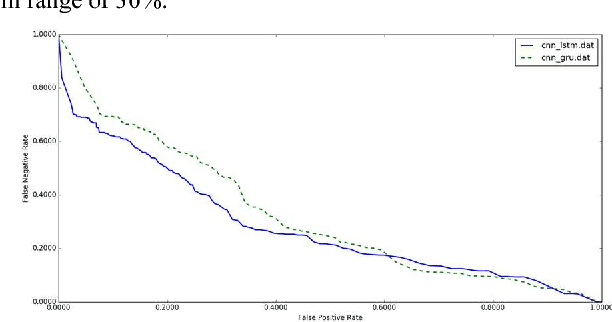

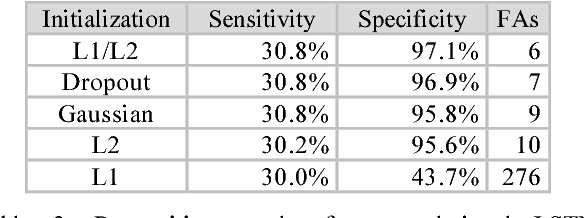

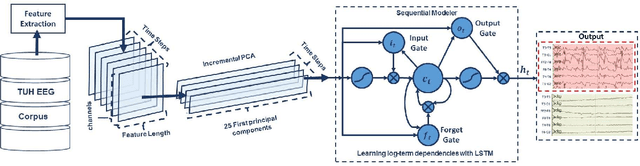

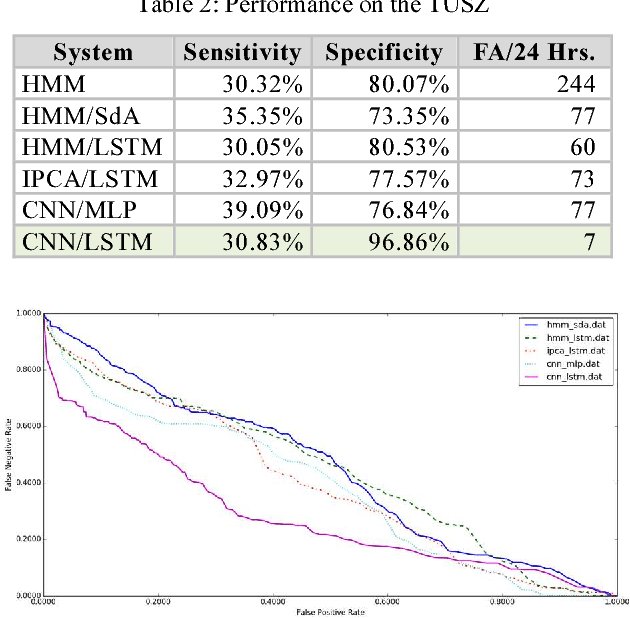

Abstract: Recurrent Neural Networks (RNNs) with sophisticated units that implement a gating mechanism have emerged as a powerful technique for modeling sequential signals such as speech or electroencephalography (EEG). The latter is the focus of this paper. A significant big data resource, known as the TUH EEG Corpus (TUEEG), has recently become available for EEG research, creating a unique opportunity to evaluate these recurrent units on the task of seizure detection. In this study, we compare two types of recurrent units: long short-term memory units (LSTM) and gated recurrent units (GRU). These are evaluated using a state-of-the-art hybrid architecture that integrates Convolutional Neural Networks (CNNs) with RNNs. We also investigate a variety of initialization methods and show that initialization is crucial since poorly initialized networks cannot be trained. Furthermore, we explore regularization of these convolutional gated recurrent networks to address the problem of overfitting. Our experiments revealed that convolutional LSTM networks can achieve significantly better performance than convolutional GRU networks. The convolutional LSTM architecture with proper initialization and regularization delivers 30% sensitivity at 6 false alarms per 24 hours.

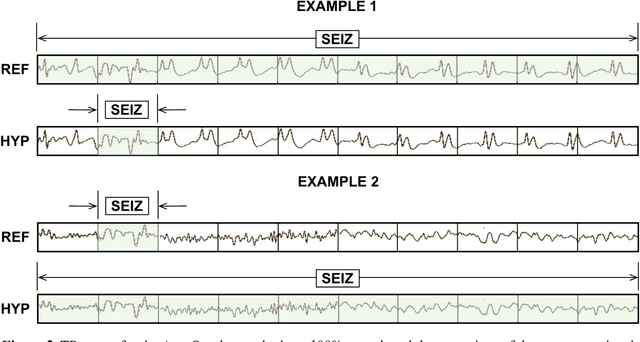

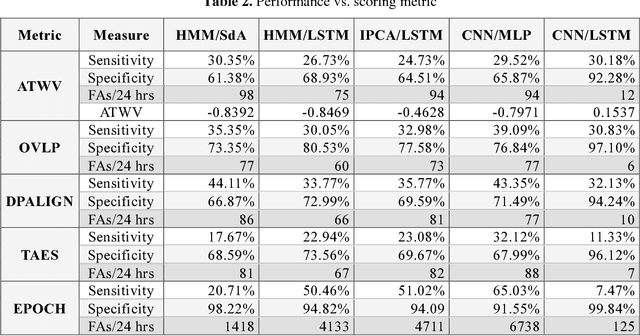

Objective evaluation metrics for automatic classification of EEG events

Dec 29, 2017

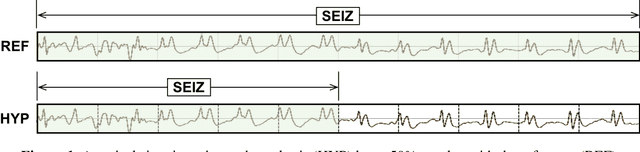

Abstract: The evaluation of machine learning algorithms in biomedical fields for applications involving sequential data lacks standardization. Common quantitative scalar evaluation metrics such as sensitivity and specificity can often be misleading depending on the requirements of the application. Evaluation metrics must ultimately reflect the needs of users yet be sufficiently sensitive to guide algorithm development. Feedback from critical care clinicians who use automated event detection software in clinical applications has been overwhelmingly emphatic that a low false alarm rate, typically measured in units of the number of errors per 24 hours, is the single most important criterion for user acceptance. Though using a single metric is not often as insightful as examining performance over a range of operating conditions, there is a need for a single scalar figure of merit. In this paper, we discuss the deficiencies of existing metrics for a seizure detection task and propose several new metrics that offer a more balanced view of performance. We demonstrate these metrics on a seizure detection task based on the TUH EEG Corpus. We show that two promising metrics are a measure based on a concept borrowed from the spoken term detection literature, Actual Term-Weighted Value, and a new metric, Time-Aligned Event Scoring (TAES), that accounts for the temporal alignment of the hypothesis to the reference annotation. We also demonstrate that state-of-the-art technology based on deep learning, though impressive in its performance, still needs significant improvement before it will meet very strict user acceptance guidelines.
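The abstracts on this page report an operating point as sensitivity paired with false alarms per 24 hours. As a rough illustration of that arithmetic only, the sketch below computes both from event counts. The function names and the example counts are hypothetical and are not taken from any of the papers. The sketch does not implement Actual Term-Weighted Value or TAES, whose scoring rules are not given in the abstract.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def sensitivity_percent(true_positives: int, false_negatives: int) -> float:
    """Percentage of reference seizure events that were detected."""
    total_events = true_positives + false_negatives
    if total_events == 0:
        raise ValueError("no reference events to score against")
    return 100.0 * true_positives / total_events


def false_alarms_per_24h(false_positives: int, duration_seconds: float) -> float:
    """False alarm count normalized to a 24-hour span of recorded data."""
    if duration_seconds <= 0:
        raise ValueError("duration must be positive")
    return false_positives * SECONDS_PER_DAY / duration_seconds


# Hypothetical counts: 30 of 100 seizures detected, 12 false alarms in 48 hours.
sens = sensitivity_percent(true_positives=30, false_negatives=70)
fa_rate = false_alarms_per_24h(false_positives=12, duration_seconds=48 * 3600)
print(f"{sens:.1f}% sensitivity at {fa_rate:.1f} false alarms per 24 hours")
```

Normalizing false alarms by recording duration rather than by the number of negative examples is what makes the figure comparable across datasets of different lengths.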

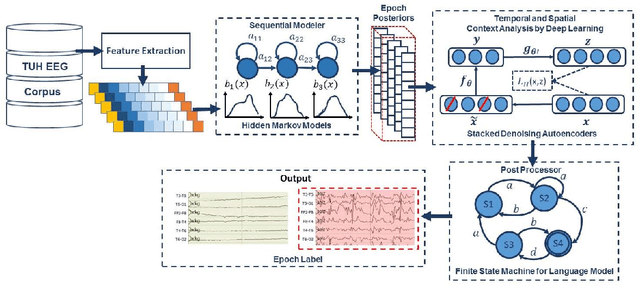

Deep Architectures for Automated Seizure Detection in Scalp EEGs

Dec 28, 2017

Abstract: Automated seizure detection using clinical electroencephalograms is a challenging machine learning problem because the multichannel signal often has an extremely low signal-to-noise ratio. Events of interest such as seizures are easily confused with signal artifacts (e.g., eye movements) or benign variants (e.g., slowing). Commercially available systems suffer from unacceptably high false alarm rates. Deep learning algorithms that employ high dimensional models have not previously been effective due to the lack of big data resources. In this paper, we use the TUH EEG Seizure Corpus to evaluate a variety of hybrid deep structures including Convolutional Neural Networks and Long Short-Term Memory Networks. We introduce a novel recurrent convolutional architecture that delivers 30% sensitivity at 7 false alarms per 24 hours. We have also evaluated our system on a held-out evaluation set based on the Duke University Seizure Corpus and demonstrate that performance trends are similar to the TUH EEG Seizure Corpus. This is a significant finding because the Duke corpus was collected with different instrumentation and at different hospitals. Our work shows that deep learning architectures that integrate spatial and temporal contexts are critical to achieving state-of-the-art performance and will enable a new generation of clinically acceptable technology.
