Vincent A. Stadelmann

Acoustical Features as Knee Health Biomarkers: A Critical Analysis

May 23, 2024

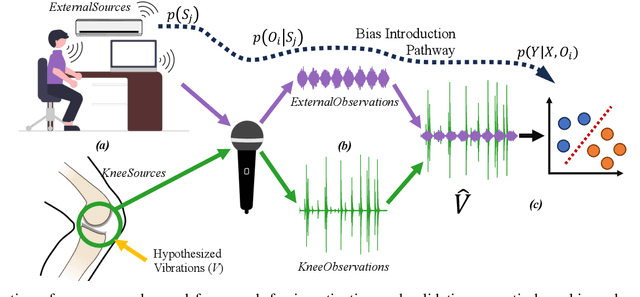

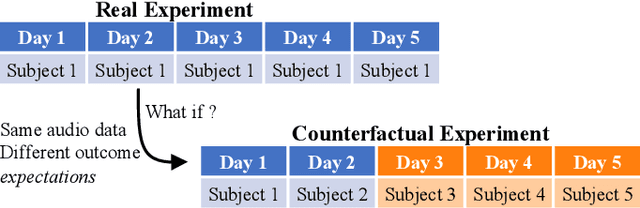

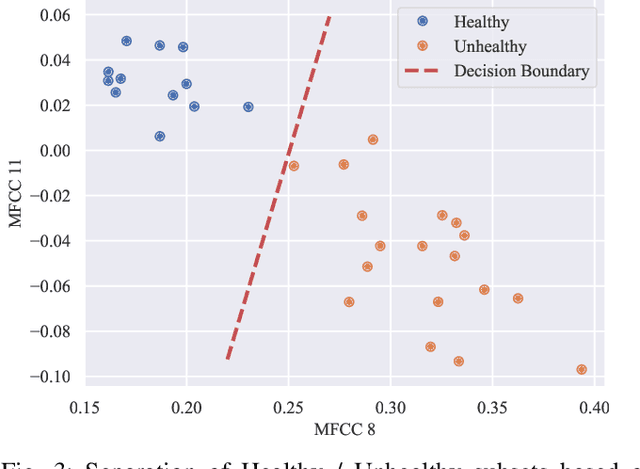

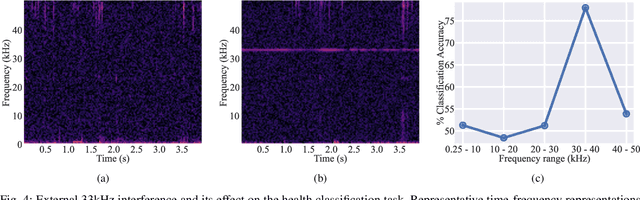

Abstract: Acoustical knee health assessment has long promised an alternative to clinically available medical imaging tools, but this modality has yet to be adopted in medical practice. The field is currently led by machine learning models that process acoustical features and have reported promising diagnostic performance. However, these methods overlook the intricate multi-source nature of audio signals and the underlying mechanisms at play. To address this critical gap, the present paper introduces a novel causal framework for validating knee acoustical features. We argue that current machine learning methodologies for acoustical knee diagnosis lack the required assurances and thus cannot be used to classify acoustic features as biomarkers. Our framework establishes a set of essential theoretical guarantees necessary to validate such a classification. We apply our methodology to three real-world experiments investigating the effects of researchers' expectations, the experimental protocol, and the wearable sensor employed. This investigation reveals latent issues such as shortcut learning and performance inflation. This study is the first independent reproduction study in the field of acoustical knee health evaluation. We conclude with actionable insights drawn from our findings, offering guidance for navigating these limitations in future research.
