Vida Esmaeili

COVID-19 Diagnosis: ULGFBP-ResNet51 approach on the CT and the Chest X-ray Images Classification

Dec 20, 2023

Abstract: The contagious, pandemic COVID-19 disease is currently considered a main health concern and has caused widespread panic. It severely affects the human respiratory tract and lungs, and thus poses a significant risk of premature death. Although early diagnosis can play a vital role in the recovery phase, radiography tests that rely on manual inspection are time-consuming, and the time available for manually inspecting numerous patients in hospitals is limited. Thus, automatic, highly efficient diagnosis from chest X-ray or CT images is urgently needed. To this end, we propose a novel method, named ULGFBP-ResNet51, to tackle COVID-19 diagnosis in images. The method combines Uniform Local Binary Pattern (ULBP), Gabor Filter (GF), and ResNet51. According to our results, this method offers superior performance in comparison with the other methods and attains maximum accuracy.
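As a rough illustration of the ULBP component named in the abstract, the following minimal sketch computes a standard 8-neighbour LBP code for a 3x3 patch and checks whether it is "uniform" (at most two circular 0/1 transitions). This is the textbook definition only, not the authors' pipeline; the function names `lbp_code` and `is_uniform` and the sample patch are assumptions made for this example.

```python
def lbp_code(patch):
    """Return the 8-bit LBP code of a 3x3 patch (a list of three rows).

    Hypothetical helper: neighbours are read clockwise from the top-left
    and thresholded against the centre pixel.
    """
    center = patch[1][1]
    coords = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    code = 0
    for r, c in coords:
        bit = 1 if patch[r][c] >= center else 0
        code = (code << 1) | bit
    return code


def is_uniform(code, bits=8):
    """A pattern is uniform if it has at most two circular bit transitions."""
    transitions = 0
    for i in range(bits):
        a = (code >> i) & 1
        b = (code >> ((i + 1) % bits)) & 1
        if a != b:
            transitions += 1
    return transitions <= 2


# Illustrative patch (made-up values): a bright top row over a dark region.
patch = [[5, 5, 5],
         [1, 3, 1],
         [1, 1, 1]]
code = lbp_code(patch)
print(code, is_uniform(code))
```

With 8 neighbours, 58 of the 256 possible codes are uniform, which is why uniform LBP histograms are much more compact than plain LBP histograms.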

Automatic Micro-Expression Apex Frame Spotting using Local Binary Pattern from Six Intersection Planes

Apr 05, 2021

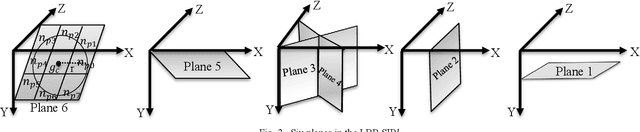

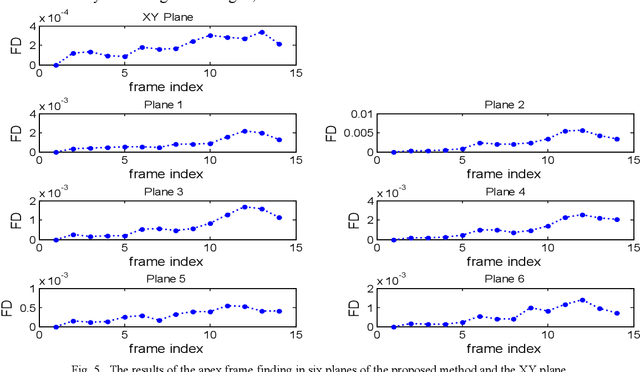

Abstract: Facial expressions are one of the most effective means of non-verbal communication, and in high-stakes situations they can appear as Micro-Expressions (MEs). MEs are involuntary, rapid, and subtle, and they can reveal real human intentions. However, their feature extraction is very challenging due to their low intensity and very short duration. Although the Local Binary Pattern from Three Orthogonal Planes (LBP-TOP) feature extractor is useful for ME analysis, it does not consider essential information. To address this problem, we propose a new feature extractor called Local Binary Pattern from Six Intersection Planes (LBP-SIPl). This method extracts LBP codes on six intersection planes and then combines them. Results show that the proposed method outperforms the relevant methods in automatic apex frame spotting on the CASME database. In simulations, the proposed method automatically spotted the apex frame in 43% of subjects in the CASME database, with a mean absolute error of 1.76.
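For readers unfamiliar with the reported metric, here is a minimal sketch of how a mean absolute error for apex frame spotting could be computed, assuming the error is the absolute difference between the spotted and the annotated apex frame indices. The function name and the frame indices below are hypothetical and are not taken from the CASME database or the paper.

```python
def mean_absolute_error(spotted, annotated):
    """Average absolute difference between spotted and annotated frame indices."""
    if len(spotted) != len(annotated) or not spotted:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(s - a) for s, a in zip(spotted, annotated))
    return total / len(spotted)


# Made-up frame indices for three video clips, for illustration only.
spotted = [12, 30, 7]
annotated = [10, 30, 8]
print(mean_absolute_error(spotted, annotated))
```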
