Victoria Blanes-Vidal

An Explainable Attention Model for Cervical Precancer Risk Classification using Colposcopic Images

Nov 14, 2024

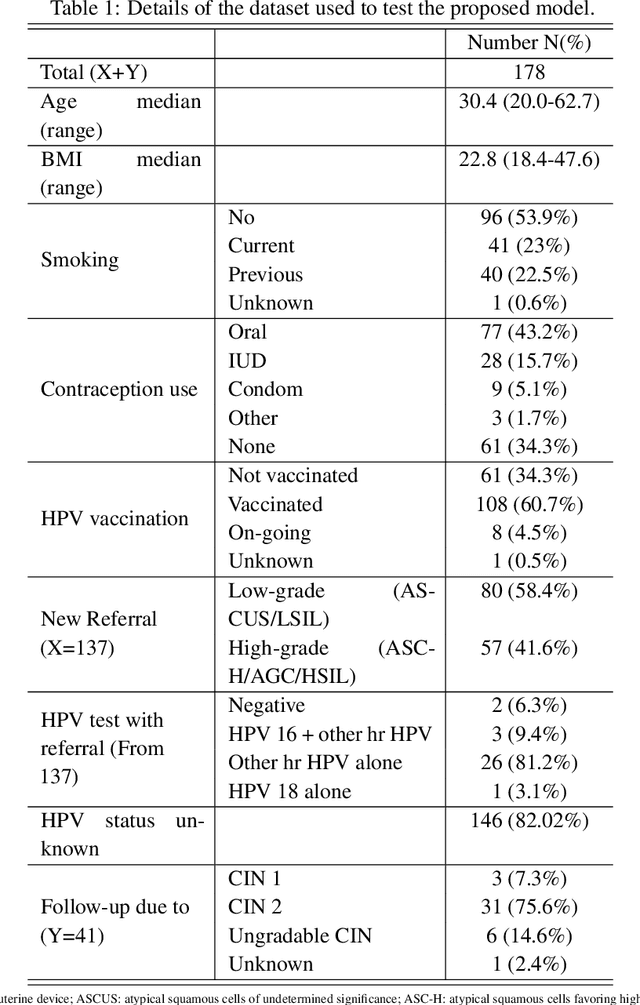

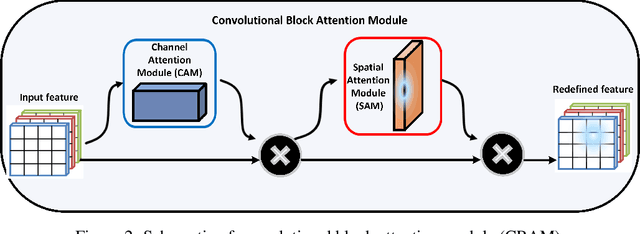

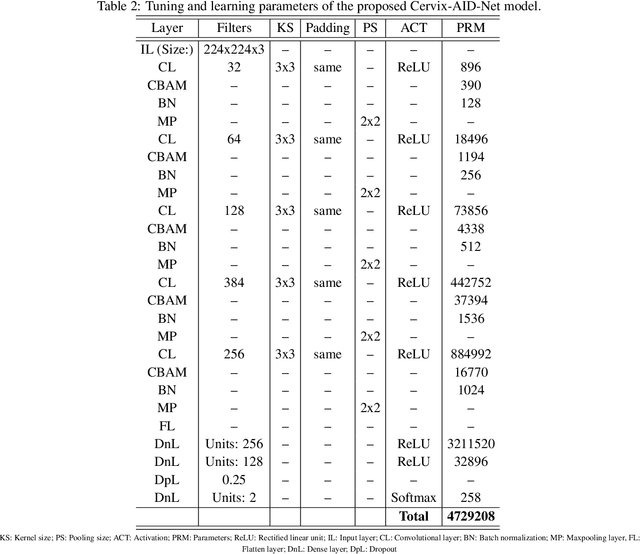

Abstract: Cervical cancer remains a major worldwide health issue, with early identification and risk assessment playing critical roles in effective preventive interventions. This paper presents the Cervix-AID-Net model for cervical precancer risk classification. The study designs and evaluates the proposed Cervix-AID-Net model using patients' colposcopy images. The model comprises a Convolutional Block Attention Module (CBAM) and convolutional layers that extract interpretable and representative features of colposcopic images to distinguish high-risk from low-risk cervical precancer. In addition, the proposed Cervix-AID-Net model integrates four explainability techniques, namely gradient class activation maps, Local Interpretable Model-agnostic Explanations, CartoonX, and pixel rate distortion explanation, based on output feature maps and input features. Evaluation using holdout and ten-fold cross-validation yielded classification accuracies of 99.33% and 99.81%, respectively. The analysis revealed that CartoonX provides meticulous explanations for the decisions of the Cervix-AID-Net model because it identifies the relevant piece-wise smooth part of the image. Tests with Gaussian noise and blur applied to the input show that performance remains unchanged up to Gaussian noise of 3% and blur of 10%, and degrades thereafter. A comparison of the proposed model's performance with other deep learning approaches highlights the Cervix-AID-Net model's potential as a supplemental tool for increasing the effectiveness of cervical precancer risk assessment. The proposed method, which incorporates the CBAM and explainable artificial intelligence, has the potential to influence cervical cancer prevention and early detection, improving patient outcomes and lowering the worldwide burden of this preventable disease.

Towards Full Integration of Artificial Intelligence in Colon Capsule Endoscopy's Pathway

Jun 14, 2024

Abstract: Despite the recent surge of interest in deploying colon capsule endoscopy (CCE) for early diagnosis of colorectal diseases, there remains a large gap between the current state of CCE in clinical practice and that of its counterpart, optical colonoscopy (OC). Our study aims to close this gap by focusing on the full integration of AI in CCE's pathway, where the image processing steps linked to the detection, localization and characterisation of important findings are carried out autonomously using various AI algorithms. We developed a recognition network that detected colorectal polyps with a sensitivity of 99.9%, a specificity of 99.4%, and a negative predictive value (NPV) of 99.8%. After a polyp was recognised within a sequence of images, only the images containing polyps were fed into two parallel, independent networks, one for characterisation and one for estimating the size of those important findings. The characterisation network reached a sensitivity of 82% and a specificity of 80% in classifying polyps into two groups, namely neoplastic vs. non-neoplastic. The size estimation network reached an accuracy of 88% in correctly segmenting the polyps. By automatically incorporating this crucial information into CCE's pathway, we moved a step closer towards the full integration of AI in CCE's routine clinical practice.
