Victor F. Monteiro

Beam Squinting Compensation: An NCR-Assisted Scenario

Feb 15, 2024

Abstract: Millimeter wave (mmWave) and sub-THz communications, foreseen for sixth-generation (6G) networks, suffer from high propagation losses that reduce network coverage. To address this issue, smart entities such as network-controlled repeaters (NCRs) have been considered as cost-efficient solutions for coverage extension. NCRs, which have been standardized in 3rd Generation Partnership Project (3GPP) Release 18, are radio frequency repeaters with beamforming capability, controlled by the network through side control information. Another challenge raised by the adoption of high frequency bands is the use of large bandwidths. Here, a common configuration is to divide a large frequency band into multiple smaller subbands. In this context, we consider a scenario with NCRs where signaling related to measurements used for radio resource management is transmitted in one subband centered at frequency $f_c$, while data transmission is performed at a different frequency $f_c + \Delta f$ based on the measurements taken at $f_c$. A challenge here is that the array radiation pattern can be frequency dependent and can therefore lead to beam misalignment, known as beam squinting. We characterize beam squinting in the context of subband operation and propose a solution in which the beam patterns employed at a given subband are adjusted (compensated) to mitigate beam squinting. Our results show that, without compensation, the perceived signal-to-interference-plus-noise ratio (SINR), and consequently the throughput, can decrease substantially due to beam squinting. With the proposed compensation method, however, the system supports NCR subband signaling operation with performance similar to that obtained when signaling and data are transmitted at the same frequency.
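
To make the squint mechanism concrete, the following is a minimal Python sketch, assuming an ideal $N$-element uniform linear array with frequency-flat phase shifters and half-wavelength spacing at $f_c$. The array size, carrier, offset, and angle are hypothetical values, and all function names are illustrative. Phases computed to point at $\theta_0$ at $f_c$ actually point, at $f = f_c + \Delta f$, toward $\arcsin\big(\tfrac{f_c}{f_c+\Delta f}\sin\theta_0\big)$. As a simple compensation, the sketch recomputes the phases for the data subband; this per-subband re-steering is an illustration and not necessarily the compensation method proposed in the paper.

```python
import cmath
import math

# Illustrative sketch (assumption): ideal ULA with frequency-flat phase shifters.
# This is not the paper's compensation method, only a per-subband re-steering example.

C = 299_792_458.0  # speed of light in m/s


def steering(n_elems, spacing_m, freq_hz, theta_rad):
    """ULA response a(theta, f): element n sees phase 2*pi*f*d*n*sin(theta)/c."""
    k = 2 * math.pi * freq_hz * spacing_m * math.sin(theta_rad) / C
    return [cmath.exp(1j * k * n) for n in range(n_elems)]


def beam_weights(n_elems, spacing_m, design_freq_hz, theta0_rad):
    """Unit-norm phase-shifter weights that point at theta0 when used at design_freq_hz."""
    a = steering(n_elems, spacing_m, design_freq_hz, theta0_rad)
    return [x / math.sqrt(n_elems) for x in a]


def gain_db(weights, spacing_m, freq_hz, theta_rad):
    """Array gain |w^H a(theta, f)|^2 in dB; equals 10*log10(N) for a perfectly aligned beam."""
    a = steering(len(weights), spacing_m, freq_hz, theta_rad)
    s = sum(w.conjugate() * x for w, x in zip(weights, a))
    return 10 * math.log10(max(abs(s) ** 2, 1e-30))  # floor avoids log10(0) at an exact null


def squinted_angle(theta0_rad, fc_hz, f_hz):
    """Direction the beam actually points at f_hz when its phases were computed at fc_hz."""
    return math.asin(fc_hz / f_hz * math.sin(theta0_rad))


if __name__ == "__main__":
    # Hypothetical numbers: 64 elements, f_c = 28 GHz, data subband offset by 500 MHz, user at 60 deg.
    n, fc, df = 64, 28e9, 0.5e9
    d = C / (2 * fc)  # half-wavelength spacing at f_c
    theta0 = math.radians(60)
    w_fc = beam_weights(n, d, fc, theta0)          # beam chosen from measurements at f_c
    w_comp = beam_weights(n, d, fc + df, theta0)   # same direction, phases recomputed for f_c + df
    print("gain at f_c:               %.2f dB" % gain_db(w_fc, d, fc, theta0))
    print("gain at f_c+df, no comp.:  %.2f dB" % gain_db(w_fc, d, fc + df, theta0))
    print("gain at f_c+df, with comp: %.2f dB" % gain_db(w_comp, d, fc + df, theta0))
    print("squinted direction: %.2f deg" % math.degrees(squinted_angle(theta0, fc, fc + df)))
```

With $\Delta f > 0$, the uncompensated beam tilts toward broadside, so a user at $\theta_0$ sees less than the full $10\log_{10}N$ dB array gain, while the recomputed weights recover it. Practical arrays might instead rely on true-time-delay units or a per-subband codebook, which this sketch does not model.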

Deep Reinforcement Learning for QoS-Constrained Resource Allocation in Multiservice Networks

Mar 03, 2020

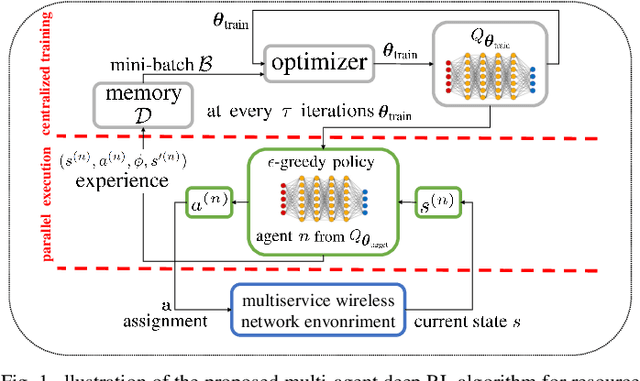

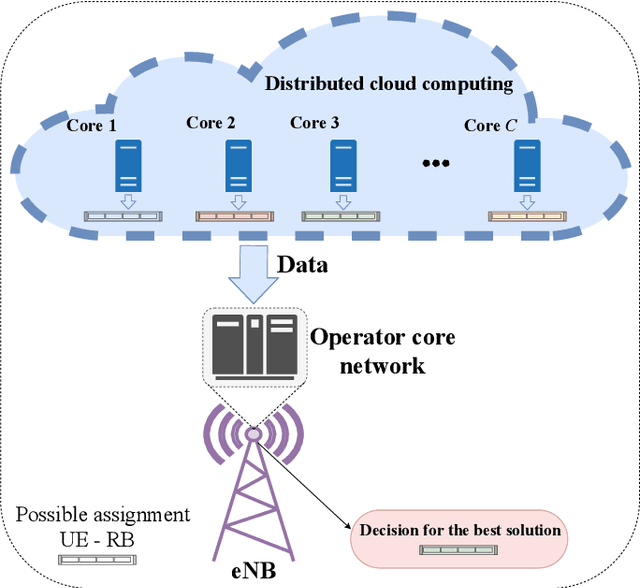

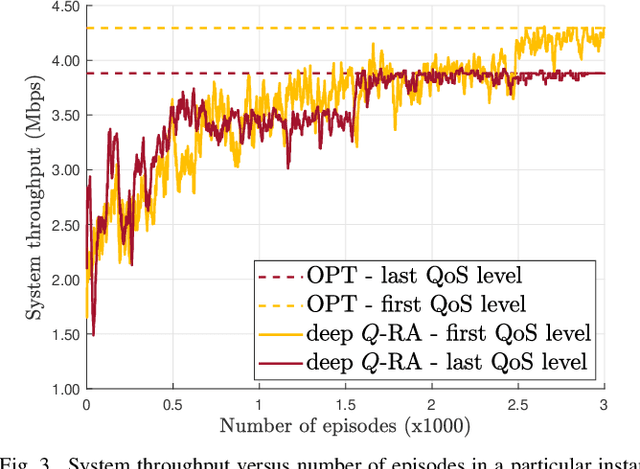

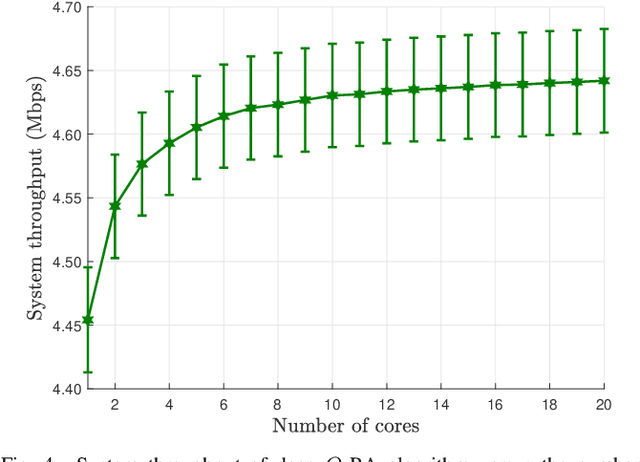

Abstract: In this article, we study a Radio Resource Allocation (RRA) problem formulated as a non-convex optimization problem whose main aim is to maximize spectral efficiency subject to satisfaction guarantees in multiservice wireless systems. This problem has been investigated in the literature, and efficient heuristics have been proposed for it. However, to assess the performance of Machine Learning (ML) algorithms when solving optimization problems in the context of RRA, we revisit that problem and propose a solution based on a Reinforcement Learning (RL) framework. Specifically, we develop a distributed optimization method based on multi-agent deep RL, in which each agent makes decisions and learns a policy by interacting with its local environment until convergence. Thus, this article focuses on an application of RL, and our main contribution is a new deep-RL-based approach that jointly handles RRA, satisfaction guarantees, and Quality of Service (QoS) constraints in multiservice cellular networks. Finally, through computational simulations, we compare state-of-the-art solutions from the literature with our proposal and show that the latter achieves near-optimal performance in terms of throughput and outage rate.
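
The abstract gives no algorithmic detail, so the following is only a minimal sketch of the multi-agent idea under loudly stated assumptions: it swaps deep RL for tabular, stateless independent Q-learning, and the two-agent, two-resource-block environment, the rates in `RATES`, the `MIN_RATE` QoS target, and the `PENALTY` reward are all hypothetical, not the article's formulation. Each agent picks a resource block (RB), is rewarded with its spectral efficiency when it meets the QoS target, and is penalized otherwise.

```python
import random

# Hypothetical toy scenario (assumption, not the article's model):
# 2 agents share 2 resource blocks (RBs). RATES[i][k] is agent i's spectral
# efficiency (bit/s/Hz) on RB k when it is alone on that RB; collisions give 0.
RATES = [[3.0, 1.0],
         [1.0, 2.0]]
MIN_RATE = 1.5   # hypothetical QoS target: an agent is "satisfied" at or above this rate
PENALTY = -1.0   # hypothetical reward when the QoS target is missed


def rewards(actions):
    """Per-agent reward for a joint RB choice: achieved rate if satisfied, else PENALTY."""
    out = []
    for i, rb in enumerate(actions):
        collided = sum(1 for a in actions if a == rb) > 1
        rate = 0.0 if collided else RATES[i][rb]
        out.append(rate if rate >= MIN_RATE else PENALTY)
    return out


def train(steps=5000, alpha=0.1, eps=0.1, seed=0):
    """Independent stateless Q-learning: each agent updates only from its own reward."""
    rng = random.Random(seed)
    n_agents, n_rbs = len(RATES), len(RATES[0])
    q = [[0.0] * n_rbs for _ in range(n_agents)]
    for _ in range(steps):
        actions = []
        for i in range(n_agents):
            if rng.random() < eps:
                actions.append(rng.randrange(n_rbs))  # explore: random RB
            else:
                actions.append(max(range(n_rbs), key=lambda k: q[i][k]))  # exploit
        for i, r in enumerate(rewards(actions)):
            q[i][actions[i]] += alpha * (r - q[i][actions[i]])
    return q


def greedy_policy(q):
    """RB each agent would pick without exploration (ties go to the lowest index)."""
    return [max(range(len(row)), key=lambda k: row[k]) for row in q]


if __name__ == "__main__":
    q = train()
    print("greedy RB per agent:", greedy_policy(q))
```

Because each agent updates only its own table from its own reward, the learners are decoupled in the spirit of the distributed approach described above. In this toy instance, the joint greedy choice should settle on agent 0 using RB 0 and agent 1 using RB 1, the only assignment in which both meet `MIN_RATE`; a deep-RL version would replace the tables with neural networks and add per-agent state.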
