Vasudev Awatramani

Neural Collapse: A Review on Modelling Principles and Generalization

Jun 08, 2022

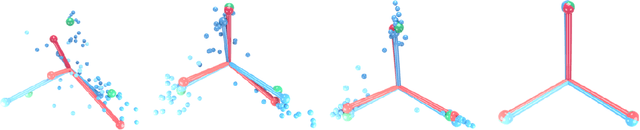

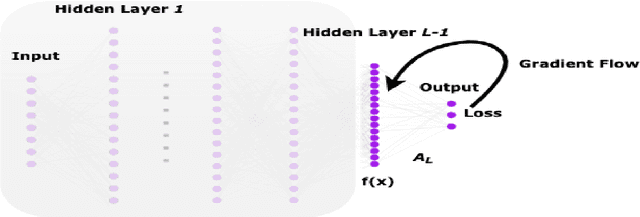

Abstract: With the recent observation of the "Neural Collapse (NC)" phenomenon by Papyan et al., various efforts have been made to model it and analyse its implications. Neural collapse describes how, in deep classifier networks, the final-hidden-layer features of the training data tend to collapse to their respective class feature means, thus simplifying the behaviour of the last-layer classifier to that of a nearest-class-center decision rule. In this work, we analyse the principles which aid in modelling such a phenomenon from the ground up and show how they can build a common understanding of the recently proposed models that try to explain NC. We hope that our analysis presents a multifaceted perspective on modelling NC and aids in forming connections with the generalization capabilities of neural networks. Finally, we conclude by discussing avenues for further research and proposing potential research problems.
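To make the two quantities named in the abstract concrete, the following is a minimal illustrative sketch, not code from the paper. It assumes synthetic 2-D points as stand-ins for last-layer features, and the helper names (`class_means`, `within_class_variability`, `nearest_class_center`) are hypothetical. It measures how tightly features cluster around their class means and applies a nearest-class-center decision rule.

```python
import math
import random


def class_means(features, labels):
    """Return a dict mapping each class label to its mean feature vector."""
    sums, counts = {}, {}
    for x, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [s / counts[y] for s in acc] for y, acc in sums.items()}


def within_class_variability(features, labels, means):
    """Average squared Euclidean distance of each feature to its own class mean."""
    total = sum(math.dist(x, means[y]) ** 2 for x, y in zip(features, labels))
    return total / len(features)


def nearest_class_center(x, means):
    """Predict the class whose mean is closest to x in Euclidean distance."""
    return min(means, key=lambda y: math.dist(x, means[y]))


# Assumption: toy "collapsed" features, i.e. tiny noise around three class centers.
random.seed(0)
centers = {0: (5.0, 0.0), 1: (-5.0, 0.0), 2: (0.0, 5.0)}
labels = [y for y in centers for _ in range(20)]
features = [[c + random.gauss(0.0, 0.01) for c in centers[y]] for y in labels]

means = class_means(features, labels)
variability = within_class_variability(features, labels, means)
preds = [nearest_class_center(x, means) for x in features]
```

In this toy setting the within-class variability is close to zero and the nearest-class-center rule recovers every training label, which is the behaviour the abstract attributes to the last layer of a collapsed network. Real last-layer features would come from a trained classifier rather than from sampled noise.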
