Valerii Baianov

Non-contrastive approaches to similarity learning: positive examples are all you need

Sep 28, 2022

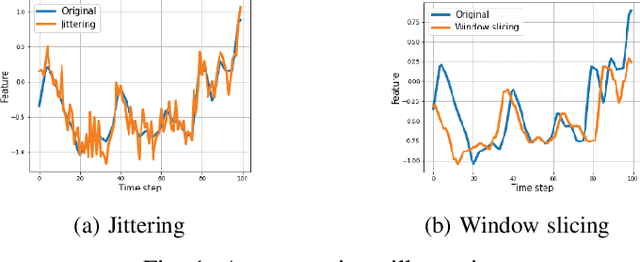

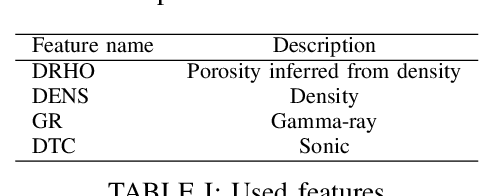

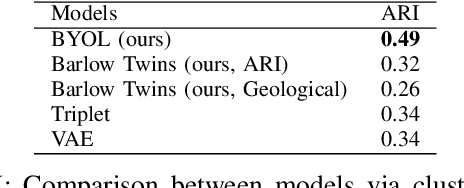

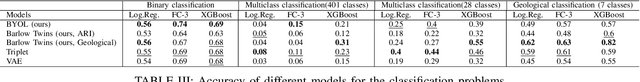

Abstract: The similarity learning problem in the oil and gas industry aims to construct a model that estimates the similarity between interval measurements in well-logging data. Previous attempts are mostly based on empirical rules, so our goal is to automate this process and avoid expensive, time-consuming expert labelling. One approach to similarity learning is self-supervised learning (SSL). In contrast to the supervised paradigm, SSL requires few or no labels, so such models can be trained even when labelled data is scarce or absent. Most current SSL approaches are either contrastive or non-contrastive. Contrastive methods depend on both positive and negative samples, and because either can be labelled incorrectly, they do not scale well with the number of objects. Non-contrastive methods do not rely on negative samples and are actively used in computer vision. We introduce non-contrastive SSL for time series data. In particular, we build on the BYOL and Barlow Twins methods, which avoid negative pairs and focus only on matching positive pairs. The crucial part of these methods is the augmentation strategy: many time series augmentations exist, and their effect on performance can be either positive or negative. Together, our augmentation strategies and our adaptation of BYOL and Barlow Twins achieve higher quality (ARI $= 0.49$) than other self-supervised methods (ARI $= 0.34$), which demonstrates the usefulness of the proposed non-contrastive self-supervised approach for the interval similarity problem and for time series representation learning in general.
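To make the idea of matching only positive pairs concrete, here is a minimal NumPy sketch of the standard Barlow Twins objective. It is not the authors' implementation. The function name `barlow_twins_loss`, the weight `lam`, and the random arrays standing in for encoder outputs are illustrative assumptions. The loss takes the embeddings of two augmented views of the same batch, pushes the diagonal of their cross-correlation matrix towards 1, and pushes the off-diagonal entries towards 0, so no negative pairs are needed.

```python
import numpy as np


def barlow_twins_loss(z1, z2, lam=5e-3, eps=1e-8):
    """Barlow Twins-style loss for two (batch, dim) embedding matrices.

    z1 and z2 are assumed to be embeddings of two augmented views of the
    same batch of intervals. lam is an illustrative off-diagonal weight.
    """
    n = z1.shape[0]
    # Standardise every embedding dimension across the batch.
    z1 = (z1 - z1.mean(axis=0)) / (z1.std(axis=0) + eps)
    z2 = (z2 - z2.mean(axis=0)) / (z2.std(axis=0) + eps)
    # Cross-correlation matrix between the two views, shape (dim, dim).
    c = z1.T @ z2 / n
    diag = np.diag(c)
    # Invariance term: matching dimensions should correlate perfectly.
    on_diag = np.sum((1.0 - diag) ** 2)
    # Redundancy term: different dimensions should be decorrelated.
    off_diag = np.sum(c ** 2) - np.sum(diag ** 2)
    return on_diag + lam * off_diag


# Hypothetical usage: random arrays stand in for encoder outputs.
rng = np.random.default_rng(0)
z = rng.normal(size=(256, 8))
loss_same_views = barlow_twins_loss(z, z)
loss_unrelated = barlow_twins_loss(z, rng.normal(size=(256, 8)))
```

In this toy setup, identical views give a loss close to zero, while unrelated embeddings give a much larger value. That gap is the signal a non-contrastive encoder would be trained on.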
