V. Kavyasree

Two-stream Fusion Model for Dynamic Hand Gesture Recognition using 3D-CNN and 2D-CNN Optical Flow guided Motion Template

Jul 17, 2020

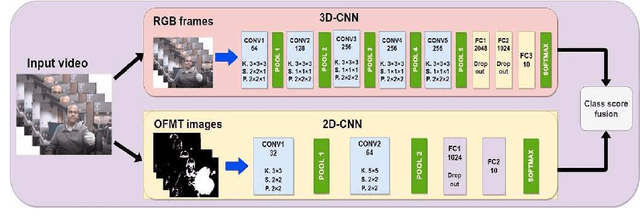

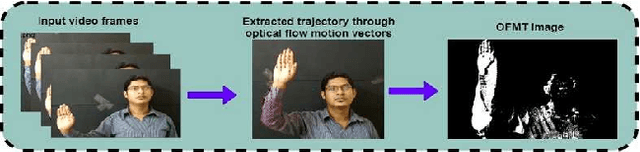

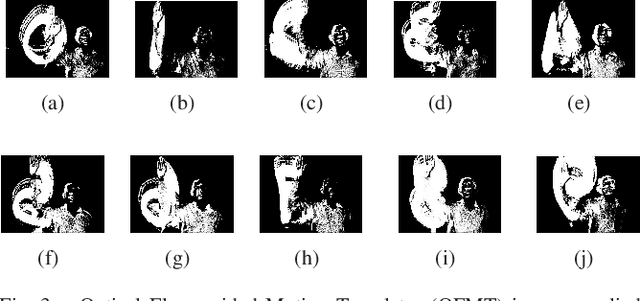

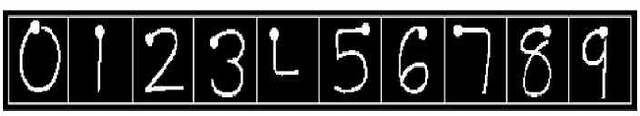

Abstract: Hand gestures are a useful tool for many applications in the human-computer interaction community, with uses in areas such as sign language recognition and robotic surgery. In hand gesture recognition, properly detecting and tracking the moving hand is challenging because the shape and size of the hand vary. The objective here is to track the movement of the hand irrespective of its shape, size, and color. For this purpose, a motion template guided by optical flow (OFMT) is proposed. OFMT is a compact representation of the motion information of a gesture encoded into a single image. In the experiments, different datasets are used, one with a bare hand and open palm and one with a folded palm wearing a green glove, and in both cases we could generate the OFMT images with equal precision. Recently, deep network-based techniques have shown impressive improvements over conventional hand-crafted feature-based techniques. Moreover, the literature shows that using different streams with informative input data helps to increase recognition accuracy. This work proposes a two-stream fusion model for hand gesture recognition and a compact yet efficient motion template based on optical flow. Specifically, the network consists of two streams: a 3D convolutional neural network (C3D) that takes gesture videos as input and a 2D-CNN that takes OFMT images as input. C3D has shown its efficiency in capturing the spatio-temporal information of a video, whereas OFMT helps to eliminate irrelevant gestures by providing additional motion information. Although each stream can work independently, they are combined with a fusion scheme to boost the recognition results. We show the efficiency of the proposed two-stream network on two databases.
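The abstract does not say which fusion scheme combines the two streams. As an illustration only, the sketch below assumes one common choice, score-level (late) fusion: each stream's class scores are turned into probabilities and averaged with a weight. The function names `softmax` and `fuse_scores`, the parameter `weight_c3d`, and the example scores are all hypothetical and are not taken from the paper.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_scores(c3d_logits, ofmt_logits, weight_c3d=0.5):
    """Weighted average of the two streams' class probabilities.

    Assumption: late fusion at the score level. The paper's actual
    fusion scheme may differ.
    """
    if len(c3d_logits) != len(ofmt_logits):
        raise ValueError("both streams must score the same set of classes")
    if not 0.0 <= weight_c3d <= 1.0:
        raise ValueError("weight_c3d must lie in [0, 1]")

    p_video = softmax(c3d_logits)   # stream 1: 3D-CNN on the gesture video
    p_ofmt = softmax(ofmt_logits)   # stream 2: 2D-CNN on the OFMT image

    fused = [
        weight_c3d * a + (1.0 - weight_c3d) * b
        for a, b in zip(p_video, p_ofmt)
    ]
    predicted = max(range(len(fused)), key=fused.__getitem__)
    return predicted, fused


# Hypothetical scores for three gesture classes.
predicted, fused = fuse_scores([2.0, 1.0, 0.1], [0.1, 3.0, 0.2])
print(predicted, [round(p, 3) for p in fused])
```

With `weight_c3d=1.0` or `weight_c3d=0.0` the function reduces to a single stream, which mirrors the abstract's remark that each stream can also work independently.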
