Tsugumasa Yutani

Wavetable Synthesis Using CVAE for Timbre Control Based on Semantic Label

Oct 24, 2024

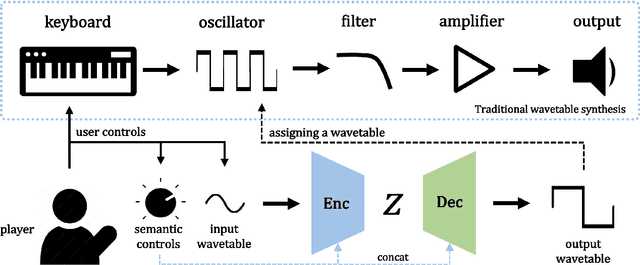

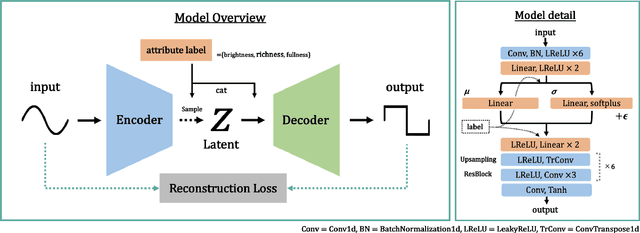

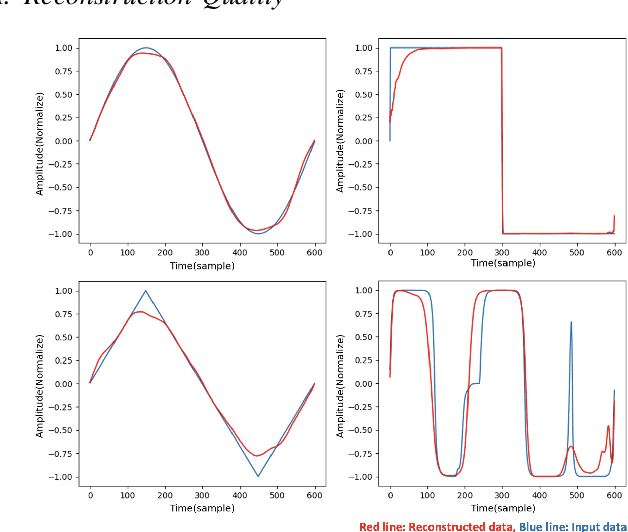

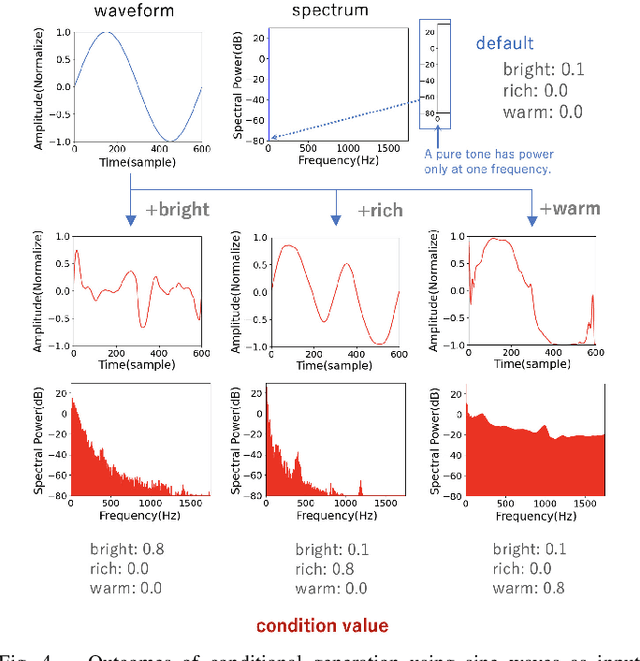

Abstract: Synthesizers are essential in modern music production. However, their timbre parameters are complex, are often described in technical terms, and require expertise to use. This research introduces an intuitive method of timbre control for wavetable synthesis based on semantic labels. Using a conditional variational autoencoder (CVAE), users select a wavetable and shape its timbre with labels such as bright, warm, and rich. The CVAE model, built from convolutional and upsampling layers, captures the nuances of the wavetable and runs in real time because it operates directly in the time domain. Experiments demonstrate that the approach enables effective real-time control of wavetable timbre through semantic inputs, working toward intuitive, data-driven timbre control.
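To make the conditioning idea concrete, the following is a minimal sketch of a label-conditioned decoder that alternates upsampling and 1D convolution in the time domain. It is not the paper's architecture: the layer sizes, the 512-sample wavetable length, the circular padding, the function names (`init_decoder`, `decode`), and the random untrained weights are all assumptions made for illustration. Only the label names and the general use of convolutional and upsampling layers come from the abstract.

```python
import numpy as np

LABELS = ("bright", "warm", "rich")  # semantic labels named in the abstract

def upsample(x, factor=2):
    """Nearest-neighbour upsampling along time; x has shape (channels, length)."""
    return np.repeat(x, factor, axis=1)

def conv1d_same(x, w, b):
    """1D convolution with circular padding (assumption: a wavetable is one period).
    x: (c_in, length), w: (c_out, c_in, kernel) with odd kernel, b: (c_out,)."""
    c_out, _, k = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad)), mode="wrap")
    out = np.empty((c_out, x.shape[1]))
    for i in range(x.shape[1]):
        out[:, i] = np.tensordot(w, xp[:, i:i + k], axes=([1, 2], [0, 1])) + b
    return out

def init_decoder(latent_dim=8, channels=4, start_len=64, kernel=3, n_up=3, seed=0):
    """Random, untrained weights; every size here is a hypothetical choice."""
    rng = np.random.default_rng(seed)
    in_dim = latent_dim + len(LABELS)  # latent vector concatenated with labels
    return {
        "shape": (channels, start_len),
        "dense": (rng.normal(0, 0.3, (channels * start_len, in_dim)),
                  np.zeros(channels * start_len)),
        "convs": [(rng.normal(0, 0.3, (channels, channels, kernel)), np.zeros(channels))
                  for _ in range(n_up)],
        "out": (rng.normal(0, 0.3, (1, channels, kernel)), np.zeros(1)),
    }

def decode(params, z, labels):
    """Map a latent vector and semantic label values to one wavetable cycle."""
    cond = np.concatenate([z, labels])           # the conditioning step of a CVAE
    w, b = params["dense"]
    h = np.tanh(w @ cond + b).reshape(params["shape"])
    for w, b in params["convs"]:                 # upsample, then convolve
        h = np.tanh(conv1d_same(upsample(h), w, b))
    w, b = params["out"]
    return np.tanh(conv1d_same(h, w, b))[0]      # samples in [-1, 1]

params = init_decoder()
z = np.zeros(8)                                  # stand-in for an encoded wavetable
bright = decode(params, z, np.array([1.0, 0.0, 0.0]))
warm = decode(params, z, np.array([0.0, 1.0, 0.0]))
print(bright.shape, float(np.abs(bright - warm).max()))
```

In a trained model, the latent vector would come from encoding the user's chosen wavetable, and changing only the label values would steer the decoded waveform's timbre. Here the weights are random, so the output only demonstrates the data flow.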
