Travis S. Humble

Quantum Hybrid Support Vector Machines for Stress Detection in Older Adults

Jan 08, 2025

Abstract:Stress can increase the possibility of cognitive impairment and decrease the quality of life in older adults. Smart healthcare can deploy quantum machine learning to enable preventive and diagnostic support. This work introduces a unique technique to address stress detection as an anomaly detection problem that uses quantum hybrid support vector machines. With the help of a wearable smartwatch, we mapped baseline sensor reading as normal data and stressed sensor reading as anomaly data using cortisol concentration as the ground truth. We have used quantum computing techniques to explore the complex feature spaces with kernel-based preprocessing. We illustrate the usefulness of our method by doing experimental validation on 40 older adults with the help of the TSST protocol. Our findings highlight that using a limited number of features, quantum machine learning provides improved accuracy compared to classical methods. We also observed that the recall value using quantum machine learning is higher compared to the classical method. The higher recall value illustrates the potential of quantum machine learning in healthcare, as missing anomalies could result in delayed diagnostics or treatment.
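The abstract's emphasis on recall can be made concrete with a small sketch. Recall is the fraction of true anomalies (here, stressed readings) that the classifier actually flags, so a missed anomaly lowers it directly. The labels below are hypothetical illustration data, not results from the paper, and `recall` is a helper written for this example.

```python
def recall(y_true, y_pred, positive=1):
    """Recall = TP / (TP + FN) for the chosen positive (anomaly) label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp + fn == 0:
        raise ValueError("y_true contains no positive samples")
    return tp / (tp + fn)


# Hypothetical labels: 1 = stressed (anomaly), 0 = baseline (normal).
y_true = [0, 0, 1, 1, 1, 0, 1, 0]
y_pred = [0, 0, 1, 0, 1, 0, 1, 1]

# Three of the four stressed readings are caught; one is missed.
stress_recall = recall(y_true, y_pred)
```

The false positive at the last position does not affect recall; it would show up in precision instead.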

Adaptive mitigation of time-varying quantum noise

Aug 16, 2023

Abstract:Current quantum computers suffer from non-stationary noise channels with high error rates, which undermines their reliability and reproducibility. We propose a Bayesian inference-based adaptive algorithm that can learn and mitigate quantum noise in response to changing channel conditions. Our study emphasizes the need for dynamic inference of critical channel parameters to improve program accuracy. We use the Dirichlet distribution to model the stochasticity of the Pauli channel. This allows us to perform Bayesian inference, which can improve the performance of probabilistic error cancellation (PEC) under time-varying noise. Our work demonstrates the importance of characterizing and mitigating temporal variations in quantum noise, which is crucial for developing more accurate and reliable quantum technologies. Our results show that Bayesian PEC can outperform non-adaptive approaches by a factor of 4.5x when measured using Hellinger distance from the ideal distribution.
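Two ingredients named in the abstract can be sketched in a few lines: a Dirichlet model over Pauli-channel probabilities and the Hellinger distance between distributions. The sketch below assumes the standard conjugate update (Dirichlet prior plus multinomial counts), which may differ from the authors' actual inference procedure; the prior and the observed counts are hypothetical.

```python
import math


def update_dirichlet(alpha, counts):
    """Conjugate update: Dirichlet prior + multinomial counts -> Dirichlet posterior."""
    return [a + c for a, c in zip(alpha, counts)]


def posterior_mean(alpha):
    """Mean of a Dirichlet distribution, used as a point estimate of the channel."""
    total = sum(alpha)
    return [a / total for a in alpha]


def hellinger(p, q):
    """Hellinger distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    s = sum((math.sqrt(pi) - math.sqrt(qi)) ** 2 for pi, qi in zip(p, q))
    return math.sqrt(s / 2.0)


# Probabilities ordered as (I, X, Y, Z); uniform prior is an assumption.
prior = [1.0, 1.0, 1.0, 1.0]
counts = [90, 4, 3, 3]  # hypothetical counts of inferred Pauli errors

posterior = update_dirichlet(prior, counts)
estimate = posterior_mean(posterior)
```

Repeating the update as new counts arrive is one simple way to track a channel whose parameters drift over time.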

Quantum Annealing for Automated Feature Selection in Stress Detection

Jun 09, 2021

Abstract:We present a novel methodology for automated feature subset selection from a pool of physiological signals using Quantum Annealing (QA). As a case study, we investigate the effectiveness of QA-based feature selection techniques in selecting the optimal feature subset for stress detection. Features are extracted from four signal sources: foot EDA, hand EDA, ECG, and respiration. The proposed method embeds the feature variables extracted from the physiological signals in a binary quadratic model. The bias of each feature variable is calculated using the Pearson correlation coefficient between the feature variable and the target variable. The weight of the edge connecting two feature variables in the binary quadratic model is calculated using the Pearson correlation coefficient between those two feature variables. Subsequently, D-Wave's clique sampler is used to sample cliques from the binary quadratic model. The underlying solution is then re-sampled to obtain multiple good solutions, and the clique with the lowest energy is returned as the optimal solution. The proposed method is compared with commonly used feature selection techniques for stress detection. Results indicate that QA-based feature subset selection performed on par with classical techniques. However, under data uncertainty conditions such as limited training data, the performance of quantum annealing for selecting optimum features remained unaffected, whereas a significant decrease in performance is observed with classical feature selection techniques. Preliminary results show the promise of quantum annealing in optimizing the training phase of a machine learning classifier, especially under data uncertainty conditions.
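The binary quadratic model described in the abstract can be sketched without quantum hardware. The abstract does not state sign conventions, so this sketch assumes a common choice: linear biases of `-|r(feature, target)|` to reward relevance and quadratic weights of `+|r(feature_i, feature_j)|` to penalize redundancy. An exhaustive search stands in for D-Wave's clique sampler, and the data and function names are hypothetical.

```python
import itertools
import math


def pearson(x, y):
    """Pearson correlation coefficient (assumes neither input is constant)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def build_bqm(features, target):
    """Assumed convention: relevance lowers energy, redundancy raises it."""
    n = len(features)
    linear = [-abs(pearson(f, target)) for f in features]
    quadratic = {
        (i, j): abs(pearson(features[i], features[j]))
        for i in range(n)
        for j in range(i + 1, n)
    }
    return linear, quadratic


def energy(bits, linear, quadratic):
    """Energy of a 0/1 assignment; bit i = 1 means feature i is selected."""
    e = sum(h * b for h, b in zip(linear, bits))
    e += sum(w * bits[i] * bits[j] for (i, j), w in quadratic.items())
    return e


def lowest_energy_subset(linear, quadratic):
    """Brute-force stand-in for an annealer; only feasible for a few features."""
    candidates = itertools.product((0, 1), repeat=len(linear))
    return min(candidates, key=lambda bits: energy(bits, linear, quadratic))


# Hypothetical data: f0 tracks the target, f1 duplicates f0, f2 is mostly noise.
target = [0, 0, 1, 1, 0, 1]
f0 = [0.1, 0.2, 0.9, 0.8, 0.3, 0.7]
f1 = list(f0)
f2 = [0.5, 0.4, 0.5, 0.6, 0.5, 0.4]

linear, quadratic = build_bqm([f0, f1, f2], target)
best = lowest_energy_subset(linear, quadratic)
```

With this toy data the minimum-energy assignment keeps one of the two duplicated features and drops the noisy one, which is the redundancy-avoiding behavior the quadratic terms are meant to encourage.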

A Review of Machine Learning Classification Using Quantum Annealing for Real-world Applications

Jun 05, 2021

Abstract:Optimizing the training of a machine learning pipeline helps in reducing training costs and improving model performance. One such optimizing strategy is quantum annealing, which is an emerging computing paradigm that has shown potential in optimizing the training of a machine learning model. The implementation of a physical quantum annealer has been realized by D-Wave Systems and is available to the research community for experiments. Recent experiments on a variety of machine learning applications using quantum annealing have shown interesting results in cases where the performance of classical machine learning techniques is constrained by limited training data and high-dimensional features. This article explores the application of D-Wave's quantum annealer for optimizing machine learning pipelines for real-world classification problems. We review the application domains on which a physical quantum annealer has been used to train machine learning classifiers. We discuss and analyze the experiments performed on the D-Wave quantum annealer for applications such as image recognition, remote sensing imagery, computational biology, and particle physics. We discuss the possible advantages and the problems for which quantum annealing is likely to be advantageous over classical computation.

* 13 Pages

Training a quantum annealing based restricted Boltzmann machine on cybersecurity data

Nov 24, 2020

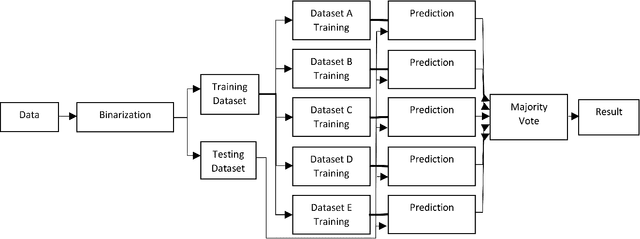

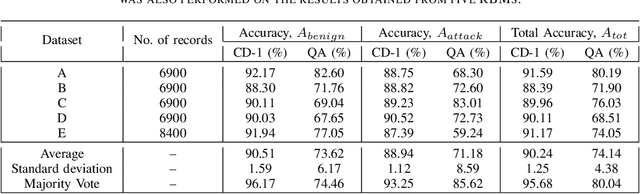

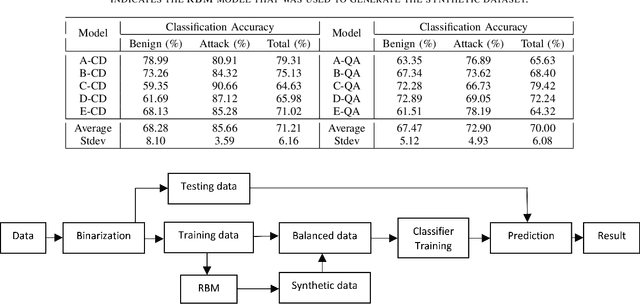

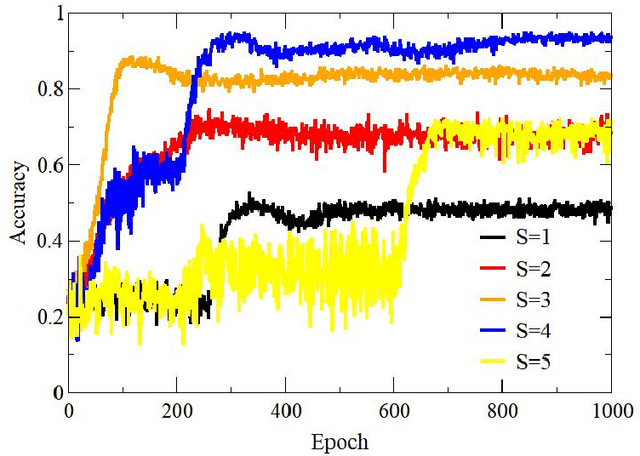

Abstract:We present a real-world application that uses a quantum computer. Specifically, we trained a Restricted Boltzmann Machine (RBM) using quantum annealing (QA) to develop an intrusion detection system. RBMs were trained on the ISCX data, which is a benchmark dataset for cybersecurity. For comparison, RBMs were also trained using contrastive divergence (CD), which is a classical method. D-Wave's 2000Q quantum annealer has been used to implement QA. Our analysis of the ISCX data shows that the dataset is imbalanced, and we present two different schemes to balance the training dataset before feeding it to a classifier. The first scheme is based on the oversampling of attack instances. The imbalanced training dataset was divided into five sub-datasets that were trained separately. A majority vote was then taken to get the final result. Our results show the majority vote increased the classification accuracy from 90.24% to 95.68% in the case of CD. For the case of QA, the classification accuracy increased from 74.14% to 80.04%. In the second scheme, an RBM was used to generate synthetic data to balance the training dataset. The RBMs trained on synthetic data generated from a CD-trained RBM performed comparably to the RBMs trained on synthetic data generated from a QA-trained RBM. Balanced training data was used to evaluate several classifiers. Among the classifiers investigated, K-Nearest Neighbor (KNN) and Neural Network (NN) performed better than other classifiers. They both showed an accuracy of 93%. Our results show a proof of concept that a QA-based RBM can be trained on a binary dataset with 64-bit records. The illustrative example suggests the possibility of migrating many practical classification problems to QA-based techniques.
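The majority-vote step in the first balancing scheme can be illustrated with a short sketch. It assumes each of the five separately trained models emits one label per test record and that the final label is the most frequent one; the prediction lists are hypothetical, and the abstract does not specify how the authors implemented the vote.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine per-model label lists into one label per record by majority."""
    if len({len(p) for p in predictions}) != 1:
        raise ValueError("all models must predict the same number of records")
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions)]


# Hypothetical outputs of five models on four records (1 = attack, 0 = normal).
model_predictions = [
    [1, 0, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 1, 1],
    [1, 1, 1, 0],
    [1, 0, 1, 0],
]

final_labels = majority_vote(model_predictions)
```

Using an odd number of voters on a binary task, as with the five sub-datasets here, avoids ties.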

Training and Classification using a Restricted Boltzmann Machine on the D-Wave 2000Q

May 07, 2020

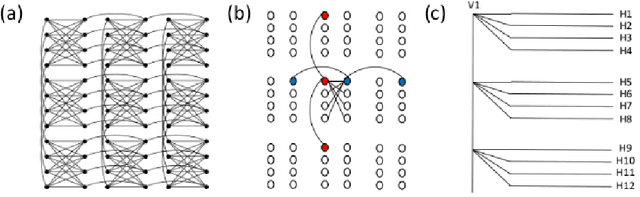

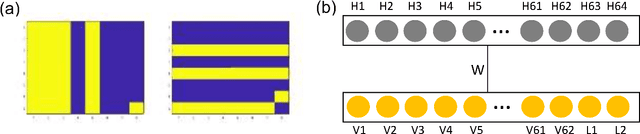

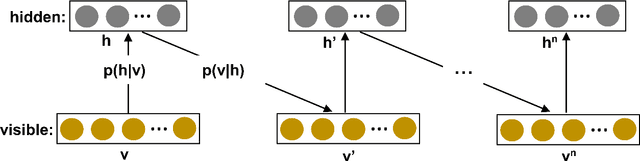

Abstract:The Restricted Boltzmann Machine (RBM) is an energy-based, undirected graphical model. It is commonly used for unsupervised and supervised machine learning. Typically, an RBM is trained using contrastive divergence (CD). However, training with CD is slow and does not estimate the exact gradient of the log-likelihood cost function. In this work, the model expectation needed for gradient-based RBM learning has been calculated using a quantum annealer (D-Wave 2000Q), which is much faster than the Markov chain Monte Carlo (MCMC) sampling used in CD. Training and classification results are compared with CD. The classification accuracy results indicate similar performance of both methods. Image reconstruction as well as log-likelihood calculations are used to compare the performance of quantum and classical algorithms for RBM training. It is shown that the samples obtained from the quantum annealer can be used to train an RBM on a 64-bit 'bars and stripes' data set with classification performance similar to an RBM trained with CD. Though training based on CD showed improved learning performance, training using a quantum annealer eliminates the computationally expensive MCMC steps of CD.
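For context, the "model expectation" mentioned in the abstract is the second term of the standard RBM log-likelihood gradient. The notation below (visible units $v_i$, hidden units $h_j$, weights $w_{ij}$, biases $a_i$ and $b_j$) is the usual textbook convention and is assumed here rather than taken from the paper.

```latex
E(v, h) = -\sum_i a_i v_i - \sum_j b_j h_j - \sum_{i,j} v_i w_{ij} h_j

\frac{\partial \log p(v)}{\partial w_{ij}}
  = \langle v_i h_j \rangle_{\text{data}} - \langle v_i h_j \rangle_{\text{model}}
```

CD approximates $\langle v_i h_j \rangle_{\text{model}}$ with a few Gibbs sampling steps, whereas the approach described in the abstract estimates it from samples returned by the annealer.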

Community detection with spiking neural networks for neuromorphic hardware

Nov 20, 2017

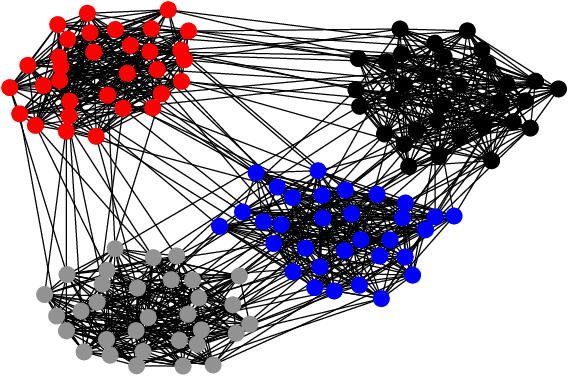

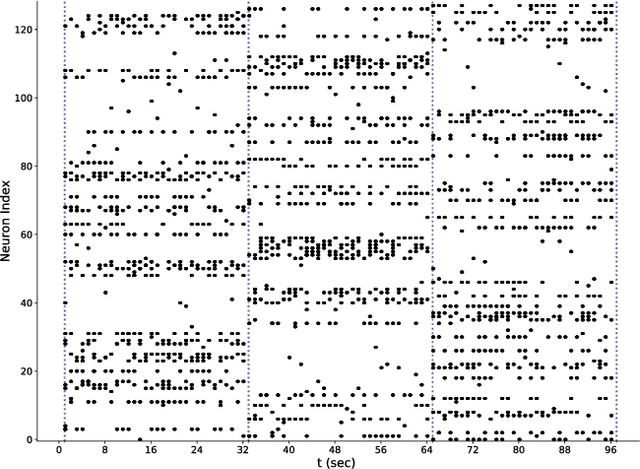

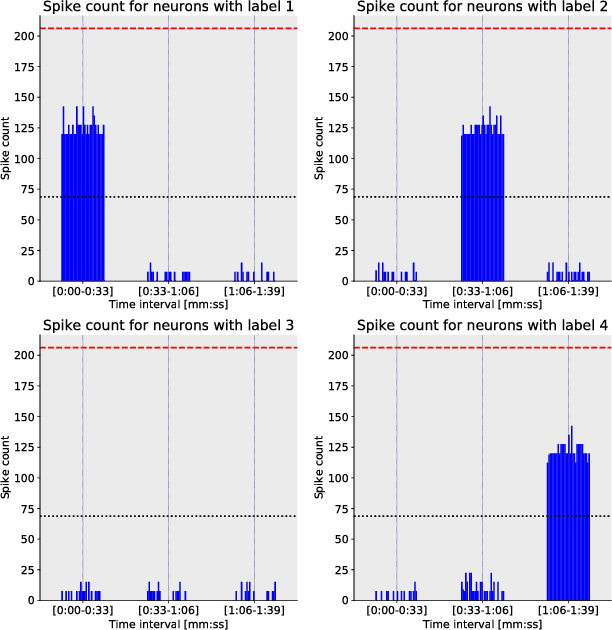

Abstract:We present results related to the performance of an algorithm for community detection which incorporates event-driven computation. We define a mapping which takes a graph G to a system of spiking neurons. Using a fully connected spiking neuron system, with both inhibitory and excitatory synaptic connections, the firing patterns of neurons within the same community can be distinguished from firing patterns of neurons in different communities. On a random graph with 128 vertices and known community structure we show that by using binary decoding and a Hamming-distance based metric, individual communities can be identified from spike train similarities. Using bipolar decoding and finite rate thresholding, we verify that inhibitory connections prevent the spread of spiking patterns.
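The Hamming-distance comparison of binary-decoded spike trains can be sketched as follows. The spike trains are hypothetical, and the sketch shows only the distance computation, not the authors' graph-to-neuron mapping or decoding scheme.

```python
def hamming(a, b):
    """Number of time bins in which two equal-length binary spike trains differ."""
    if len(a) != len(b):
        raise ValueError("spike trains must have the same length")
    return sum(x != y for x, y in zip(a, b))


# Hypothetical binary-decoded spike trains (1 = spike in that time bin).
trains = {
    "n0": [1, 0, 1, 1, 0, 0, 1, 0],
    "n1": [1, 0, 1, 0, 0, 0, 1, 0],
    "n2": [0, 1, 0, 0, 1, 1, 0, 1],
}

d01 = hamming(trains["n0"], trains["n1"])
d02 = hamming(trains["n0"], trains["n2"])
d12 = hamming(trains["n1"], trains["n2"])
```

In this toy example `n0` and `n1` fire almost identically while `n2` fires in the complementary bins, so a small-distance threshold would place `n0` and `n1` in one community and `n2` in another.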
