Toshihiro Tanizawa

Left-Right Symmetry Breaking in CLIP-style Vision-Language Models Trained on Synthetic Spatial-Relation Data

Jan 19, 2026

Abstract: Spatial understanding remains a key challenge in vision-language models. Yet it is still unclear whether such understanding is truly acquired, and if so, through what mechanisms. We present a controllable 1D image-text testbed to probe how left-right relational understanding emerges in Transformer-based vision and text encoders trained with a CLIP-style contrastive objective. We train lightweight Transformer-based vision and text encoders end-to-end on paired descriptions of one- and two-object scenes and evaluate generalization to unseen object pairs while systematically varying label and layout diversity. We find that contrastive training learns left-right relations and that label diversity, more than layout diversity, is the primary driver of generalization in this setting. To gain a mechanistic understanding, we perform an attention decomposition and show that interactions between positional and token embeddings induce a horizontal attention gradient that breaks left-right symmetry in the encoders; ablating this contribution substantially reduces left-right discrimination. Our results provide mechanistic insight into when and how CLIP-style models acquire relational competence.

Ego-Vehicle Action Recognition based on Semi-Supervised Contrastive Learning

Mar 02, 2023

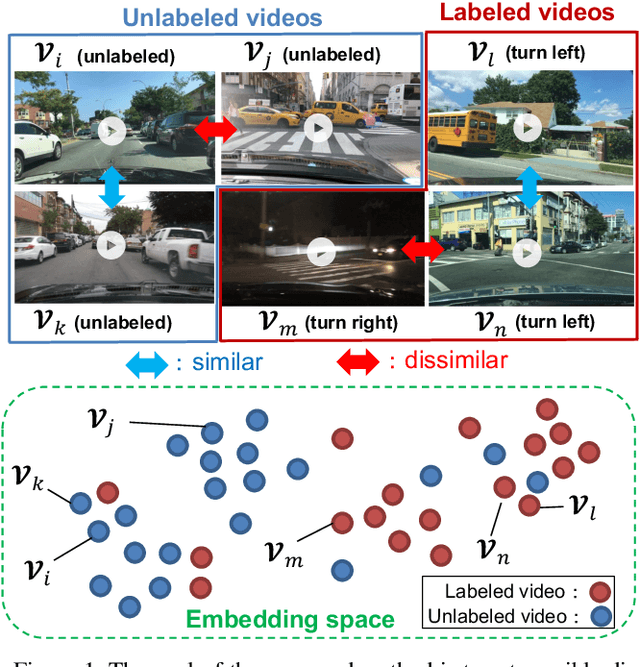

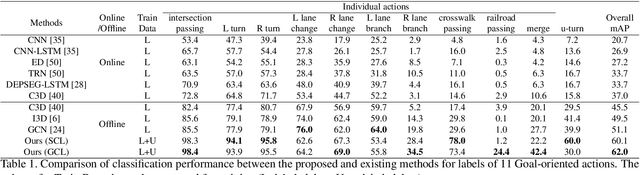

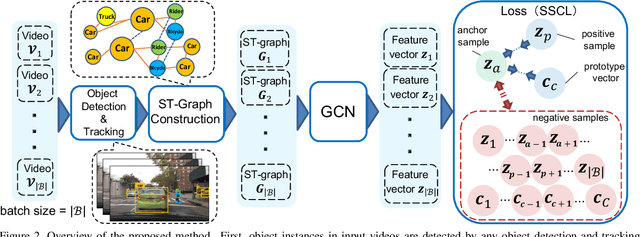

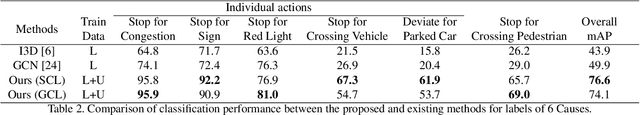

Abstract: In recent years, many automobiles have been equipped with cameras, which have accumulated an enormous amount of video footage of driving scenes. Autonomous driving demands the highest level of safety, so even extremely rare driving scenes have to be collected as training data to improve recognition accuracy for those specific scenes. However, it is prohibitively costly to find a handful of specific scenes in an enormous volume of video. In this article, we show that proper video-to-video distances can be defined by focusing on ego-vehicle actions. It is well known that existing methods based on supervised learning cannot handle videos that do not fall into predefined classes, though they work well in defining video-to-video distances in the embedding space between labeled videos. To tackle this problem, we propose a method based on semi-supervised contrastive learning. We consider two related but distinct forms of contrastive learning: standard graph contrastive learning and our proposed SOIA-based contrastive learning. We observe that the latter approach provides more sensible video-to-video distances between unlabeled videos. We then quantify the effectiveness of our method by evaluating classification performance on ego-vehicle action recognition using the HDD dataset. The results show that our method, which includes unlabeled data in training, significantly outperforms existing methods that use only labeled data.

* 19 pages, 17 figures
