Tom Schmiedlechner

Lipizzaner: A System That Scales Robust Generative Adversarial Network Training

Nov 30, 2018

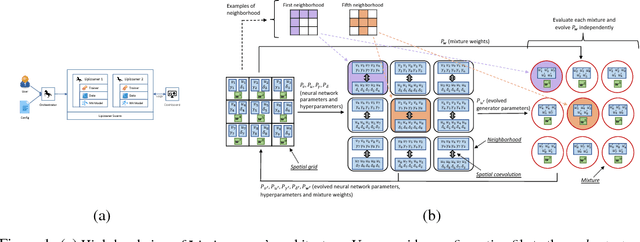

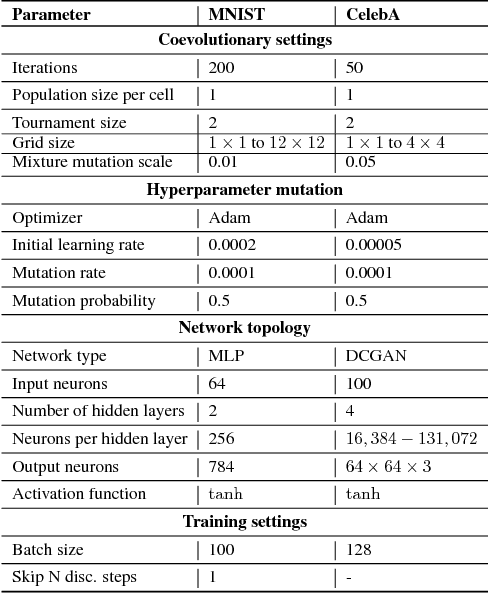

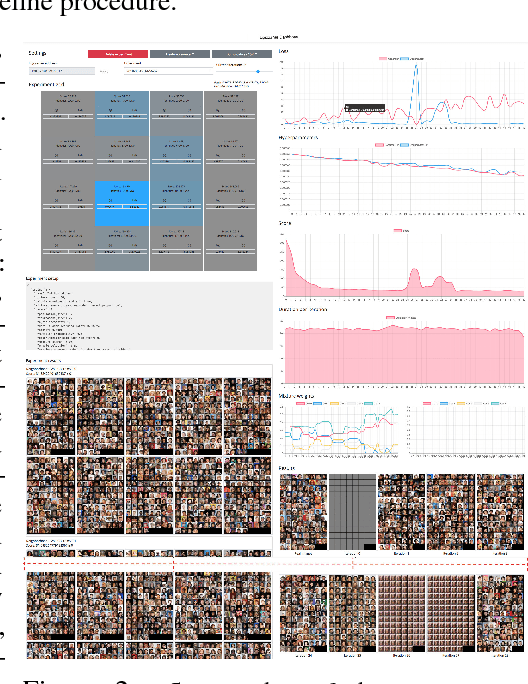

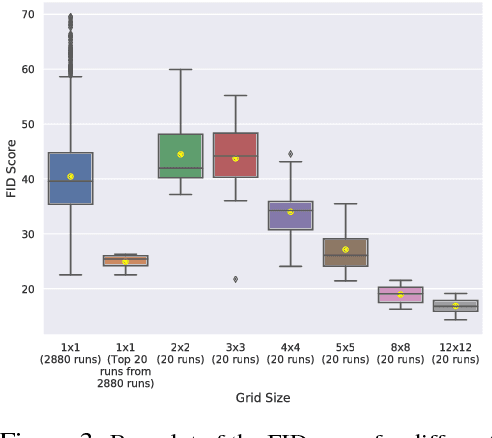

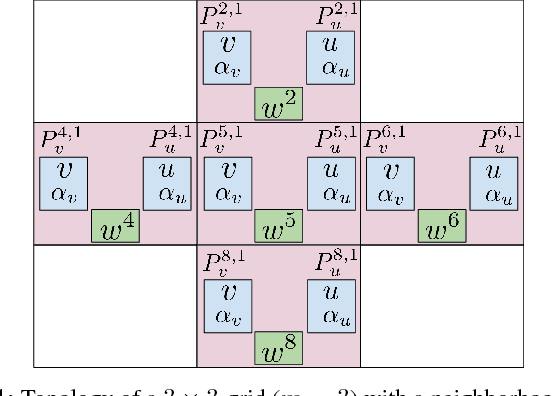

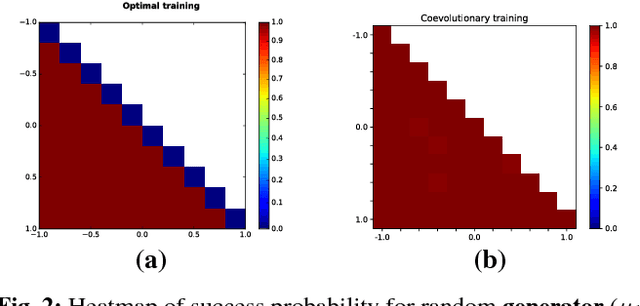

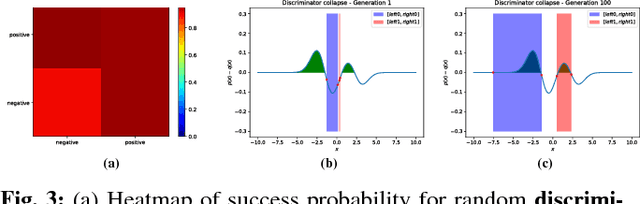

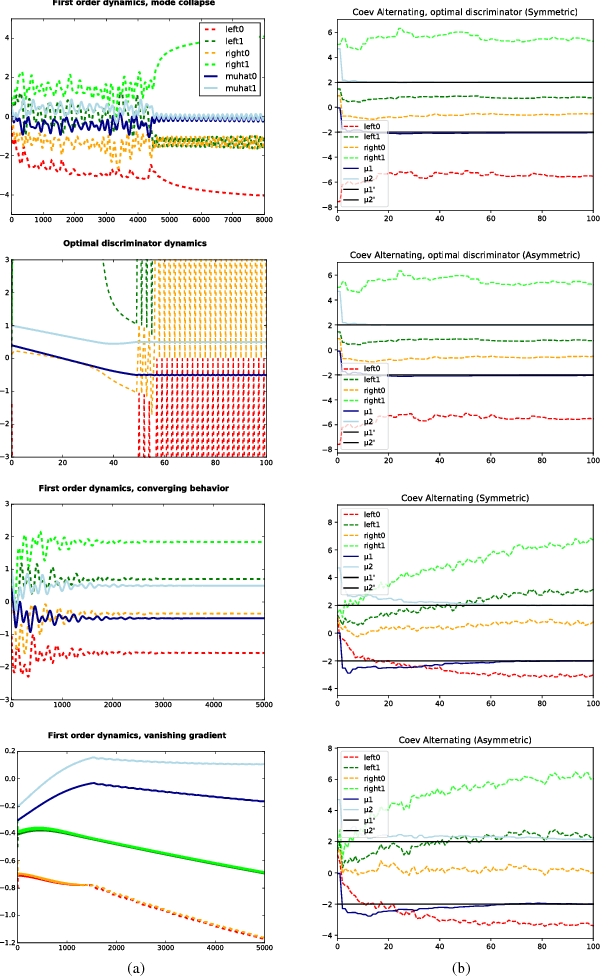

Abstract: GANs are difficult to train due to convergence pathologies such as mode and discriminator collapse. We introduce Lipizzaner, an open-source software system that allows machine learning engineers to train GANs in a distributed and robust way. Lipizzaner distributes a competitive coevolutionary algorithm which, by virtue of dual, adapting generator and discriminator populations, is robust to collapses. The algorithm is well suited to efficient distribution because it uses a spatial grid abstraction. Training is local to each cell, and strong intermediate training results are exchanged among overlapping neighborhoods, allowing high-performing solutions to propagate and improve with more rounds of training. Experiments on common image datasets overcome critical collapses. Communication overhead scales linearly when increasing the number of compute instances, and we observe that increasing scale leads to improved model performance.
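The spatial grid and overlapping neighborhoods described in the abstract can be illustrated with a small sketch. It is not taken from the Lipizzaner code base. It assumes a wrap-around (toroidal) grid and a five-cell neighborhood (the cell plus its four direct neighbors), neither of which the abstract specifies, and the names `neighborhood`, `shared_cells`, and `messages_per_round` are hypothetical. Under those assumptions, each cell sends to a fixed number of neighbors, so the message count grows linearly with the number of cells.

```python
from typing import List, Tuple

Cell = Tuple[int, int]


def neighborhood(cell: Cell, rows: int, cols: int) -> List[Cell]:
    """Return the cell plus its four direct neighbors (assumed shape).

    Indices wrap around the grid edges, an assumption made so that
    every cell has a neighborhood of the same size.
    """
    r, c = cell
    return [
        (r, c),                # the cell itself
        ((r - 1) % rows, c),   # north
        ((r + 1) % rows, c),   # south
        (r, (c - 1) % cols),   # west
        (r, (c + 1) % cols),   # east
    ]


def shared_cells(a: Cell, b: Cell, rows: int, cols: int) -> List[Cell]:
    """Cells that belong to both neighborhoods, i.e. where they overlap."""
    return sorted(set(neighborhood(a, rows, cols)) & set(neighborhood(b, rows, cols)))


def messages_per_round(rows: int, cols: int) -> int:
    """Messages sent if every cell shares its result with four neighbors."""
    return 4 * rows * cols


if __name__ == "__main__":
    print(neighborhood((0, 0), 3, 3))
    print(shared_cells((0, 0), (0, 1), 3, 3))
    print(messages_per_round(3, 3))
```

Because adjacent neighborhoods share cells, a strong generator or discriminator adopted in one cell can reach cells outside its own neighborhood over successive rounds, which is the propagation effect the abstract refers to.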

Towards Distributed Coevolutionary GANs

Aug 31, 2018

Abstract: Generative Adversarial Networks (GANs) have become one of the dominant methods for deep generative modeling. Despite their demonstrated success on multiple vision tasks, GANs are difficult to train and much research has been dedicated towards understanding and improving their gradient-based learning dynamics. Here, we investigate the use of coevolution, a class of black-box (gradient-free) co-optimization techniques and a powerful tool in evolutionary computing, as a supplement to gradient-based GAN training techniques. Experiments on a simple model that exhibits several of the GAN gradient-based dynamics (e.g., mode collapse, oscillatory behavior, and vanishing gradients) show that coevolution is a promising framework for escaping degenerate GAN training behaviors.
