Todor Kolev

Efficient Task-Oriented Dialogue Systems with Response Selection as an Auxiliary Task

Aug 15, 2022

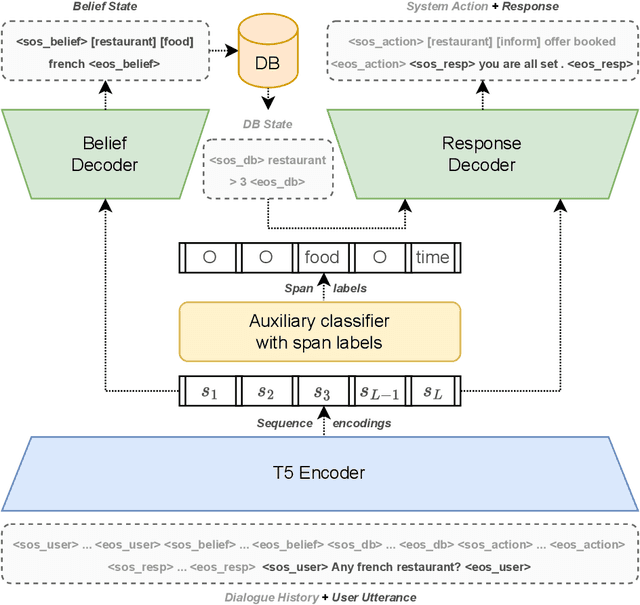

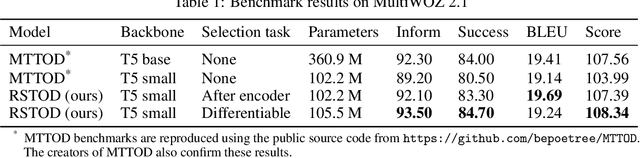

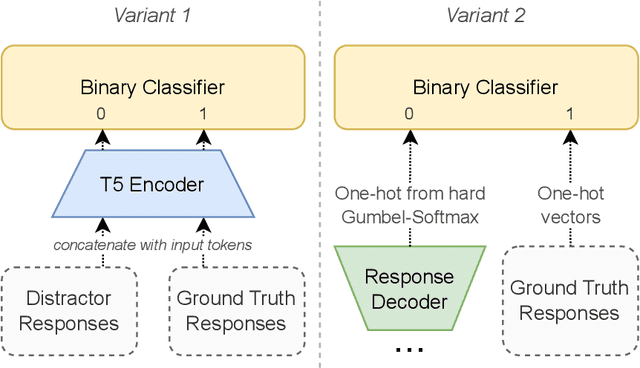

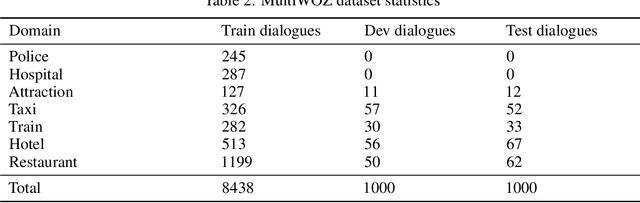

Abstract: The adoption of pre-trained language models in task-oriented dialogue systems has resulted in significant enhancements of their text generation abilities. However, these architectures are slow to use because of the large number of trainable parameters and can sometimes fail to generate diverse responses. To address these limitations, we propose two models with auxiliary tasks for response selection: (1) distinguishing distractors from ground truth responses and (2) distinguishing synthetic responses from ground truth labels. They achieve state-of-the-art results on the MultiWOZ 2.1 dataset with combined scores of 107.5 and 108.3 and outperform a baseline with three times more parameters. We publish reproducible code and checkpoints and discuss the effects of applying auxiliary tasks to T5-based architectures.
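As a rough illustration of how a response-selection auxiliary task can be attached to a generation objective, the sketch below adds a weighted binary cross-entropy term to a generation loss. It is not taken from the paper: the function names, the `aux_weight` parameter, and the simple weighted sum are all assumptions made for illustration.

```python
import math


def bce_with_logit(logit: float, label: int) -> float:
    """Numerically stable binary cross-entropy for a single logit.

    label 1 = ground truth response, label 0 = distractor (assumed encoding).
    """
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


def combined_loss(generation_loss, selection_logits, selection_labels, aux_weight=1.0):
    """Generation loss plus a weighted, averaged response-selection loss.

    aux_weight is a hypothetical hyperparameter, not a value from the paper.
    """
    aux = sum(
        bce_with_logit(z, y) for z, y in zip(selection_logits, selection_labels)
    ) / len(selection_logits)
    return generation_loss + aux_weight * aux


# One ground truth response and one distractor, both scored with logit 0.
loss = combined_loss(2.0, [0.0, 0.0], [1, 0])
```

With both logits at zero the selection head is maximally uncertain, so the auxiliary term equals ln 2 and the total is the generation loss plus that constant.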

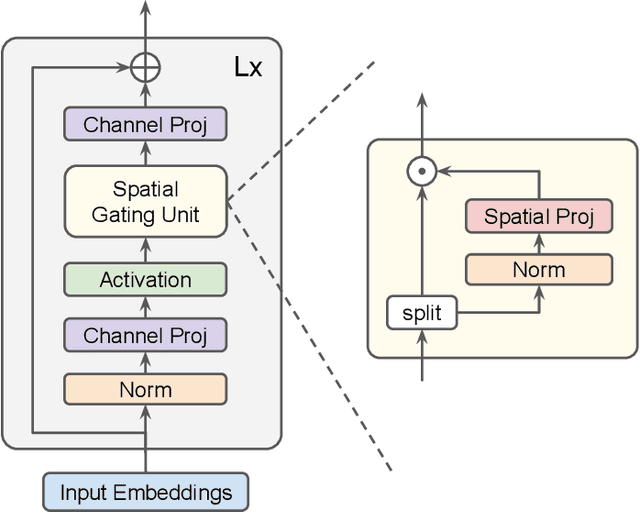

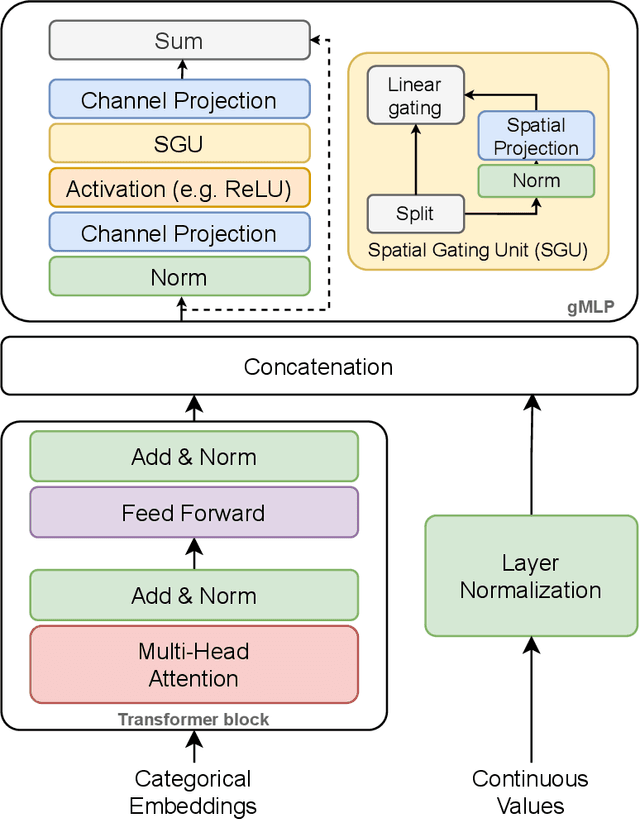

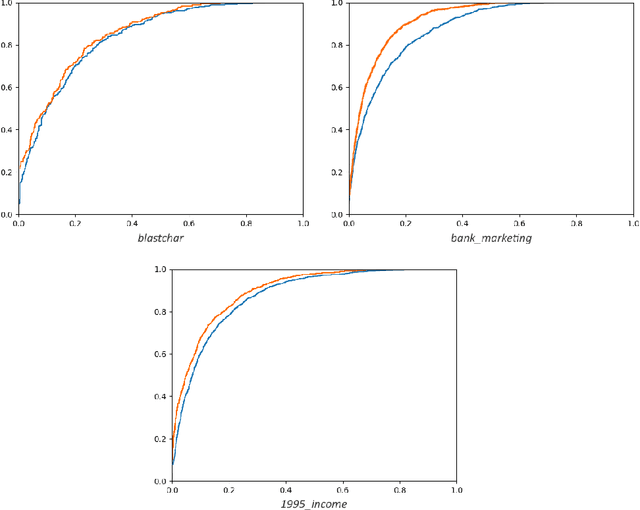

The GatedTabTransformer. An enhanced deep learning architecture for tabular modeling

Jan 01, 2022

Abstract: There is an increasing interest in the application of deep learning architectures to tabular data. One of the state-of-the-art solutions is TabTransformer, which incorporates an attention mechanism to better track relationships between categorical features and then uses a standard MLP to output its final logits. In this paper we propose multiple modifications to the original TabTransformer that perform better on binary classification tasks for three separate datasets, with more than 1% AUROC gains. Inspired by gated MLP, linear projections are implemented in the MLP block and multiple activation functions are tested. We also evaluate the importance of specific hyperparameters during training.
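The gating idea mentioned in the abstract can be sketched in a few lines. The example below follows the general pattern of gated MLP layers (split the features in two, linearly project one half, and use it to scale the other half element-wise). It is a simplified assumption for illustration, not the paper's exact block; `linear` and `gated_unit` are hypothetical helper names.

```python
def linear(x, weights, bias):
    """Dense projection: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def gated_unit(x, weights, bias):
    """Split x into halves (u, v) and return u * linear(v) element-wise.

    Assumes len(x) is even and weights is a square matrix of size len(x) // 2.
    """
    half = len(x) // 2
    u, v = x[:half], x[half:]
    gate = linear(v, weights, bias)
    return [ui * gi for ui, gi in zip(u, gate)]


# With zero weights and unit bias the gate is all ones, so u passes through unchanged.
passthrough = gated_unit([1.0, 2.0, 3.0, 4.0], [[0.0, 0.0], [0.0, 0.0]], [1.0, 1.0])
```

Initialising the projection so that the gate starts near one is a common choice for gated layers, since the block then begins close to an identity mapping on the first half of the features.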

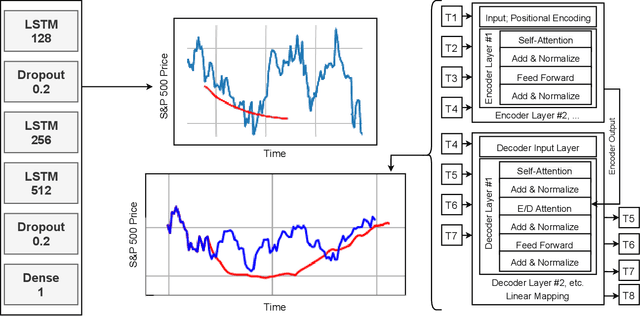

Transformers predicting the future. Applying attention in next-frame and time series forecasting

Aug 18, 2021

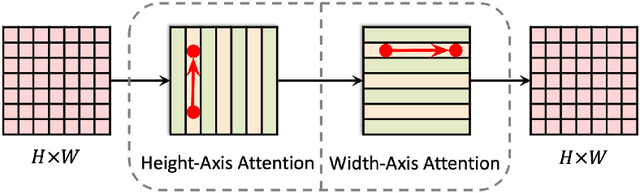

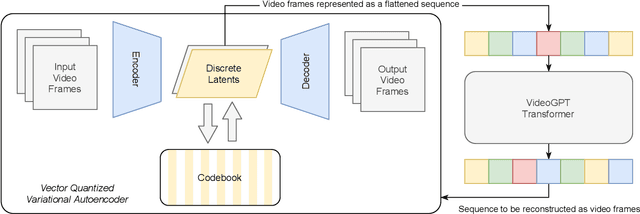

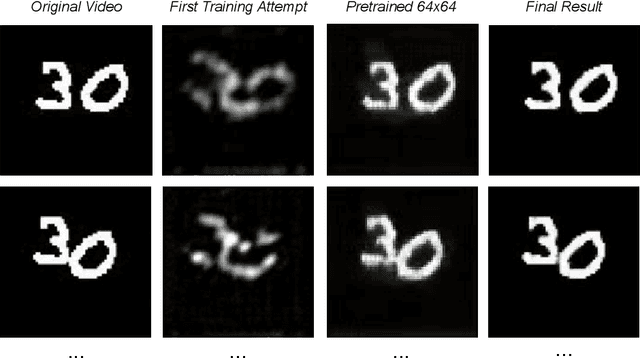

Abstract: Recurrent Neural Networks were, until recently, one of the best ways to capture the temporal dependencies in sequences. However, with the introduction of the Transformer, it has been shown that an architecture with only attention mechanisms and no RNN can improve on the results in various sequence processing tasks (e.g. NLP). Multiple studies since then have shown that similar approaches can be applied to images, point clouds, video, audio or time series forecasting. Furthermore, solutions such as the Perceiver or the Informer have been introduced to expand the applicability of the Transformer. Our main objective is testing and evaluating the effectiveness of applying Transformer-like models to time series data, tackling susceptibility to anomalies, context awareness and space complexity by fine-tuning the hyperparameters, preprocessing the data, applying dimensionality reduction or convolutional encodings, etc. We are also looking at the problem of next-frame prediction and exploring ways to modify existing solutions in order to achieve higher performance and learn generalized knowledge.
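For readers unfamiliar with the attention mechanism the abstract refers to, here is a minimal pure-Python sketch of standard scaled dot-product attention, softmax(QK^T / sqrt(d)) V. It omits multiple heads, masking and learned projections, and is not the specific model evaluated in the paper.

```python
import math


def softmax(scores):
    """Softmax with max-subtraction for numerical stability."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Two identical keys receive equal weight, so the output is the mean of the values.
result = attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]])
```

Because every time step attends to every other one, memory grows quadratically with sequence length, which is the space-complexity concern the abstract raises for long time series.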

Trustless parallel local search for effective distributed algorithm discovery

Apr 02, 2020

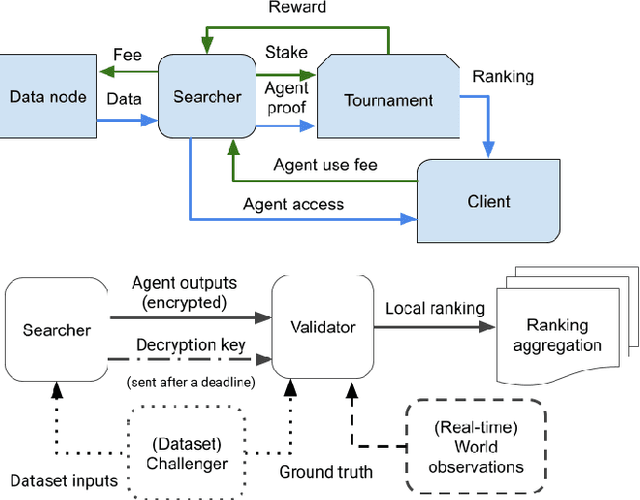

Abstract: Metaheuristic search strategies have proven their effectiveness against man-made solutions in various contexts. They are generally effective in local search area exploitation, and their overall performance is largely determined by the balance between exploration and exploitation. Recent developments in parallel local search explore methods to take advantage of the efficient local exploitation of searches and reach impressive results. This, however, restricts the scaling potential to nodes within a private, trusted computer cluster. In this research we propose a novel blockchain protocol that allows parallel local search to scale to untrusted and anonymous computational nodes. The protocol introduces publicly verifiable performance evaluation of the local optima reported by each node, creating a competitive environment between the local searches. This is reinforced by economic incentives for producing good solutions, which provide coordination between the nodes, as every node tries to explore different sections of the search space to beat its competition.
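The abstract does not spell out how the evaluation is made publicly verifiable, so the following is only a toy sketch of the general idea: if the objective function is deterministic, any participant can recompute the score of a reported solution and discard reports whose claimed score does not match. The objective, the report format and the function names are all hypothetical.

```python
def objective(solution):
    """Hypothetical deterministic objective: higher is better, optimum at all 3s."""
    return -sum((x - 3) ** 2 for x in solution)


def verify_and_rank(reports, objective_fn, tolerance=1e-9):
    """Recompute each claimed score and rank only the reports that check out.

    reports: iterable of (node_id, solution, claimed_score) tuples.
    Returns (score, node_id) pairs sorted from best to worst.
    """
    verified = []
    for node_id, solution, claimed_score in reports:
        actual = objective_fn(solution)
        if abs(actual - claimed_score) <= tolerance:
            verified.append((actual, node_id))
    return sorted(verified, reverse=True)


reports = [
    ("a", [3, 3], 0.0),   # honest report of the optimum
    ("b", [2, 3], -1.0),  # honest report of a worse solution
    ("c", [0, 0], 0.0),   # inflated claim, the real score is -18
]
ranking = verify_and_rank(reports, objective)
```

Here node "c" is dropped because its claim cannot be reproduced, and the remaining nodes are ranked by their recomputed scores, which is the kind of ordering a reward scheme could build on.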

Distributed creation of Machine learning agents for Blockchain analysis

Sep 06, 2019

Abstract: Creating efficient deep neural networks involves repetitive manual optimization of the topology and the hyperparameters. This human intervention significantly slows the process. Recent publications propose various Neural Architecture Search (NAS) algorithms that automate this work. We have applied a customized NAS algorithm with network morphism and Bayesian optimization to the problem of cryptocurrency predictions, where it achieved results on par with our best manually designed models. This is consistent with the findings of other teams, while several known experiments suggest that, given enough computing power, NAS algorithms can surpass state-of-the-art neural network models designed by humans. In this paper, we propose a blockchain network protocol that incentivises independent computing nodes to run NAS algorithms and compete in finding better neural network models for a particular task. If implemented, such a network can be an autonomous and self-improving source of machine learning models, significantly boosting and democratizing access to AI capabilities for many industries.
