Tianzhang Cai

Multi-agent Reinforcement Learning for Energy Saving in Multi-Cell Massive MIMO Systems

Feb 05, 2024

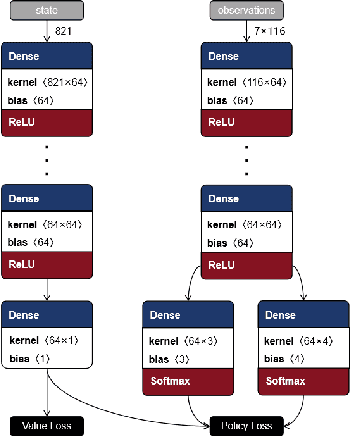

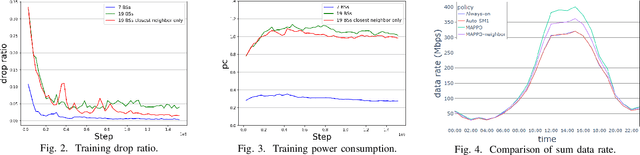

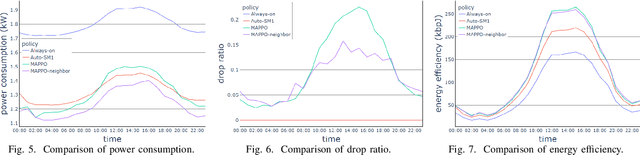

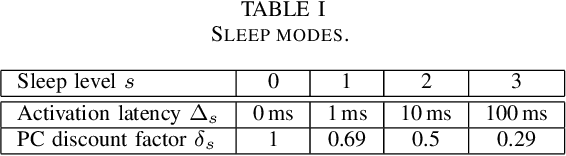

Abstract: We develop a multi-agent reinforcement learning (MARL) algorithm that minimizes the total energy consumption of multiple massive MIMO (multiple-input multiple-output) base stations (BSs) in a multi-cell network while preserving the overall quality of service (QoS). The algorithm does so by deciding on the multi-level advanced sleep modes (ASMs) and antenna switching of these BSs. The problem is modeled as a decentralized partially observable Markov decision process (DEC-POMDP) to enable collaboration between individual BSs, which is necessary to tackle inter-cell interference. A multi-agent proximal policy optimization (MAPPO) algorithm is designed to learn a collaborative BS control policy. To enhance its scalability, a modified version called the MAPPO-neighbor policy is further proposed. Simulation results demonstrate that the trained MAPPO agent outperforms the baseline policies. Specifically, compared to the auto sleep mode 1 (symbol-level sleeping) algorithm, the MAPPO-neighbor policy reduces power consumption by approximately 8.7% during low-traffic hours and improves energy efficiency by approximately 19% during high-traffic hours.

Food Odor Recognition via Multi-step Classification

Oct 13, 2021

Abstract: Predicting a food's label and freshness from its odor is a decades-old task that requires complicated algorithms combined with high-sensitivity sensors. In this paper, we propose a multi-step classifier that first clusters food into four categories, then classifies the food label within the predicted category, and finally identifies the freshness. We use BME688 gas sensors together with BME AI studio for data collection and feature extraction. The normalized dataset is preprocessed with PCA and LDA. We evaluate the effectiveness of algorithms such as tree methods, MLP, and CNN using evaluation metrics at each stage. We also carry out an ablation experiment to show the necessity and feasibility of the multi-step classifier. The results demonstrate the robustness and adaptability of the multi-step classifier.
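The three-stage idea in this abstract (category, then food label within that category, then freshness) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration, not the paper's implementation: the `NearestCentroid` and `MultiStepClassifier` classes, the toy 2-D features, and the label names are hypothetical. The first stage here uses supervised category labels rather than clustering, the freshness stage is conditioned on the predicted food label, and a nearest-centroid rule stands in for the tree, MLP, and CNN models the abstract mentions.

```python
from collections import defaultdict
from math import dist


class NearestCentroid:
    """Hypothetical stand-in classifier: predicts the label with the closest mean vector."""

    def fit(self, X, y):
        groups = defaultdict(list)
        for x, label in zip(X, y):
            groups[label].append(x)
        # One centroid (column-wise mean) per label.
        self.centroids = {
            label: [sum(col) / len(rows) for col in zip(*rows)]
            for label, rows in groups.items()
        }
        return self

    def predict_one(self, x):
        return min(self.centroids, key=lambda label: dist(x, self.centroids[label]))


class MultiStepClassifier:
    """Stage 1: category. Stage 2: food within category. Stage 3: freshness within food."""

    def fit(self, X, categories, foods, freshness):
        self.category_clf = NearestCentroid().fit(X, categories)
        by_category, by_food = defaultdict(list), defaultdict(list)
        for i, (c, f) in enumerate(zip(categories, foods)):
            by_category[c].append(i)
            by_food[f].append(i)
        # One food classifier per category, trained only on that category's samples.
        self.food_clfs = {
            c: NearestCentroid().fit([X[i] for i in idx], [foods[i] for i in idx])
            for c, idx in by_category.items()
        }
        # One freshness classifier per food (an assumption of this sketch).
        self.fresh_clfs = {
            f: NearestCentroid().fit([X[i] for i in idx], [freshness[i] for i in idx])
            for f, idx in by_food.items()
        }
        return self

    def predict_one(self, x):
        category = self.category_clf.predict_one(x)
        food = self.food_clfs[category].predict_one(x)
        fresh = self.fresh_clfs[food].predict_one(x)
        return category, food, fresh


# Toy, made-up features: first axis separates foods, second axis separates freshness.
X = [[0, 0], [0, 1], [2, 0], [2, 1], [10, 0], [10, 1], [12, 0], [12, 1]]
categories = ["fruit"] * 4 + ["meat"] * 4
foods = ["apple", "apple", "banana", "banana", "beef", "beef", "pork", "pork"]
freshness = ["fresh", "stale"] * 4

model = MultiStepClassifier().fit(X, categories, foods, freshness)
print(model.predict_one([0.1, 0.9]))
print(model.predict_one([11.9, 0.1]))
```

Routing each sample to a classifier trained only on its predicted category keeps every later stage a smaller problem, at the cost that a stage-one mistake cannot be corrected downstream.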
