Tianyou Wang

Zero-shot Reconstruction of In-Scene Object Manipulation from Video

Dec 22, 2025

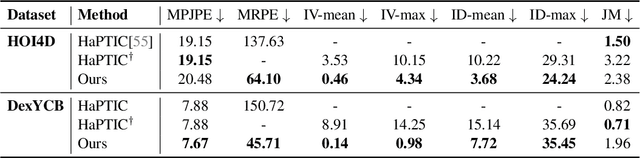

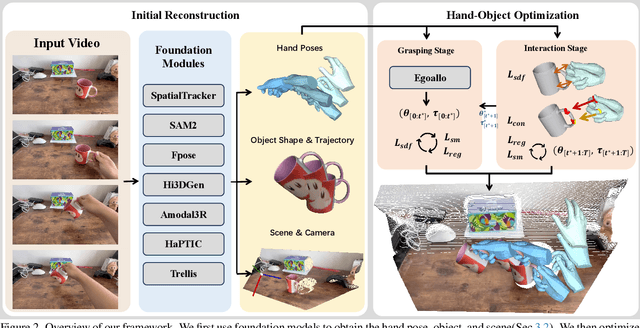

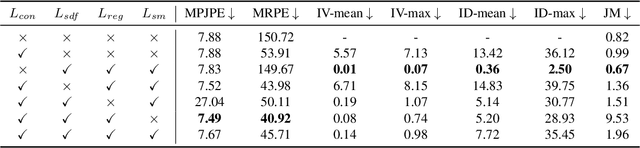

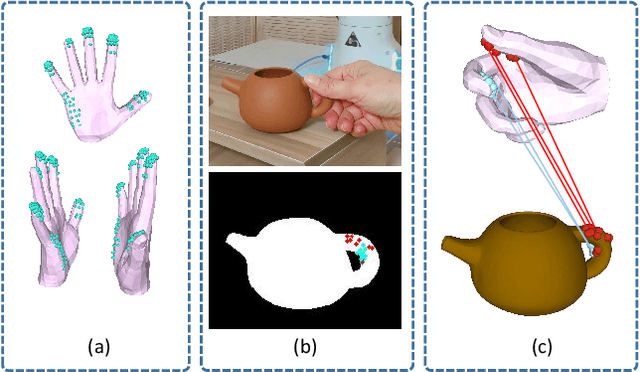

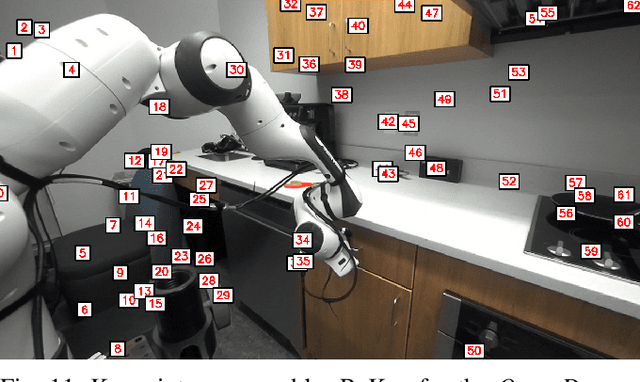

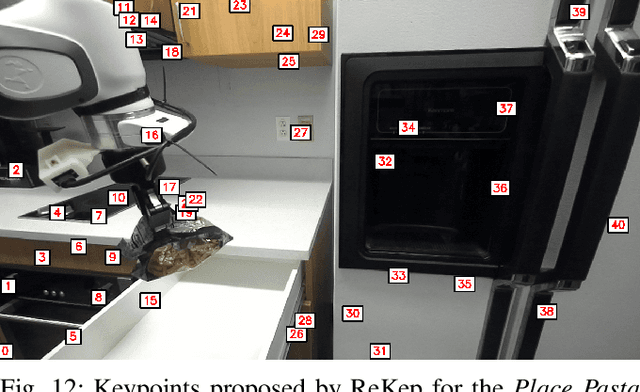

Abstract: We build the first system to address the problem of reconstructing in-scene object manipulation from a monocular RGB video. The problem is challenging due to ill-posed scene reconstruction, ambiguous hand-object depth, and the need for physically plausible interactions. Existing methods operate in hand-centric coordinates and ignore the scene, hindering metric accuracy and practical use. In our method, we first use data-driven foundation models to initialize the core components, including the object mesh and poses, the scene point cloud, and the hand poses. We then apply a two-stage optimization that recovers a complete hand-object motion, from grasping to interaction, that remains consistent with the scene information observed in the input video.

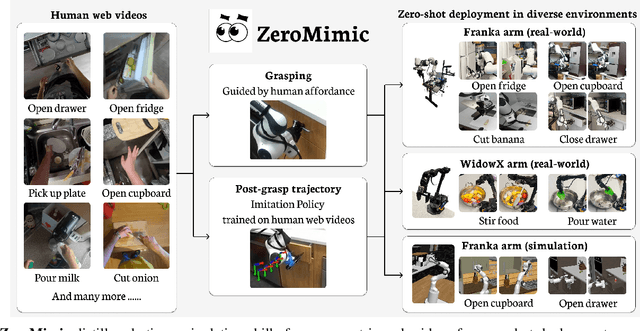

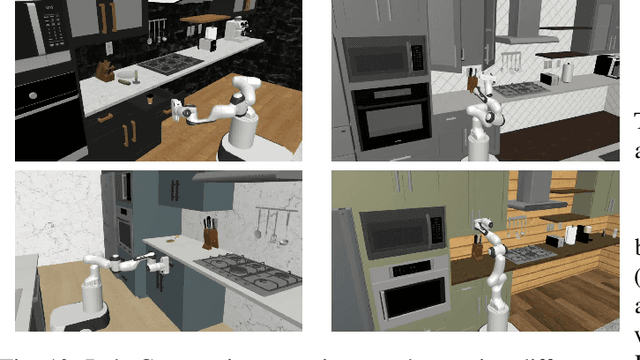

ZeroMimic: Distilling Robotic Manipulation Skills from Web Videos

Mar 31, 2025

Abstract: Many recent advances in robotic manipulation have come through imitation learning, yet these rely largely on mimicking a particularly hard-to-acquire form of demonstrations: those collected on the same robot in the same room with the same objects as the trained policy must handle at test time. In contrast, large pre-recorded human video datasets demonstrating manipulation skills in-the-wild already exist, and they contain valuable information for robots. Is it possible to distill a repository of useful robotic skill policies out of such data without any additional requirements on robot-specific demonstrations or exploration? We present ZeroMimic, the first such system, which generates immediately deployable image goal-conditioned skill policies for several common categories of manipulation tasks (opening, closing, pouring, pick&place, cutting, and stirring), each capable of acting upon diverse objects and across diverse unseen task setups. ZeroMimic is carefully designed to exploit recent advances in semantic and geometric visual understanding of human videos, together with modern grasp affordance detectors and imitation policy classes. After training ZeroMimic on the popular EpicKitchens dataset of ego-centric human videos, we evaluate its out-of-the-box performance in varied real-world and simulated kitchen settings with two different robot embodiments, demonstrating its ability to handle these varied tasks. To enable plug-and-play reuse of ZeroMimic policies on other task setups and robots, we release software and policy checkpoints of our skill policies.

A Method for Arbitrary Instance Style Transfer

Dec 13, 2019

Abstract: The ability to synthesize style and content of different images to form a visually coherent image holds great promise in various applications such as stylistic painting, design prototyping, image editing, and augmented reality. However, the majority of works in image style transfer have focused on transferring the style of an image to the entirety of another image, and only a very small number of works have experimented with methods to transfer style to an instance of another image. Researchers have proposed methods to circumvent the difficulty of transferring style to an instance in an arbitrary shape. In this paper, we propose a topologically inspired algorithm called Forward Stretching to tackle this problem by transforming an instance into a tensor representation, which allows us to transfer style to this instance itself directly. Forward Stretching maps pixels to specific positions and interpolates values between pixels to transform an instance into a tensor. This algorithm allows us to introduce a method to transfer arbitrary style to an instance in an arbitrary shape. We showcase the results of our method in this paper.
