Tiago Fernandes Tavares

Question-Driven Analysis and Synthesis: Building Interpretable Thematic Trees with LLMs for Text Clustering and Controllable Generation

Sep 26, 2025

Abstract: Unsupervised analysis of text corpora is challenging, especially in data-scarce domains where traditional topic models struggle. While these models offer a solution, they typically describe clusters with lists of keywords that require significant manual effort to interpret and often lack semantic coherence. To address this critical interpretability gap, we introduce Recursive Thematic Partitioning (RTP), a novel framework that leverages Large Language Models (LLMs) to interactively build a binary tree. Each node in the tree is a natural language question that semantically partitions the data, resulting in a fully interpretable taxonomy where the logic of each cluster is explicit. Our experiments demonstrate that RTP's question-driven hierarchy is more interpretable than the keyword-based topics from a strong baseline like BERTopic. Furthermore, we establish the quantitative utility of these clusters by showing they serve as powerful features in downstream classification tasks, particularly when the data's underlying themes correlate with the task labels. RTP introduces a new paradigm for data exploration, shifting the focus from statistical pattern discovery to knowledge-driven thematic analysis. Furthermore, we demonstrate that the thematic paths from the RTP tree can serve as structured, controllable prompts for generative models. This transforms our analytical framework into a powerful tool for synthesis, enabling the consistent imitation of specific characteristics discovered in the source corpus.
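To make the idea of a question-driven binary tree concrete, here is a minimal sketch of how a text could be routed through such a tree to obtain its thematic path. It is not the RTP implementation: the names `QuestionNode`, `thematic_path`, and `keyword_answer` are hypothetical, and `keyword_answer` is a simple keyword check standing in for the LLM call that would answer each question in the actual framework.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class QuestionNode:
    """One node of the tree: a yes/no question with two optional children."""
    question: str
    yes: Optional["QuestionNode"] = None
    no: Optional["QuestionNode"] = None


def thematic_path(
    node: Optional[QuestionNode],
    text: str,
    answer_fn: Callable[[str, str], bool],
) -> List[Tuple[str, bool]]:
    """Walk from the root to a leaf, recording each question and its answer."""
    path = []
    while node is not None:
        answer = answer_fn(node.question, text)
        path.append((node.question, answer))
        node = node.yes if answer else node.no
    return path


def keyword_answer(question: str, text: str) -> bool:
    """Hypothetical stand-in for an LLM: checks the quoted keyword in the question."""
    keyword = question.split("'")[1]
    return keyword in text.lower()


tree = QuestionNode(
    "Does the text mention 'music'?",
    yes=QuestionNode("Does the text mention 'lyrics'?"),
)
path = thematic_path(tree, "Genre classification from music lyrics", keyword_answer)
```

The recorded list of question and answer pairs is the kind of explicit cluster description the abstract refers to; in this sketch the same list could be formatted into a prompt for a generative model.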

Multi-label Cross-lingual automatic music genre classification from lyrics with Sentence BERT

Jan 07, 2025

Abstract: Music genres are shaped by both the stylistic features of songs and the cultural preferences of artists' audiences. Automatic classification of music genres using lyrics can be useful in several applications such as recommendation systems, playlist creation, and library organization. We present a multi-label, cross-lingual genre classification system based on multilingual sentence embeddings generated by sBERT. Using a bilingual Portuguese-English dataset with eight overlapping genres, we demonstrate the system's ability to train on lyrics in one language and predict genres in another. Our approach outperforms the baseline approach of translating lyrics and using a bag-of-words representation, improving the genre-wise average F1-Score from 0.35 to 0.69. The classifier uses a one-vs-all architecture, enabling it to assign multiple genre labels to a single lyric. Experimental results reveal that dataset centralization notably improves cross-lingual performance. This approach offers a scalable solution for genre classification across underrepresented languages and cultural domains, advancing the capabilities of music information retrieval systems.
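The one-vs-all architecture mentioned above can be illustrated with a small sketch: one independent binary logistic classifier per label, so a single input can receive several labels at once. This is an assumption-laden toy, not the paper's system: the function names are hypothetical, the 2-D inputs stand in for sentence embeddings, and plain gradient descent in NumPy replaces whatever classifier the authors used.

```python
import numpy as np


def _sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_one_vs_all(X, Y, lr=0.5, epochs=500):
    """Fit one logistic classifier per label column of Y.

    X: (n_samples, n_features) inputs (toy stand-in for embeddings).
    Y: (n_samples, n_labels) binary indicator matrix.
    Returns weights of shape (n_features + 1, n_labels); each column is an
    independent binary classifier because the loss decomposes per label.
    """
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])  # append a bias feature
    W = np.zeros((Xb.shape[1], Y.shape[1]))
    for _ in range(epochs):
        P = _sigmoid(Xb @ W)
        W -= lr * Xb.T @ (P - Y) / X.shape[0]  # gradient of the mean log-loss
    return W


def predict_labels(X, W, threshold=0.5):
    """Return a binary matrix; a row may contain zero, one, or many labels."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    return (_sigmoid(Xb @ W) >= threshold).astype(int)


# Toy data: label 0 depends on the first feature, label 1 on the second.
X_train = np.array([[2.0, 2.0], [2.0, -2.0], [-2.0, 2.0], [-2.0, -2.0]])
Y_train = np.array([[1, 1], [1, 0], [0, 1], [0, 0]])
W = train_one_vs_all(X_train, Y_train)
predictions = predict_labels(np.array([[3.0, 3.0], [3.0, -3.0], [-3.0, -3.0]]), W)
```

Because each label has its own decision threshold, the first test point receives both labels, the second only one, and the third none.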

Segment Relevance Estimation for Audio Analysis and Weakly-Labelled Classification

Nov 12, 2019

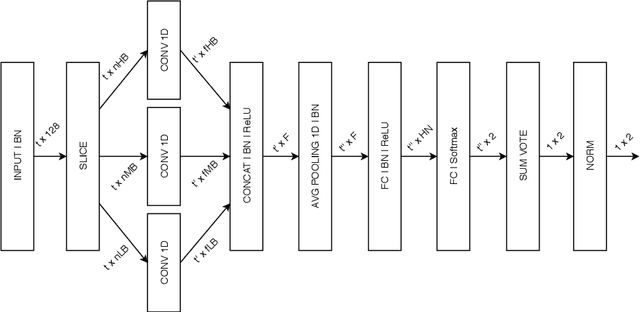

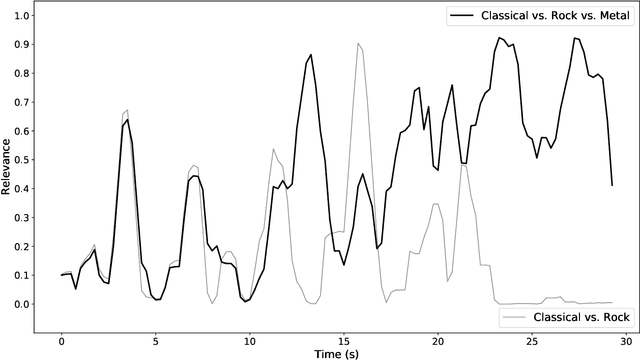

Abstract: We propose a method that quantifies the importance, namely relevance, of audio segments for classification in weakly-labelled problems. It works by drawing information from a set of class-wise one-vs-all classifiers. By selecting the classifiers used in each specific classification problem, the relevance measure adapts to different user-defined viewpoints without requiring additional neural network training. This characteristic allows the relevance measure to highlight audio segments that quickly adapt to user-defined criteria. Such functionality can be used for computer-assisted audio analysis. Also, we propose a neural network architecture, namely RELNET, that leverages the relevance measure for weakly-labelled audio classification problems. RELNET was evaluated on the DCASE2018 dataset and achieved competitive classification results when compared to previous attention-based proposals.

Random Projections of Mel-Spectrograms as Low-Level Features for Automatic Music Genre Classification

Nov 12, 2019

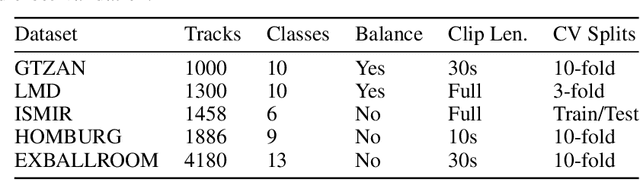

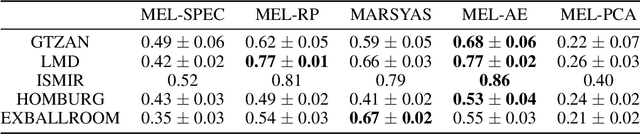

Abstract: In this work, we analyse the random projections of Mel-spectrograms as low-level features for music genre classification. This approach was compared to handcrafted features, features learned using an auto-encoder and features obtained from a transfer learning setting. Tests on five different well-known, publicly available datasets show that random projections lead to results comparable to learned features and outperform features obtained via transfer learning in a shallow learning scenario. Random projections do not require extensive specialist knowledge and, simultaneously, require less computational power for training than other projection-based low-level features. Therefore, they are a viable choice for usage in shallow learning content-based music genre classification.
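As a rough illustration of the technique named in this abstract, the sketch below projects each Mel-spectrogram frame through a fixed random Gaussian matrix and summarises the result over time. The details are assumptions rather than the paper's pipeline: the function name, the number of components, the scaling, and the mean/standard-deviation pooling are all hypothetical choices, and the input is random data standing in for a real Mel-spectrogram.

```python
import numpy as np


def random_projection_features(mel_spec, n_components=16, seed=0):
    """Project Mel-spectrogram frames onto random directions and pool over time.

    mel_spec: array of shape (n_frames, n_mels).
    Returns a track-level vector of length 2 * n_components.
    """
    # A fixed seed gives the same projection matrix for every track, which is
    # required for the features to be comparable across tracks.
    rng = np.random.default_rng(seed)
    n_mels = mel_spec.shape[1]
    projection = rng.standard_normal((n_mels, n_components)) / np.sqrt(n_components)
    projected = mel_spec @ projection  # shape (n_frames, n_components)
    # Summarise the frame-level projections with simple statistics (assumed pooling).
    return np.concatenate([projected.mean(axis=0), projected.std(axis=0)])


# Random data standing in for a 200-frame, 128-band Mel-spectrogram.
fake_mel = np.random.default_rng(42).random((200, 128))
features = random_projection_features(fake_mel)
```

The projection matrix is never trained, which is why this kind of feature needs no learning step before the classifier; the resulting vector could be passed to any shallow classifier.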
