Segment Relevance Estimation for Audio Analysis and Weakly-Labelled Classification

Nov 12, 2019

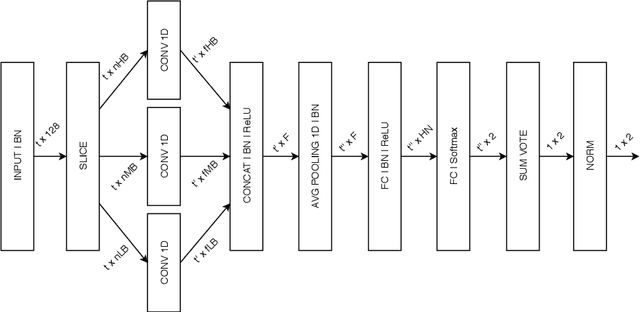

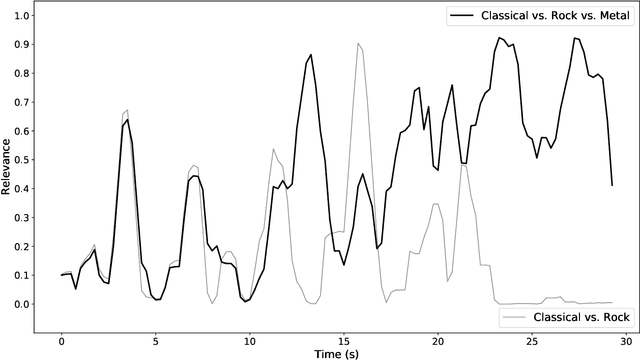

We propose a method that quantifies the importance, or relevance, of audio segments for classification in weakly-labelled problems. It draws information from a set of class-wise one-vs-all classifiers. By selecting which classifiers are used for a specific classification problem, the relevance measure adapts to different user-defined viewpoints without requiring additional neural network training. This allows the measure to adapt quickly to user-defined criteria when highlighting audio segments, a functionality that can be used for computer-assisted audio analysis. We also propose a neural network architecture, RELNET, that leverages the relevance measure for weakly-labelled audio classification. RELNET was evaluated on the DCASE2018 dataset and achieved competitive classification results compared to previous attention-based proposals.
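The abstract does not state the relevance formula, so the following is only a minimal sketch of the general idea, not the authors' method. It assumes that each one-vs-all classifier yields a score in [0, 1] per segment, that a "viewpoint" is a subset of classifier indices, and that relevance is the maximum score over that subset. The function names `segment_relevance` and `relevance_weighted_pooling`, the max aggregation, and the toy scores are all hypothetical.

```python
import numpy as np

def segment_relevance(segment_scores, selected_classes):
    """Relevance of each segment under a user-chosen viewpoint.

    segment_scores: (n_segments, n_classes) one-vs-all classifier outputs in [0, 1].
    selected_classes: indices of the classifiers that define the viewpoint.
    Assumption: relevance is the max score over the selected classifiers.
    """
    scores = np.asarray(segment_scores, dtype=float)
    return scores[:, list(selected_classes)].max(axis=1)

def relevance_weighted_pooling(segment_scores, relevance):
    """Pool segment-level scores into clip-level scores, weighted by relevance.

    Assumption: a simple normalized weighted average stands in for whatever
    pooling a relevance-based network would actually learn.
    """
    scores = np.asarray(segment_scores, dtype=float)
    weights = relevance / (relevance.sum() + 1e-12)
    return weights @ scores

# Toy scores for 4 segments and 3 classes (made-up numbers).
scores = np.array([
    [0.9, 0.1, 0.2],
    [0.2, 0.1, 0.8],
    [0.1, 0.1, 0.1],
    [0.7, 0.3, 0.6],
])

# Two viewpoints over the same classifier outputs; no retraining involved.
rel_a = segment_relevance(scores, [0])
rel_b = segment_relevance(scores, [1, 2])
clip_scores = relevance_weighted_pooling(scores, rel_a)
```

Changing `selected_classes` changes which segments are highlighted while reusing the same classifier outputs, which mirrors the claim that the measure adapts to a new viewpoint without additional training.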
