Thorsten Laude

HEVC Inter Coding Using Deep Recurrent Neural Networks and Artificial Reference Pictures

Dec 05, 2018

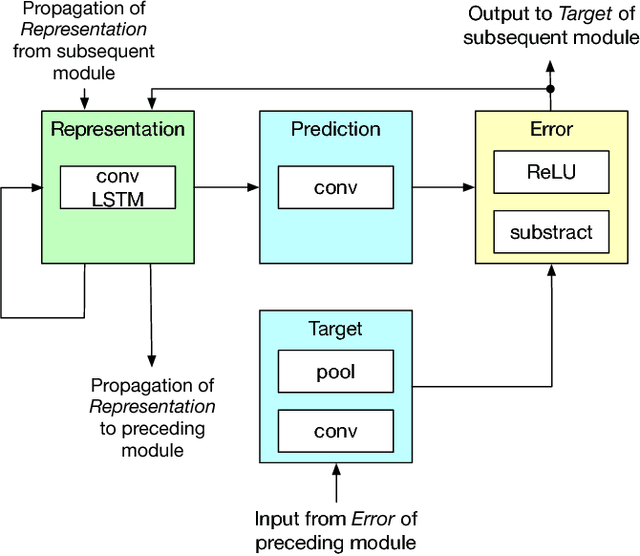

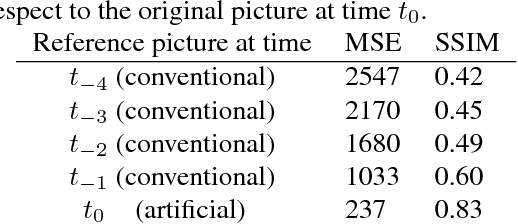

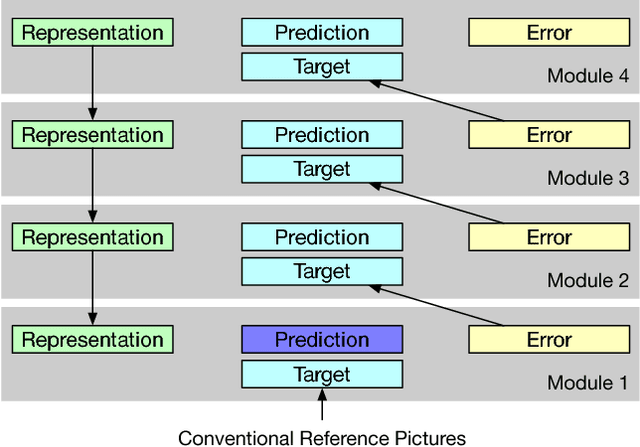

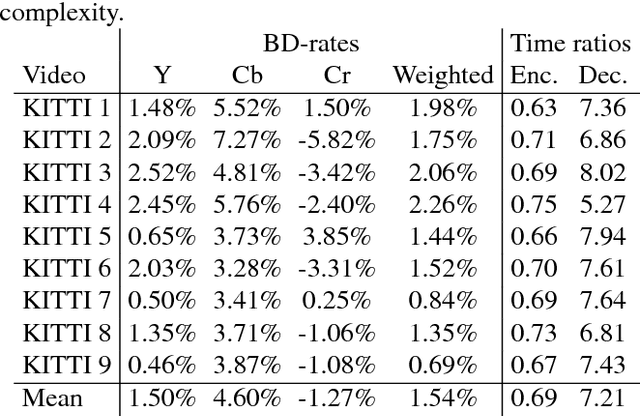

Abstract: The efficiency of motion-compensated prediction in modern video codecs depends heavily on the available reference pictures. Occlusions and non-linear motion pose challenges for motion compensation and often result in high bit rates for the prediction error. We propose the generation of artificial reference pictures using deep recurrent neural networks. Conceptually, a reference picture at the time instance of the currently coded picture is generated from previously reconstructed conventional reference pictures. Based on these artificial reference pictures, we propose a complete coding pipeline based on HEVC. By using the artificial reference pictures for motion-compensated prediction, average BD-rate gains of 1.5% over HEVC are achieved.
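The BD-rate figure quoted in the abstract is the Bjøntegaard delta rate, the average bit-rate difference between two rate-distortion curves at equal quality. The following is a minimal sketch of the commonly used cubic-fit variant, not the evaluation code behind the reported result; the function name `bd_rate` and its parameters are hypothetical, and it assumes at least four rate/PSNR points per curve. In this sign convention a negative value means the test codec needs fewer bits, so a 1.5% gain would appear as roughly -1.5.

```python
import numpy as np


def bd_rate(rate_anchor, psnr_anchor, rate_test, psnr_test):
    """Average bit-rate difference (in percent) of test vs. anchor.

    Hypothetical helper: fits a cubic polynomial to log(rate) as a
    function of PSNR for each curve and compares the integrals over
    the overlapping PSNR range.
    """
    log_a = np.log(np.asarray(rate_anchor, dtype=float))
    log_t = np.log(np.asarray(rate_test, dtype=float))
    psnr_a = np.asarray(psnr_anchor, dtype=float)
    psnr_t = np.asarray(psnr_test, dtype=float)

    # Cubic fit of log-rate over quality for both curves.
    fit_a = np.polyfit(psnr_a, log_a, 3)
    fit_t = np.polyfit(psnr_t, log_t, 3)

    # Only the PSNR interval covered by both curves is compared.
    lo = max(psnr_a.min(), psnr_t.min())
    hi = min(psnr_a.max(), psnr_t.max())

    # Definite integrals of both fitted polynomials over [lo, hi].
    prim_a = np.polyint(fit_a)
    prim_t = np.polyint(fit_t)
    int_a = np.polyval(prim_a, hi) - np.polyval(prim_a, lo)
    int_t = np.polyval(prim_t, hi) - np.polyval(prim_t, lo)

    # Mean log-rate difference, converted back to a percentage.
    avg_diff = (int_t - int_a) / (hi - lo)
    return (np.exp(avg_diff) - 1.0) * 100.0
```

Calling `bd_rate` with the four rate points of an anchor encoder and a test encoder, measured for example at four quantization settings, returns a single percentage that summarizes the gap between the two curves.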

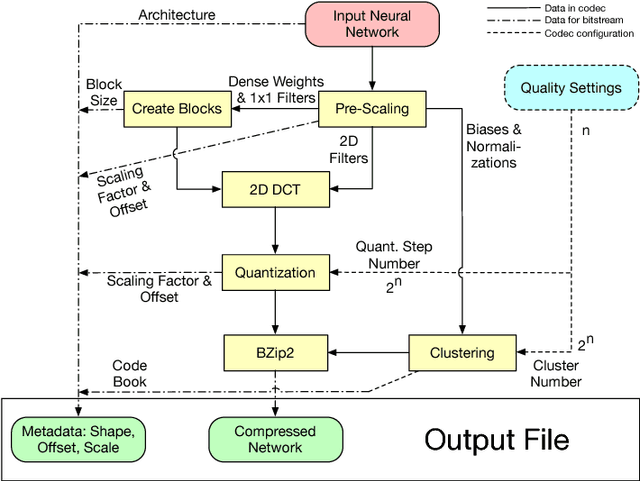

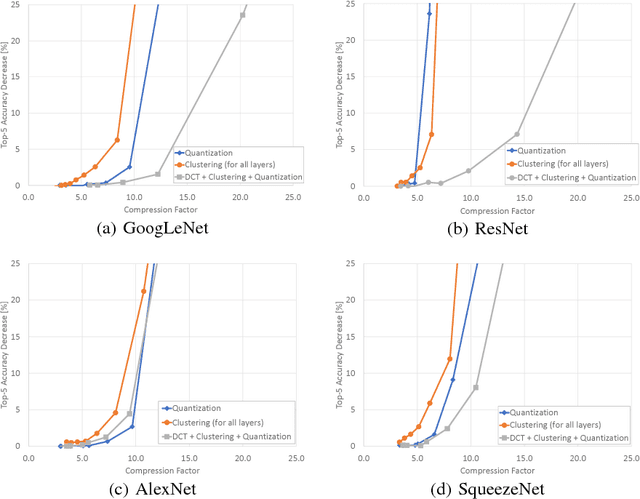

Neural Network Compression using Transform Coding and Clustering

May 18, 2018

Abstract: With the deployment of neural networks on mobile devices and the necessity of transmitting neural networks over limited or expensive channels, the file size of the trained model has been identified as a bottleneck. In this paper, we propose a codec for the compression of neural networks which is based on transform coding for convolutional and dense layers and on clustering for biases and normalizations. By using this codec, we achieve average compression factors between 7.9 and 9.3, while the accuracy of the compressed networks for image classification decreases by only 1% to 2%, respectively.
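The two building blocks named in the abstract can be illustrated with a small sketch. It is not the authors' codec: the choice of a 2D DCT with uniform quantization for weight matrices and of one-dimensional k-means for biases is an assumption made for illustration, entropy coding of the resulting integers is omitted, and the names `dct_matrix`, `transform_code`, and `cluster_1d` are hypothetical.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II basis as an n x n matrix."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] /= np.sqrt(2.0)
    return m


def transform_code(weights, step):
    """Transform-code a 2D weight matrix (assumed scheme).

    Returns the integer quantization levels that would be stored
    and the weights reconstructed from them.
    """
    rows, cols = weights.shape
    d_r, d_c = dct_matrix(rows), dct_matrix(cols)
    coeffs = d_r @ weights @ d_c.T          # forward 2D DCT
    levels = np.round(coeffs / step).astype(np.int32)
    recon = d_r.T @ (levels * step) @ d_c   # dequantize, inverse DCT
    return levels, recon


def cluster_1d(values, k, iters=20):
    """Plain k-means on scalars; returns (centers, index per value)."""
    values = np.asarray(values, dtype=float)
    centers = np.linspace(values.min(), values.max(), k)
    for _ in range(iters):
        idx = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
        for j in range(k):
            if np.any(idx == j):
                centers[j] = values[idx == j].mean()
    # Final assignment against the updated centers.
    idx = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
    return centers, idx
```

In such a scheme, a larger `step` or a smaller `k` shrinks the stored representation at the cost of a larger reconstruction error, which is the trade-off between compression factor and accuracy that the abstract reports.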
