Thompson Chyrikov

A CT Image Denoising Method with Residual Encoder-Decoder Network

Apr 02, 2024

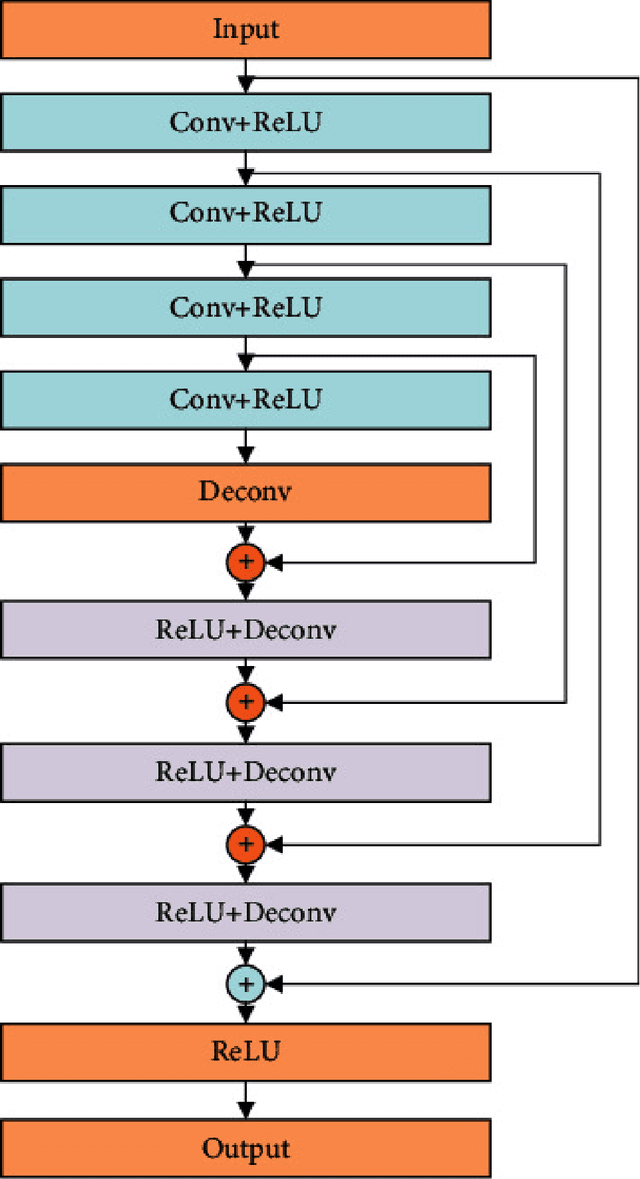

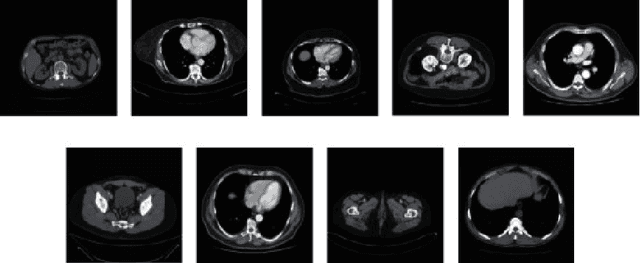

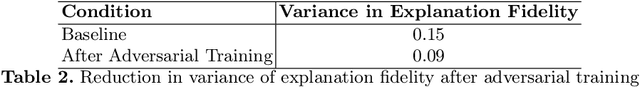

Abstract: Low-dose CT significantly reduces patients' radiation exposure, but it increases noise and artifacts in the resulting images, which can hinder accurate medical diagnosis. Traditional noise-reduction methods struggle to preserve image textures because statistical properties are difficult to model directly in the image domain. To address these limitations, this study introduces an enhanced noise-reduction technique centered on an advanced residual encoder-decoder network. By incorporating recursive processing into the foundational network, the method reduces computational complexity and improves the effectiveness of noise reduction. In addition, root-mean-square error and perceptual loss functions are introduced to retain the images' textural details. The technique also includes optimized tissue segmentation, which improves artifact handling after enhancement. Validation on the TCGA-COAD clinical dataset shows better noise reduction and image quality than the existing WGAN approach, as measured by post-denoising PSNR and SSIM. This advance in CT image processing offers a practical solution for clinical applications, with lower computational demands and faster processing times without compromising image quality.
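The abstract above names root-mean-square error as a loss term and PSNR as an evaluation metric. As a rough illustration of how the two quantities relate, here is a minimal sketch in plain Python. It is not the authors' implementation: the function names, the list-of-rows image representation, and the default `data_range` of 255 are assumptions made for this example, and a real CT pipeline would normally use an array library and a data range that matches the scanner's intensity scale.

```python
import math


def rmse(reference, denoised):
    """Root-mean-square error between two equally sized 2-D images (lists of rows)."""
    ref = [p for row in reference for p in row]
    den = [p for row in denoised for p in row]
    if len(ref) != len(den) or not ref:
        raise ValueError("images must be non-empty and the same size")
    return math.sqrt(sum((r - d) ** 2 for r, d in zip(ref, den)) / len(ref))


def psnr(reference, denoised, data_range=255.0):
    """Peak signal-to-noise ratio in dB.

    data_range is the assumed span of valid pixel values (hypothetical default).
    """
    err = rmse(reference, denoised)
    if err == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 20.0 * math.log10(data_range / err)


# Toy 2x2 "images" used only to exercise the functions.
reference = [[100, 110], [120, 130]]
denoised = [[101, 109], [121, 129]]

print("RMSE:", rmse(reference, denoised))
print("PSNR (dB):", psnr(reference, denoised))
```

Because PSNR is a logarithmic function of RMSE, lowering the RMSE always raises the PSNR. That is one reason a perceptual term and a structural metric such as SSIM are reported alongside it: they capture texture and structure that a pixel-wise error does not.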

The Limits of Perception: Analyzing Inconsistencies in Saliency Maps in XAI

Mar 23, 2024

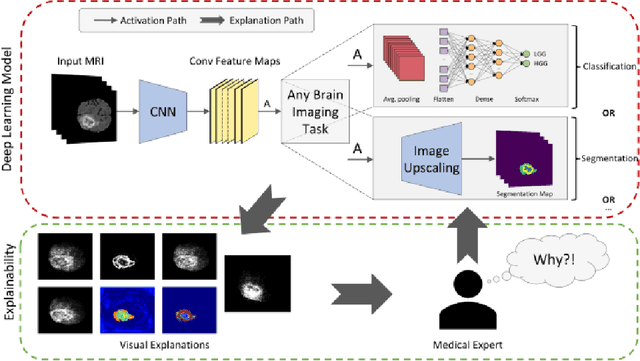

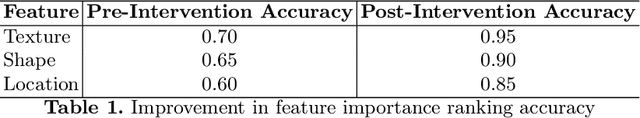

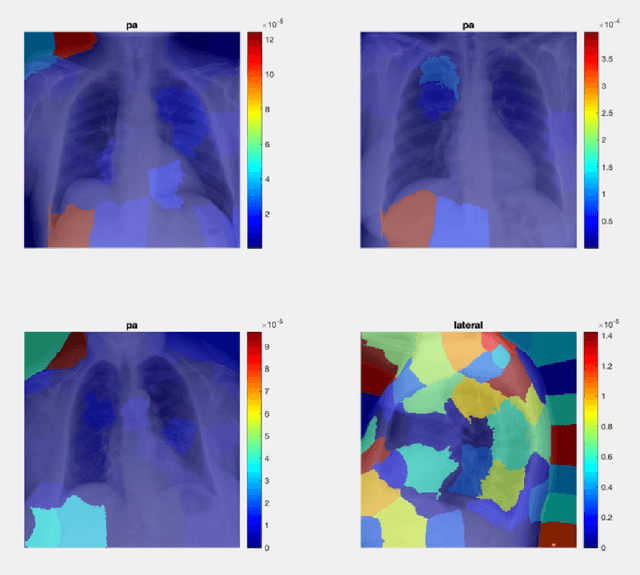

Abstract: Explainable artificial intelligence (XAI) plays an indispensable role in demystifying the decision-making processes of AI, especially within the healthcare industry. Clinicians rely heavily on detailed reasoning when making a diagnosis, often examining CT scans for specific features that distinguish benign from malignant lesions. A comprehensive diagnostic approach includes an evaluation of imaging results, patient observations, and clinical tests. The deployment of deep learning models as support systems in medical diagnostics has surged, offering advances that traditional methods could not. However, the complexity and opacity of these models present a double-edged sword. Because they operate as "black boxes," with their reasoning obscured and inaccessible, there is an increased risk of misdiagnosis, which can lead to patient harm. Hence, there is a pressing need to cultivate transparency within AI systems, ensuring that the rationale behind an AI's diagnostic recommendations is clear and understandable to medical practitioners. This shift towards transparency is not just beneficial; it is a critical step towards responsible AI integration in healthcare, ensuring that AI aids rather than hinders medical professionals in their crucial work.
