Thomas W. Rogers

Evaluation of an AI system for the automated detection of glaucoma from stereoscopic optic disc photographs: the European Optic Disc Assessment Study

Jun 04, 2019

Abstract: Objectives: To evaluate the performance of a deep learning-based Artificial Intelligence (AI) software for the detection of glaucoma from stereoscopic optic disc photographs, and to compare it with that of a large cohort of ophthalmologists and optometrists. Methods: A retrospective study evaluating the diagnostic performance of an AI software (Pegasus v1.0, Visulytix Ltd., London, UK) and comparing it to that of 243 European ophthalmologists and 208 British optometrists, as determined in previous studies, for the detection of glaucomatous optic neuropathy from 94 stereoscopic photographic slides scanned into digital format. Results: Pegasus detected glaucomatous optic neuropathy with an accuracy of 83.4% (95% CI: 77.5-89.2). This is comparable to an average ophthalmologist accuracy of 80.5% (95% CI: 67.2-93.8) and an average optometrist accuracy of 80% (95% CI: 67-88) on the same images. In addition, the AI system had an intra-observer agreement (Cohen's Kappa, $\kappa$) of 0.74 (95% CI: 0.63-0.85), compared to 0.70 (range: -0.13 to 1.00; 95% CI: 0.67-0.73) and 0.71 (range: 0.08 to 1.00) for ophthalmologists and optometrists, respectively. There was no statistically significant difference between the performance of the deep learning system and ophthalmologists or optometrists. Conclusion: The AI system obtained a diagnostic performance and repeatability comparable to that of the ophthalmologists and optometrists. We conclude that deep learning-based AI systems, such as Pegasus, demonstrate significant promise in the assisted detection of glaucomatous optic neuropathy.
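For readers unfamiliar with the agreement statistic quoted in the abstract above, the following is a minimal sketch of how Cohen's kappa can be computed from two sets of gradings of the same images, for example one observer's first and second reading. The function name `cohens_kappa` and the example labels are illustrative assumptions; they are not taken from the study, and the study's own computation (including its confidence intervals) is not described here.

```python
def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(ratings_a) != len(ratings_b) or len(ratings_a) == 0:
        raise ValueError("ratings must be non-empty and of equal length")

    n = len(ratings_a)
    labels = set(ratings_a) | set(ratings_b)

    # Observed agreement: fraction of items given the same label both times.
    p_observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n

    # Chance agreement: product of the marginal label frequencies, summed over labels.
    p_chance = sum(
        (list(ratings_a).count(label) / n) * (list(ratings_b).count(label) / n)
        for label in labels
    )

    if p_chance == 1.0:
        # Both readings use a single identical label; agreement is trivially perfect.
        return 1.0
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical gradings (1 = glaucomatous, 0 = healthy); not data from the study.
first_reading = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
second_reading = [1, 1, 1, 0, 0, 0, 0, 1, 1, 0]
kappa = cohens_kappa(first_reading, second_reading)
```

In this made-up example the two readings agree on 8 of 10 images and chance agreement is 0.5, so kappa is about 0.6. A value of 1 indicates perfect agreement and 0 indicates agreement no better than chance.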

Automated detection of smuggled high-risk security threats using Deep Learning

Sep 09, 2016

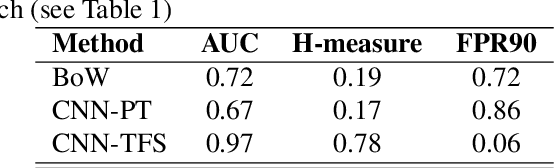

Abstract: The security infrastructure is ill-equipped to detect and deter the smuggling of non-explosive devices that enable terror attacks such as those recently perpetrated in western Europe. The detection of so-called "small metallic threats" (SMTs) in cargo containers currently relies on statistical risk analysis, intelligence reports, and visual inspection of X-ray images by security officers. The latter is very slow and unreliable due to the difficulty of the task: objects potentially spanning less than 50 pixels have to be detected in images containing more than 2 million pixels against very complex and cluttered backgrounds. In this contribution, we demonstrate for the first time the use of Convolutional Neural Networks (CNNs), a type of Deep Learning, to automate the detection of SMTs in full-size X-ray images of cargo containers. Novel approaches for dataset augmentation allowed CNNs to be trained from scratch despite the scarcity of available data. We report fewer than 6% false alarms when detecting 90% of SMTs synthetically concealed in stream-of-commerce images, which corresponds to an improvement of over an order of magnitude over conventional approaches such as Bag-of-Words (BoWs). The proposed scheme offers potentially super-human performance in a fraction of the time it would take a security officer to carry out visual inspection (processing time is approximately 3.5s per container image).
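The headline figure in the abstract above pairs a detection rate with a false alarm rate. As a rough illustration of how such an operating point can be read off a classifier's output scores, the sketch below picks the highest threshold that still detects a target fraction of threat images and then counts the benign images that would be flagged at that threshold. The function name `false_alarm_rate_at_detection` and all scores are hypothetical; this is not the paper's evaluation code.

```python
import math


def false_alarm_rate_at_detection(threat_scores, benign_scores, target_detection=0.90):
    """Return (threshold, false_alarm_rate) at the given detection rate.

    An image is flagged when its score is >= threshold.
    """
    if not threat_scores or not benign_scores:
        raise ValueError("both score lists must be non-empty")

    # Sort threat scores from most to least confident.
    ranked = sorted(threat_scores, reverse=True)

    # Number of threat images that must be flagged to reach the target rate.
    needed = max(1, math.ceil(target_detection * len(ranked)))

    # The score of the last threat image we need to catch becomes the threshold.
    threshold = ranked[needed - 1]

    # Benign images at or above the threshold are false alarms.
    false_alarms = sum(score >= threshold for score in benign_scores)
    return threshold, false_alarms / len(benign_scores)


# Hypothetical classifier scores; not data from the paper.
threat = [0.95, 0.90, 0.85, 0.80, 0.75, 0.70, 0.65, 0.60, 0.55, 0.20]
benign = [0.10, 0.15, 0.30, 0.58, 0.05, 0.25, 0.40, 0.35, 0.12, 0.22]
threshold, far = false_alarm_rate_at_detection(threat, benign, 0.90)
```

With these made-up scores, catching 9 of the 10 threat images requires a threshold of 0.55, at which one of the ten benign images is flagged, giving a 10% false alarm rate.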

Detection of concealed cars in complex cargo X-ray imagery using Deep Learning

Sep 09, 2016

Abstract: Non-intrusive inspection systems based on X-ray radiography techniques are routinely used at transport hubs to ensure the conformity of cargo content with the supplied shipping manifest. As trade volumes increase and regulations become more stringent, manual inspection by trained operators is less and less viable due to low throughput. Machine vision techniques can assist operators in their task by automating parts of the inspection workflow. Since cars are routinely involved in trafficking, export fraud, and tax evasion schemes, they represent an attractive target for automated detection and flagging for subsequent inspection by operators. In this contribution, we describe a method for the detection of cars in X-ray cargo images based on trained-from-scratch Convolutional Neural Networks. By introducing an oversampling scheme that suitably addresses the low number of car images available for training, we achieved a 100% car image classification rate at a false positive rate of 1-in-454. Cars that were partially or completely obscured by other goods, a modus operandi frequently adopted by criminals, were correctly detected. We believe that this level of performance suggests that the method is suitable for deployment in the field. It is expected that the generic object detection workflow described can be extended to other object classes given the availability of suitable training data.

Automated X-ray Image Analysis for Cargo Security: Critical Review and Future Promise

Aug 02, 2016

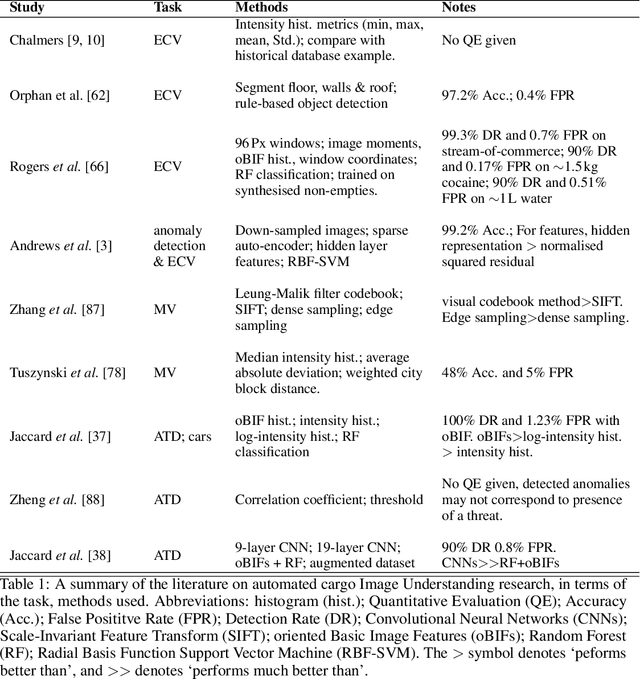

Abstract: We review the relatively immature field of automated image analysis for X-ray cargo imagery. There is increasing demand for automated analysis methods that can assist in the inspection and selection of containers, due to the ever-growing volumes of traded cargo and the increasing concerns that customs- and security-related threats are being smuggled across borders by organised crime and terrorist networks. We split the field into the classical pipeline of image preprocessing and image understanding. Preprocessing includes image manipulation, quality improvement, Threat Image Projection (TIP), and material discrimination and segmentation. Image understanding includes Automated Threat Detection (ATD) and Automated Contents Verification (ACV). We identify several gaps in the literature that need to be addressed and propose ideas for future research. Where the current literature is sparse, we borrow from the single-view, multi-view, and CT X-ray baggage domains, which have some characteristics in common with X-ray cargo.
