Thomas Hollis

Catch Me If You Can

Apr 18, 2019

Abstract: As advances in signature recognition have plateaued at around a 2% error rate, it is worth investigating alternative approaches. This paper uses Variational Auto-Encoders (VAEs) to learn a latent space representation of genuine signatures. Unlabelled signatures are then passed through the trained VAE, with the expectation that only genuine ones will be reconstructed successfully. The latent space representation and the reconstruction loss are then used by random forest and kNN classifiers for prediction. VAE disentanglement and the possibility of posterior collapse are also assessed and analysed. The final results suggest that while this method performs less well than existing alternatives, further work may allow it to be used as part of an ensemble in future models.
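The classification step described in the abstract can be illustrated with a minimal sketch. It assumes a VAE has already produced a latent vector and a scalar reconstruction loss for each signature; the function names, the toy values, and the choice of a plain Euclidean kNN are illustrative assumptions and are not taken from the paper.

```python
import math

def build_features(latent, recon_loss):
    """Concatenate a latent vector with its scalar reconstruction loss.

    Hypothetical helper: the paper's actual feature layout is not given
    in the abstract.
    """
    return list(latent) + [recon_loss]

def knn_predict(train_x, train_y, query, k=3):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    neighbours = sorted(
        (math.dist(x, query), y) for x, y in zip(train_x, train_y)
    )
    votes = [label for _, label in neighbours[:k]]
    return max(set(votes), key=votes.count)

# Toy, made-up data: label 1 = genuine (low reconstruction loss),
# label 0 = forged (high reconstruction loss).
train_x = [
    build_features([0.1, 0.2], 0.05),
    build_features([0.0, 0.3], 0.07),
    build_features([0.2, 0.1], 0.04),
    build_features([1.5, -1.0], 0.90),
    build_features([1.2, -0.8], 1.10),
    build_features([1.4, -1.2], 0.95),
]
train_y = [1, 1, 1, 0, 0, 0]

genuine_guess = knn_predict(train_x, train_y, build_features([0.1, 0.25], 0.06))
forged_guess = knn_predict(train_x, train_y, build_features([1.3, -1.0], 1.00))
```

In this sketch the reconstruction loss is simply appended as one more feature, so a signature the VAE reconstructs poorly sits far from the genuine cluster. A random forest could be given the same feature vectors.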

A Comparison of LSTMs and Attention Mechanisms for Forecasting Financial Time Series

Dec 18, 2018

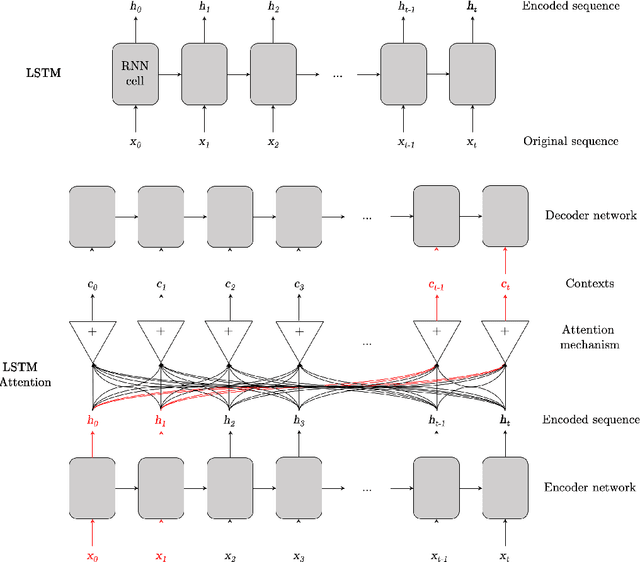

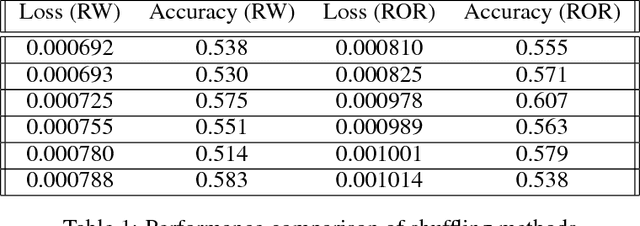

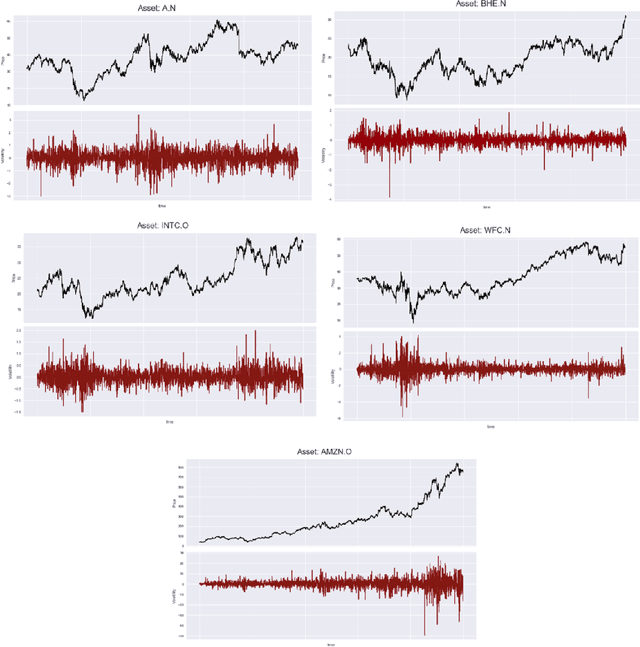

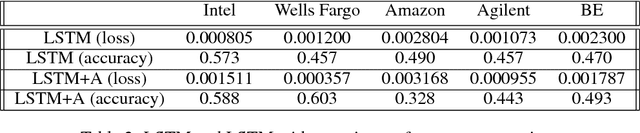

Abstract: While LSTMs show increasingly promising results for forecasting Financial Time Series (FTS), this paper assesses whether attention mechanisms can further improve performance. The hypothesis is that attention can help mitigate the long-term dependency problems experienced by LSTM models. To test this hypothesis, the main contribution of this paper is the implementation of an LSTM with attention. The benchmark LSTM and the LSTM with attention were compared, and both achieved reasonable performance of up to 60% on five stocks from Kaggle's Two Sigma dataset. This comparative analysis demonstrates that an LSTM with attention can indeed outperform standalone LSTMs, but further investigation is required, as issues do arise with such model architectures.
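To make the idea of attention over LSTM outputs concrete, here is a minimal sketch of one common variant, dot-product attention with the final hidden state as the query. The abstract does not specify which attention mechanism the paper implements, so this variant, the function names, and the toy hidden states are all assumptions for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_context(hidden_states):
    """Weight every hidden state by its dot product with the final state.

    hidden_states: list of equal-length vectors, one per time step
    (assumed here to be the outputs of an LSTM).
    Returns the attention weights and the weighted-sum context vector.
    """
    query = hidden_states[-1]
    scores = [sum(h_d * q_d for h_d, q_d in zip(h, query)) for h in hidden_states]
    weights = softmax(scores)
    context = [
        sum(w * h[d] for w, h in zip(weights, hidden_states))
        for d in range(len(query))
    ]
    return weights, context

# Toy hidden states for three time steps (made-up values).
weights, context = attention_context([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]])
```

Because the context vector draws on every time step directly, an early step that resembles the current state can still contribute, rather than having to survive many recurrent updates.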
