Thomas Donaldson

Taking Principles Seriously: A Hybrid Approach to Value Alignment

Dec 21, 2020

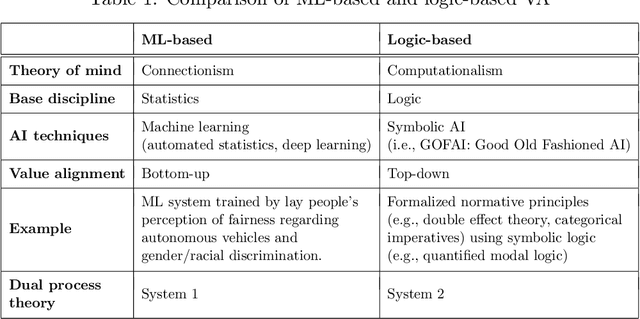

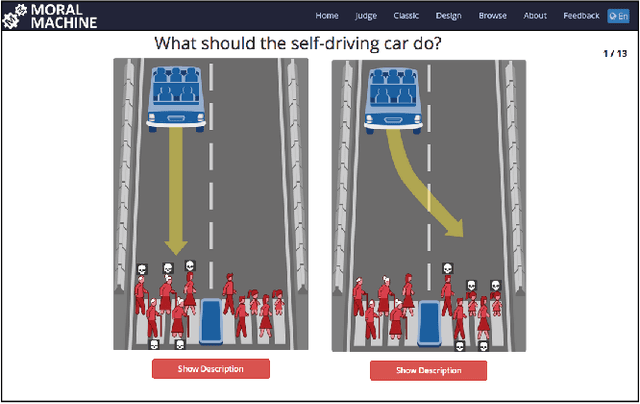

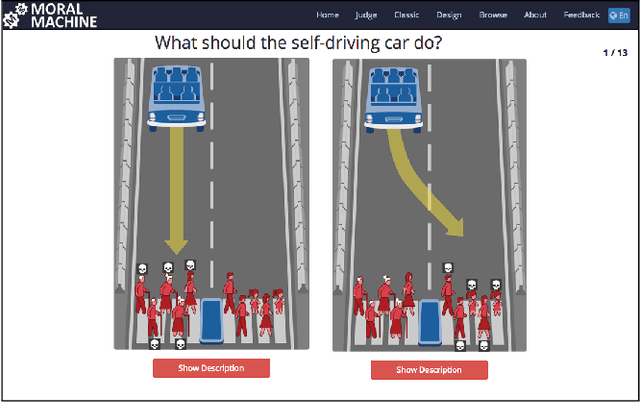

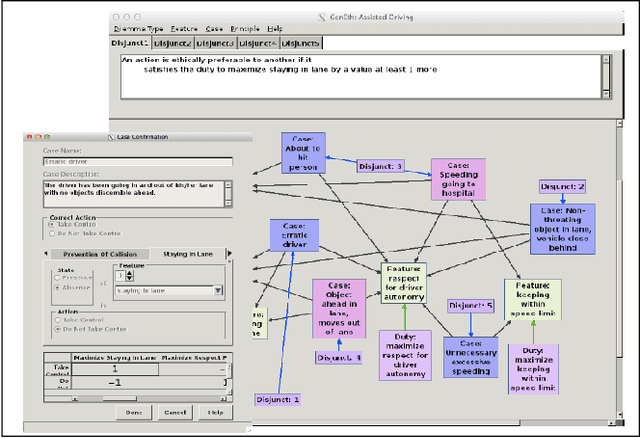

Abstract: An important step in the development of value alignment (VA) systems in AI is understanding how VA can reflect valid ethical principles. We propose that designers of VA systems incorporate ethics by utilizing a hybrid approach in which both ethical reasoning and empirical observation play a role. This, we argue, avoids committing the "naturalistic fallacy," which is an attempt to derive "ought" from "is," and it provides a more adequate form of ethical reasoning when the fallacy is not committed. Using quantified modal logic, we precisely formulate principles derived from deontological ethics and show how they imply particular "test propositions" for any given action plan in an AI rule base. The action plan is ethical only if the test proposition is empirically true, a judgment that is made on the basis of empirical VA. This permits empirical VA to integrate seamlessly with independently justified ethical principles.

Grounding Value Alignment with Ethical Principles

Jul 11, 2019

Abstract: An important step in the development of value alignment (VA) systems in AI is understanding how values can interrelate with facts. Designers of future VA systems will need to utilize a hybrid approach in which ethical reasoning and empirical observation interrelate successfully in machine behavior. In this article we identify two problems about this interrelation that have been overlooked by AI discussants and designers. The first problem is that many AI designers inadvertently commit a version of what moral philosophers have called the "naturalistic fallacy," that is, they attempt to derive an "ought" from an "is." We illustrate when and why this occurs. The second problem is that AI designers adopt training routines that fail to fully simulate human ethical reasoning in the integration of ethical principles and facts. Using concepts of quantified modal logic, we proceed to offer an approach that promises to simulate ethical reasoning in humans by connecting ethical principles on the one hand and propositions about states of affairs on the other.

Mimetic vs Anchored Value Alignment in Artificial Intelligence

Oct 25, 2018

Abstract: "Value alignment" (VA) is considered one of the top priorities in AI research. Much of the existing research focuses on the "A" part and not the "V" part of "value alignment." This paper corrects that neglect by emphasizing the "value" side of VA and analyzes VA from the vantage point of requirements in value theory, in particular, of avoiding the "naturalistic fallacy"--a major epistemic caveat. The paper begins by isolating two distinct forms of VA: "mimetic" and "anchored." Then it discusses which VA approach better avoids the naturalistic fallacy. The discussion reveals stumbling blocks for VA approaches that neglect implications of the naturalistic fallacy. Such problems are more serious in mimetic VA since the mimetic process imitates human behavior that may or may not rise to the level of correct ethical behavior. Anchored VA, including hybrid VA, in contrast, holds more promise for future VA since it anchors alignment by normative concepts of intrinsic value.
