Thomas Courtat

Compression of Recurrent Neural Networks using Matrix Factorization

Oct 19, 2023

Abstract: Compressing neural networks is a key step when deploying models for real-time or embedded applications. Factorizing the model's matrices using low-rank approximations is a promising method for achieving compression. While it is possible to set the rank before training, this approach is neither flexible nor optimal. In this work, we propose a post-training rank-selection method called Rank-Tuning that selects a different rank for each matrix. Used in combination with training adaptations, our method achieves high compression rates with little or no performance degradation. Our numerical experiments on signal processing tasks show that we can compress recurrent neural networks up to 14x with at most 1.4% relative performance reduction.
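To make the idea of low-rank factorization concrete, here is a minimal sketch using a truncated SVD in NumPy. It is not the Rank-Tuning procedure, which the abstract does not describe in detail. The function names `factorize` and `compression_ratio`, the matrix size, and the chosen rank are all assumptions made for illustration.

```python
import numpy as np

def factorize(weight, rank):
    """Return (a, b) such that a @ b is the best rank-`rank` approximation of weight."""
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    a = u[:, :rank] * s[:rank]   # shape (m, rank), singular values folded in
    b = vt[:rank, :]             # shape (rank, n)
    return a, b

def compression_ratio(shape, rank):
    """Parameters of the dense matrix divided by parameters of the two factors."""
    m, n = shape
    return (m * n) / (rank * (m + n))

rng = np.random.default_rng(0)
# Hypothetical weight matrix that is close to rank 8, plus small noise.
w = rng.standard_normal((256, 8)) @ rng.standard_normal((8, 512))
w += 0.01 * rng.standard_normal((256, 512))

a, b = factorize(w, rank=8)
rel_err = np.linalg.norm(w - a @ b) / np.linalg.norm(w)
ratio = compression_ratio(w.shape, rank=8)
print(f"relative error: {rel_err:.4f}, compression: {ratio:.1f}x")
```

Replacing an m x n matrix with factors of shapes m x r and r x n stores r(m + n) values instead of mn, so the saving grows as the rank shrinks. A per-matrix rank choice, as the abstract proposes, would call something like `factorize` with a different `rank` for each matrix.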

A light neural network for modulation detection under impairments

Mar 27, 2020

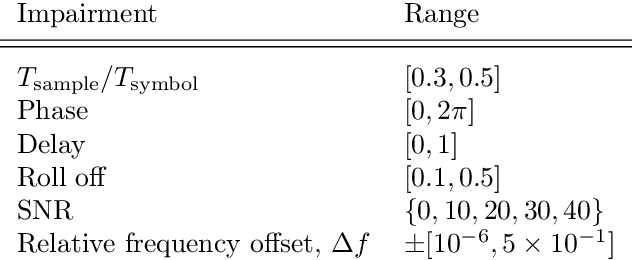

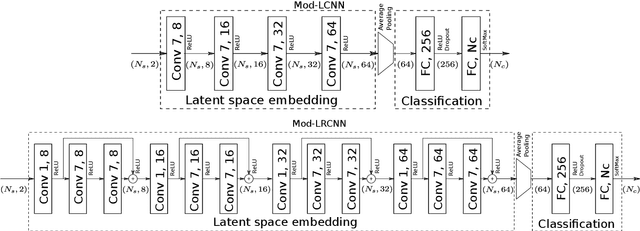

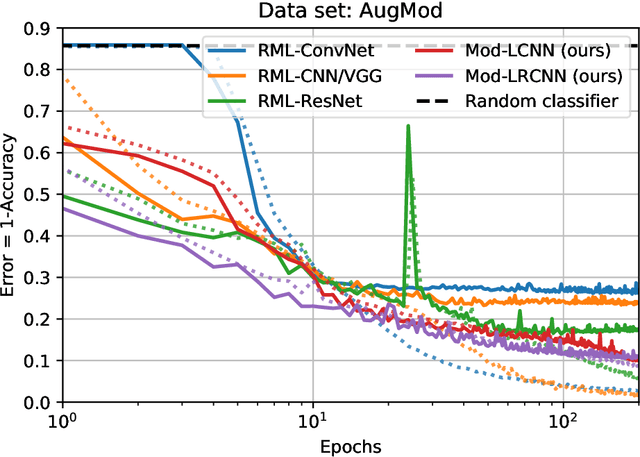

Abstract: We present a neural network architecture able to efficiently detect modulation techniques in a portion of I/Q signals. This network is lighter by up to two orders of magnitude than other architectures working on the same or similar tasks. Moreover, the number of parameters does not depend on the signal duration, which allows processing streams of data and results in a signal-length invariant network. In addition, we develop a custom simulator able to model the different impairments that the propagation channel and the demodulator can bring to the recorded I/Q signal: random phase shifts, delays, roll-off, sampling rates, and frequency offsets. We use this data set to train our neural network to be invariant to impairments and to quantify its accuracy at distinguishing between modulations under realistic conditions.
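As an illustration of two of the impairments listed above, the following sketch applies a constant phase shift and a frequency offset to complex I/Q samples. It is not the authors' simulator. The function name `apply_impairments`, the QPSK test signal, and all parameter values are assumptions chosen for the example.

```python
import numpy as np

def apply_impairments(iq, phase, freq_offset, sample_rate):
    """Rotate complex I/Q samples by a constant phase and a frequency offset (Hz)."""
    n = np.arange(iq.size)
    rotation = np.exp(1j * (phase + 2 * np.pi * freq_offset * n / sample_rate))
    return iq * rotation

rng = np.random.default_rng(0)
# Hypothetical QPSK symbols, one sample per symbol, unit magnitude.
symbols = rng.integers(0, 4, size=1000)
clean = np.exp(1j * (np.pi / 4 + np.pi / 2 * symbols))

impaired = apply_impairments(
    clean,
    phase=rng.uniform(0, 2 * np.pi),  # random phase shift
    freq_offset=50.0,                 # assumed offset in Hz
    sample_rate=1e4,                  # assumed sample rate in Hz
)
```

Both impairments only rotate each sample in the complex plane, so magnitudes are unchanged while the constellation points drift. Delays, roll-off, and sampling-rate changes would need filtering and resampling steps that this sketch leaves out.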
