Themistoklis Prodromakis

An Adiabatic Capacitive Artificial Neuron with RRAM-based Threshold Detection for Energy-Efficient Neuromorphic Computing

Feb 02, 2022

Abstract: In the quest for low-power, bio-inspired computation, both memristive and memcapacitive Artificial Neural Networks (ANNs) have received increasing attention for the hardware implementation of neuromorphic computing. Going a step further, regenerative capacitive neural networks, which call for adiabatic computing, offer a tantalising route towards even lower energy consumption, especially when combined with 'memimpedance' elements. Here, we present an artificial neuron featuring adiabatic synapse capacitors that produce membrane potentials for the neuron somas; the somas are implemented as dynamic latched comparators augmented with Resistive Random-Access Memory (RRAM) devices. Our initial 4-bit adiabatic capacitive neuron proof of concept shows a 90% synaptic energy saving. At 4 synapses/soma we already observe an overall 35% energy reduction. Furthermore, evaluating the impact of process and temperature on the 4-bit adiabatic synapse shows a maximum energy variation of 30% at 100 °C across the corners, without any loss of functionality. Finally, the efficacy of our adiabatic approach to ANNs is tested for 512 and 1024 synapses/neuron under worst- and best-case synapse loading conditions and with variable equalising capacitances, quantifying the expected trade-off between equalisation capacitance and the range of optimal power-clock frequencies vs. loading (i.e. the percentage of active synapses).
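The energy savings reported above rest on adiabatic charging. As a rough, generic illustration (not the authors' circuit), the sketch below integrates the textbook RC model: a capacitor C charged through a resistance R by an abrupt step dissipates about ½CV² in R, whereas a slow ramp of duration T ≫ RC dissipates only about (RC/T)·CV². The function name `dissipated_energy`, the linear ramp shape (real power clocks are often trapezoidal or sinusoidal) and the component values are hypothetical assumptions chosen for illustration.

```python
def dissipated_energy(r, c, v, t_ramp, steps_per_tau=1000, settle_taus=20.0):
    """Energy (J) dissipated in resistor r while a source ramping linearly
    from 0 to v over t_ramp seconds charges capacitor c through r.
    t_ramp == 0 models conventional abrupt (step) charging.
    Hypothetical helper: a generic RC model, not the paper's synapse circuit."""
    tau = r * c
    dt = tau / steps_per_tau               # small step for forward Euler
    t_end = t_ramp + settle_taus * tau     # let the capacitor fully settle
    vc = 0.0                               # capacitor voltage
    energy = 0.0
    t = 0.0
    while t < t_end:
        # Assumed source waveform: linear ramp, then held at v
        vs = v if t_ramp == 0 else v * min(t / t_ramp, 1.0)
        i = (vs - vc) / r                  # current through the resistor
        energy += i * i * r * dt           # I^2 R losses over this step
        vc += i * dt / c                   # dVc/dt = i / C
        t += dt
    return energy


if __name__ == "__main__":
    R, C, V = 1e3, 1e-12, 1.0              # hypothetical values: tau = 1 ns
    step = dissipated_energy(R, C, V, 0.0)
    ramp = dissipated_energy(R, C, V, 100 * R * C)
    print(f"step: {step:.3e} J, ramp: {ramp:.3e} J, ratio: {ramp / step:.3f}")
```

With T = 100·RC the analytic estimate predicts that the ramp dissipates roughly 2% of the step-charging energy; slowing the ramp further trades speed for lower loss, which is the same frequency-versus-energy tension the abstract explores through its power-clock frequency sweep.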

A system of different layers of abstraction for artificial intelligence

Jul 22, 2019

Abstract: The field of artificial intelligence (AI) represents an enormous endeavour of humankind that is currently transforming our societies down to their very foundations. Its task, building truly intelligent systems, is underpinned by a vast array of subfields ranging from the development of new electronic components to mathematical formulations of highly abstract and complex reasoning. This breadth of subfields often makes it difficult to understand how they all fit together into a bigger picture, and it hides the multi-faceted, multi-layered conceptual structure that, in a sense, can be said to be what AI truly is. In this perspective we propose a system of five levels/layers of abstraction that underpin many AI implementations. We further posit that each layer is subject to a complexity-performance trade-off, whilst different layers are interlocked with one another in a control-complexity trade-off. This overview provides a conceptual map that can help to identify how and where innovation should be targeted in order to achieve different levels of functionality, assure them for safety, optimise performance under various operating constraints and map the opportunity space for social and economic exploitation.

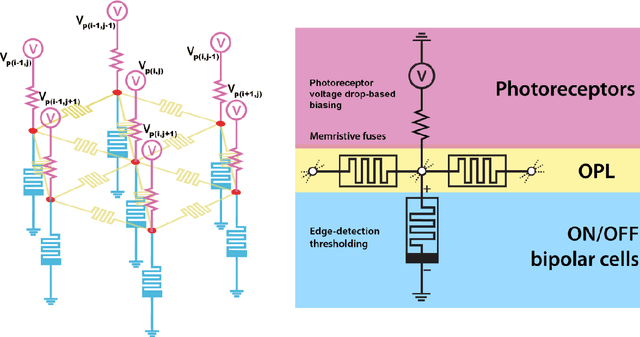

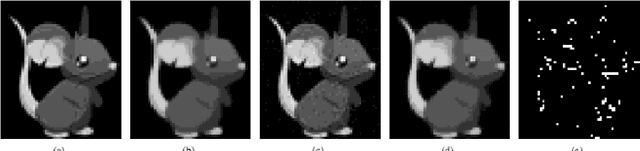

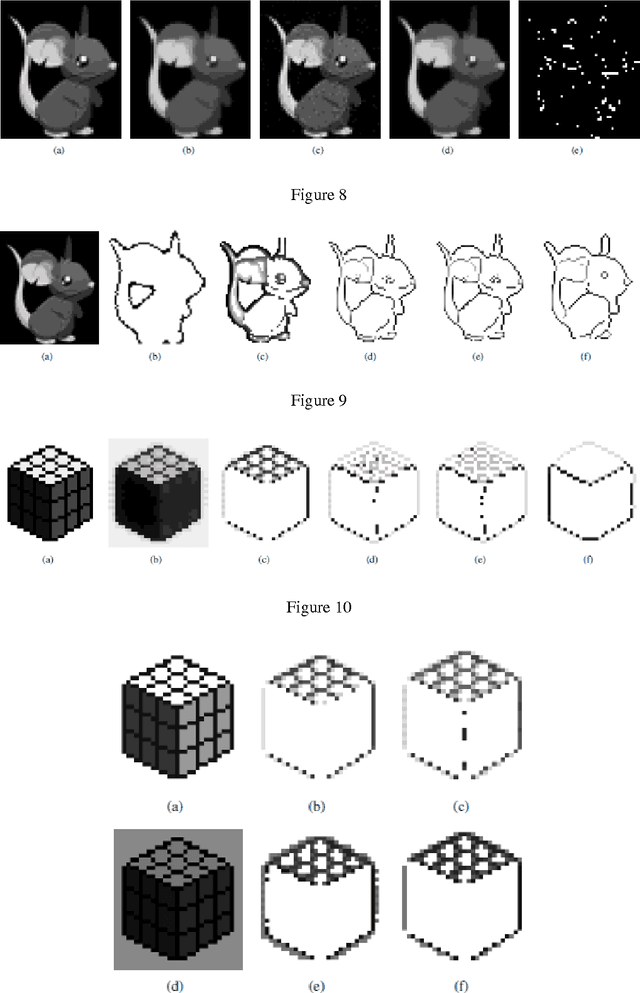

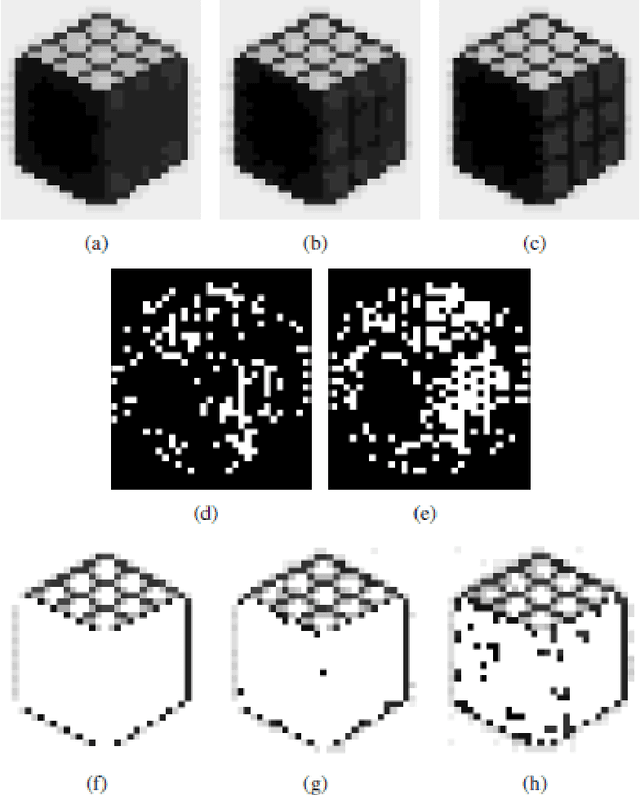

A Biomimetic Model of the Outer Plexiform Layer by Incorporating Memristive Devices

Dec 03, 2011

Abstract: In this paper we present a biorealistic model for the first stage of early vision processing that incorporates memristive nanodevices. The architecture of the proposed network is based on the organisation and functioning of the outer plexiform layer (OPL) in the vertebrate retina. We demonstrate that memristive devices are indeed a valuable building block for neuromorphic architectures, as their highly non-linear and adaptive response could be exploited to establish ultra-dense networks with dynamics similar to their biological counterparts. In particular, we show that hexagonal memristive grids can faithfully emulate the smoothing effect that occurs at the OPL to enhance the dynamic range of the system. In addition, we employ a memristor-based thresholding scheme to detect the edges of grayscale images. The proposed system is also evaluated for its adaptation and fault-tolerance capacity under different light or noise conditions, as well as different device yields.
