
Thanima Pilantanakitti

Action Recognition in the Frequency Domain

Sep 02, 2014

Figures and Tables:

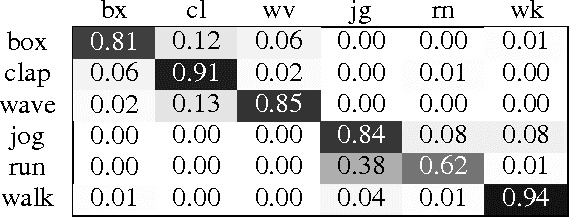

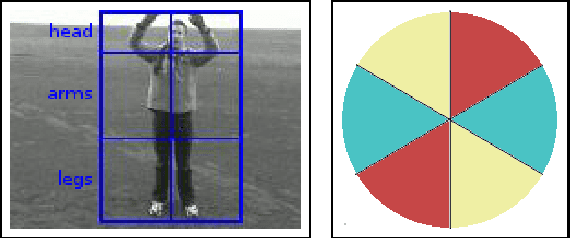

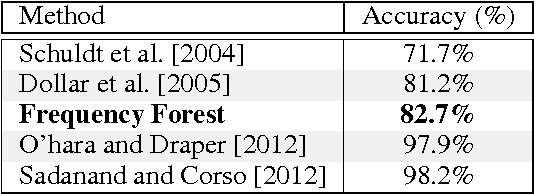

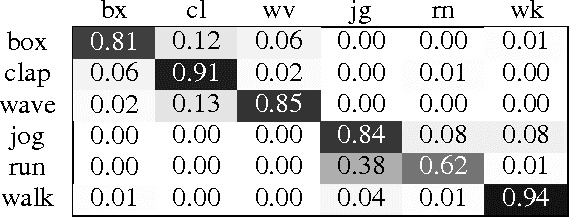

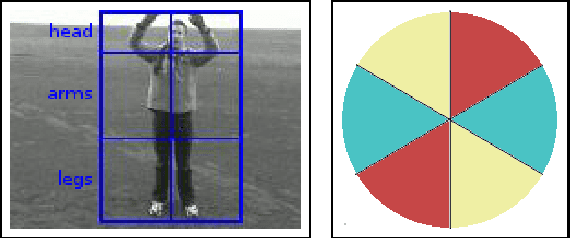

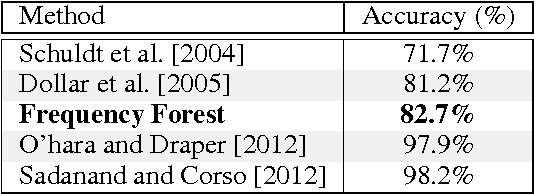

Abstract: In this paper, we describe a simple strategy for mitigating variability in temporal data series by shifting focus onto long-term, frequency-domain features that are less susceptible to variability. We apply this method to the human action recognition task and demonstrate how working in the frequency domain can yield good recognition features for commonly used optical flow and articulated pose features, which are highly sensitive to small differences in motion, viewpoint, dynamic backgrounds, occlusion and other sources of variability. We show how these frequency-based features can be used in combination with a simple forest classifier to achieve good and robust results on the popular KTH Actions dataset.
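To make the idea of frequency-domain features concrete, here is a minimal sketch in plain Python. It is an illustration only, not the authors' pipeline: the abstract does not specify the exact features, so the function names `magnitude_spectrum` and `frequency_features`, the choice of keeping the first 8 non-DC magnitude bins, and the toy sinusoidal "motion" signal are all assumptions made for this example. It shows one property that plausibly motivates the approach: the magnitude spectrum of a periodic signal does not change when the signal is shifted in time.

```python
import cmath
import math


def magnitude_spectrum(series):
    """Return normalized DFT magnitudes for bins 0..N//2 (naive O(N^2) DFT)."""
    n = len(series)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(series)
        )
        spectrum.append(abs(coeff) / n)
    return spectrum


def frequency_features(series, num_bins=8):
    """Hypothetical feature vector: the first num_bins non-DC magnitudes."""
    mags = magnitude_spectrum(series)
    return mags[1:num_bins + 1]


# Toy per-frame measurement (assumed): 4 full cycles over 64 frames.
n_frames = 64
signal = [math.sin(2 * math.pi * 4 * t / n_frames) for t in range(n_frames)]

# The same motion observed with a 5-frame circular time offset.
shift = 5
shifted = signal[shift:] + signal[:shift]

feat_a = frequency_features(signal)
feat_b = frequency_features(shifted)

# Largest per-bin difference between the two feature vectors.
max_diff = max(abs(a - b) for a, b in zip(feat_a, feat_b))

# Frequency bin (in cycles per clip) with the largest magnitude.
dominant_bin = 1 + max(range(len(feat_a)), key=feat_a.__getitem__)

print("dominant bin:", dominant_bin)
print("max feature difference under time shift:", max_diff)
```

In the raw time domain the two signals differ frame by frame, while their magnitude features agree up to floating-point error. Feature vectors of this kind could then be passed to a forest classifier, as the abstract describes; that step is not sketched here.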

* Keywords: Artificial Intelligence, Computer Vision, Action Recognition
