Théis Bazin

Spectrogram Inpainting for Interactive Generation of Instrument Sounds

Apr 15, 2021

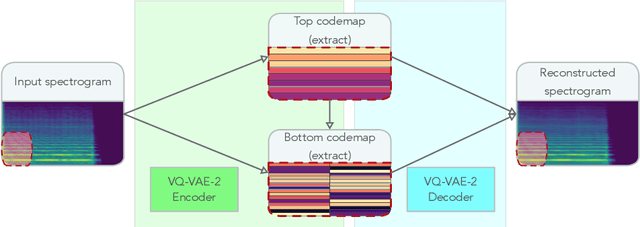

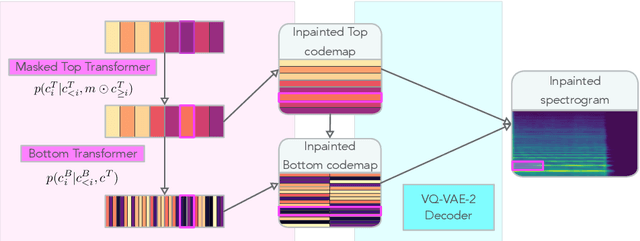

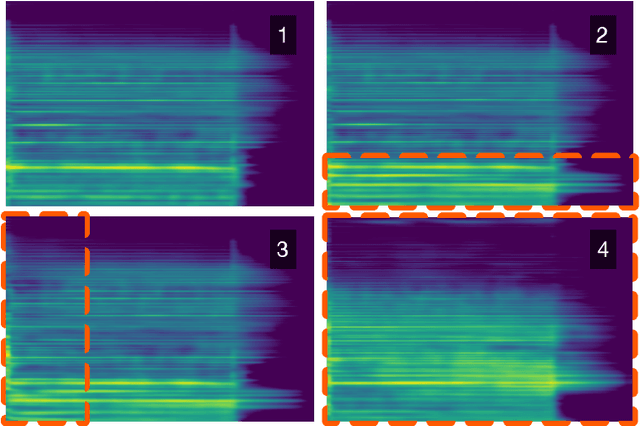

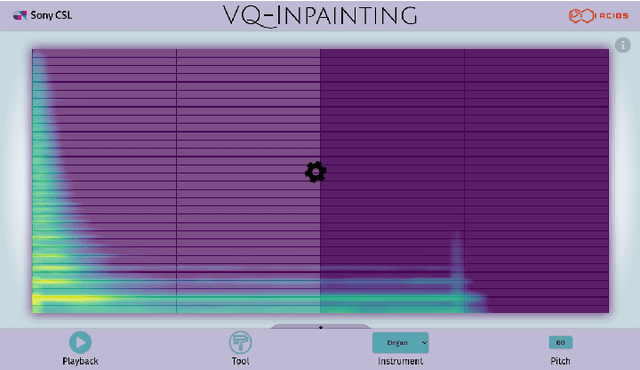

Abstract: Modern approaches to sound synthesis using deep neural networks are hard to control, especially when fine-grained conditioning information is not available, which hinders their adoption by musicians. In this paper, we cast the generation of individual instrumental notes as an inpainting-based task, introducing novel ways to iteratively shape sounds. To this end, we propose a two-step approach: first, we adapt the VQ-VAE-2 image generation architecture to spectrograms in order to convert real-valued spectrograms into compact discrete codemaps; we then implement token-masked Transformers for the inpainting-based generation of these codemaps. We apply the proposed architecture to masked resampling tasks on the NSynth dataset. Most crucially, we open-source an interactive web interface to transform sounds by inpainting, for artists and practitioners alike, opening up new, creative uses.

* 8 pages + references + appendices. 4 figures. Published as a conference paper at the 2020 Joint Conference on AI Music Creativity, October 19-23, 2020, organized and hosted virtually by the Royal Institute of Technology (KTH), Stockholm, Sweden
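
The two-step idea in the abstract above (quantize into a discrete codemap, then resample only a masked region) can be illustrated with a minimal NumPy sketch. This is not the paper's implementation: `quantize`, `inpaint`, and `uniform_logits` are hypothetical names, the codebook is random rather than learned by a VQ-VAE-2, and the uniform predictor merely stands in for a trained token-masked Transformer.

```python
import numpy as np


def quantize(features, codebook):
    """Map each feature vector to the index of its nearest codebook entry.

    features: (H, W, D) array, codebook: (K, D) array.
    Returns an (H, W) integer codemap.
    """
    diffs = features[:, :, None, :] - codebook[None, None, :, :]
    dists = (diffs ** 2).sum(axis=-1)  # squared Euclidean distances, (H, W, K)
    return dists.argmin(axis=-1)


def inpaint(codemap, mask, predict_logits, rng):
    """Resample only the masked positions, one at a time; keep the rest fixed."""
    out = codemap.copy()
    for i, j in zip(*np.nonzero(mask)):
        logits = predict_logits(out, mask, (i, j))  # shape (K,)
        probs = np.exp(logits - logits.max())       # numerically stable softmax
        probs /= probs.sum()
        out[i, j] = rng.choice(len(probs), p=probs)
    return out


def uniform_logits(codemap, mask, position, num_codes=8):
    # Assumption: placeholder for a trained model; every code is equally likely.
    return np.zeros(num_codes)


rng = np.random.default_rng(0)
codebook = rng.normal(size=(8, 4))      # K=8 codes of dimension D=4 (random, not learned)
features = rng.normal(size=(6, 5, 4))   # stand-in for encoder outputs
codemap = quantize(features, codebook)

mask = np.zeros(codemap.shape, dtype=bool)
mask[2:4, 1:3] = True                   # region the user wants to regenerate
result = inpaint(codemap, mask, uniform_logits, rng)
```

In this sketch, every position outside `mask` is left untouched, which is the property that makes local, iterative editing possible.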

NONOTO: A Model-agnostic Web Interface for Interactive Music Composition by Inpainting

Jul 23, 2019

Abstract: Inpainting-based generative modeling enables stimulating human-machine interactions by letting users perform stylistically coherent local edits to an object using a statistical model. We present NONOTO, a new interface for interactive music generation based on inpainting models. It is aimed both at researchers, by offering a simple and flexible API that lets them connect their own models to the interface, and at musicians, by providing industry-standard features such as audio playback, real-time MIDI output and straightforward synchronization with DAWs using Ableton Link.
