
Terry Windeatt

A unifying approach on bias and variance analysis for classification

Jan 12, 2021

Figures and Tables:

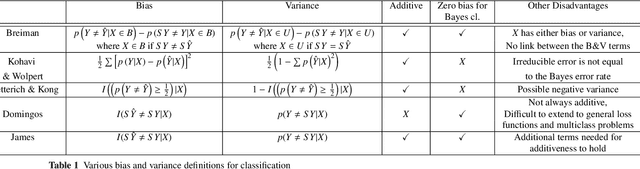

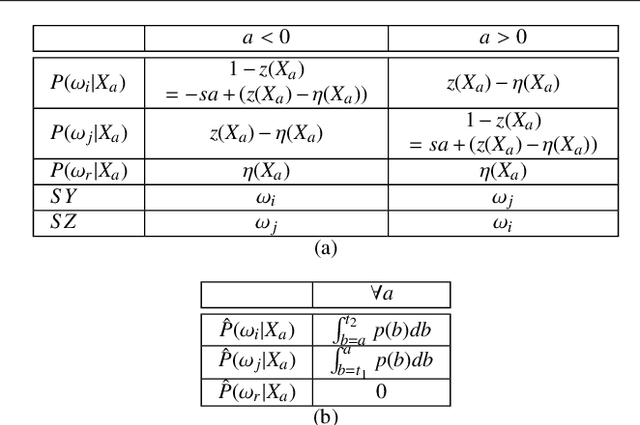

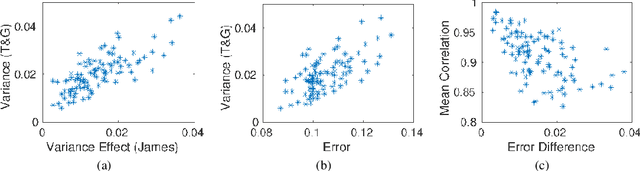

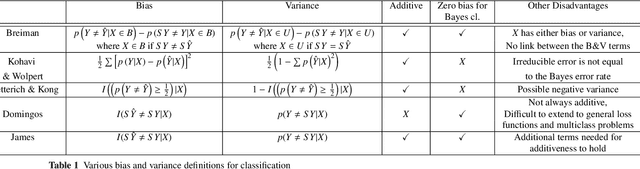

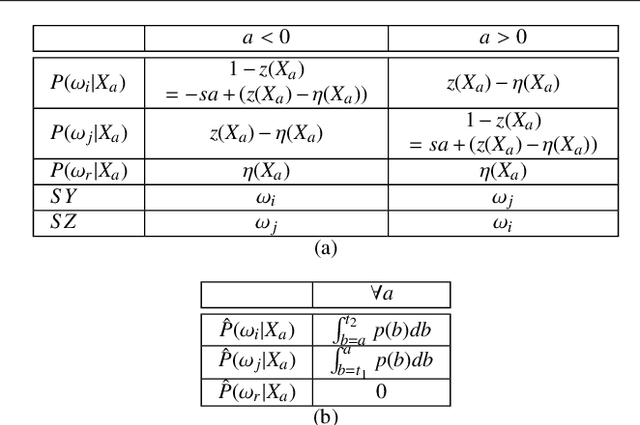

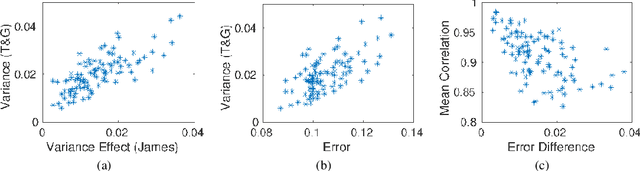

Abstract: Standard bias and variance (B&V) terminologies were originally defined for the regression setting and their extensions to classification have led to several different models / definitions in the literature. In this paper, we aim to provide the link between the commonly used frameworks of Tumer & Ghosh (T&G) and James. By unifying the two approaches, we relate the B&V defined for the 0/1 loss to the standard B&V of the boundary distributions given for the squared error loss. The closed form relationships provide a deeper understanding of classification performance, and their use is demonstrated in two case studies.

* 17 pages, 3 figures
