Tathagata Banerjee

Predicting Mood Disorder Symptoms with Remotely Collected Videos Using an Interpretable Multimodal Dynamic Attention Fusion Network

Sep 07, 2021

Abstract: We developed a novel, interpretable multimodal classification method to identify symptoms of mood disorders, namely depression, anxiety, and anhedonia, using audio, video, and text collected from a smartphone application. We used CNN-based unimodal encoders to learn dynamic embeddings for each modality and then combined these through a transformer encoder. We applied these methods to a novel dataset, collected by a smartphone application, covering 3002 participants across up to three recording sessions. Our method demonstrated better multimodal classification performance than existing methods that employed static embeddings. Lastly, we used SHapley Additive exPlanations (SHAP) to prioritize important features in our model that could serve as potential digital markers.
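To make the fusion step concrete, the following is a minimal sketch of attention-based fusion over per-modality embeddings. It is not the authors' architecture: a real transformer encoder adds learned query/key/value projections, multiple heads, feed-forward layers, and normalization, all omitted here. The function names `softmax` and `attention_fuse`, and the toy embedding values, are assumptions made for illustration only.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(embeddings):
    """Fuse modality embeddings with single-head scaled dot-product self-attention.

    embeddings: dict mapping a modality name to a list of floats (all same length).
    Returns (fused, attention), where attention[q][k] is the weight that
    modality q places on modality k. Queries, keys, and values are the raw
    embeddings themselves (identity projections), which is a simplification.
    """
    names = list(embeddings)
    dim = len(embeddings[names[0]])
    scale = math.sqrt(dim)
    attention = {}
    attended = []
    for q in names:
        # Similarity of modality q to every modality, scaled by sqrt(dim).
        scores = [
            sum(a * b for a, b in zip(embeddings[q], embeddings[k])) / scale
            for k in names
        ]
        weights = softmax(scores)
        attention[q] = dict(zip(names, weights))
        # Attention-weighted mixture of all modality embeddings.
        attended.append(
            [sum(w * embeddings[k][j] for w, k in zip(weights, names)) for j in range(dim)]
        )
    # Mean-pool the attended vectors into one fused representation.
    fused = [sum(vec[j] for vec in attended) / len(attended) for j in range(dim)]
    return fused, attention


# Toy embeddings (hypothetical values, not from the paper).
toy = {
    "audio": [0.2, 0.1, 0.4],
    "video": [0.5, 0.3, 0.1],
    "text": [0.1, 0.9, 0.2],
}
fused, attention = attention_fuse(toy)
```

The attention weights returned alongside the fused vector give a rough view of how much each modality draws on the others, which is one reason attention-based fusion is often paired with interpretability tools.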

A Generalizable Method for Automated Quality Control of Functional Neuroimaging Datasets

Dec 20, 2019

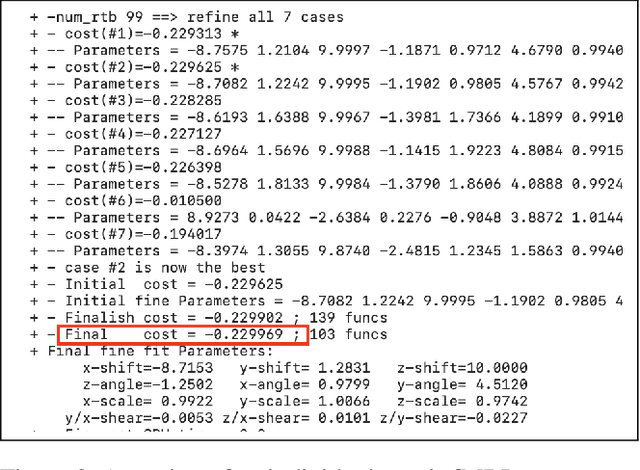

Abstract: Over the last twenty-five years, advances in the collection and analysis of fMRI data have enabled new insights into the brain basis of human health and disease. Individual behavioral variation can now be visualized at a neural level as patterns of connectivity among brain regions. Functional brain imaging is enhancing our understanding of clinical psychiatric disorders by revealing ties between regional and network abnormalities and psychiatric symptoms. Initial success in this arena has recently motivated collection of larger datasets, which are needed to leverage fMRI to generate brain-based biomarkers to support development of precision medicines. Despite methodological advances and enhanced computational power, evaluating the quality of fMRI scans remains a critical step in the analytical framework. Before analysis can be performed, expert reviewers visually inspect raw scans and preprocessed derivatives to determine viability of the data. This Quality Control (QC) process is labor-intensive, and the inability to automate it at large scale has proven to be a limiting factor in clinical neuroscience fMRI research. We present a novel method for automating the QC of fMRI scans. We train machine learning classifiers using features derived from brain MR images to predict the "quality" of those images, based on the ground truth of an expert's opinion. We emphasize the importance of these classifiers' ability to generalize their predictions across data from different studies. To address this, we propose a novel approach entitled "FMRI preprocessing Log mining for Automated, Generalizable Quality Control" (FLAG-QC), in which features derived from mining runtime logs are used to train the classifier. We show that classifiers trained on FLAG-QC features perform much better (AUC=0.79) than classifiers trained on previously proposed feature sets (AUC=0.56) when testing their ability to generalize across studies.
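The cross-study evaluation described in this abstract can be illustrated with a small sketch: hold out all scans from one study, train on the rest, and score the held-out predictions with AUC. The abstract does not specify the exact protocol, so the leave-one-study-out split below is an assumption, and the helper names `roc_auc` and `leave_one_study_out` are hypothetical. The classifier itself is left out; any model that produces a quality score per scan would slot in.

```python
from collections import defaultdict


def roc_auc(labels, scores):
    """AUC as the probability that a random positive outscores a random negative.

    labels: iterable of 0/1 ground-truth values (1 = positive class).
    scores: iterable of classifier scores, higher meaning more likely positive.
    Ties between a positive and a negative count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


def leave_one_study_out(study_ids):
    """Yield (held_out_study, train_indices, test_indices) for each study.

    Every scan from the held-out study goes to the test set, so the score
    reflects generalization to a study the classifier has never seen.
    """
    by_study = defaultdict(list)
    for index, study in enumerate(study_ids):
        by_study[study].append(index)
    for held_out in sorted(by_study):
        test_idx = by_study[held_out]
        train_idx = [i for s, idx in by_study.items() if s != held_out for i in idx]
        yield held_out, train_idx, test_idx


# Toy check of the AUC helper (values are illustrative, not from the paper).
example_auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])  # 0.75

# Toy split over three hypothetical studies.
splits = list(leave_one_study_out(["A", "A", "B", "C"]))
```

An AUC near 0.5 on held-out studies, like the 0.56 reported for earlier feature sets, indicates predictions barely better than chance on unseen studies, which is why splitting by study rather than by scan matters for this kind of claim.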
