Tanay Rastogi

Workplace Location Choice Model based on Deep Neural Network

Oct 02, 2025

Abstract: Discrete choice models (DCMs) have long been used to analyze workplace location decisions, but they face challenges in accurately mirroring individual decision-making processes. This paper presents a deep neural network (DNN) method for modeling workplace location choices that aims to capture complex decision patterns and yields better results than traditional DCMs. The study demonstrates that DNNs show significant potential as a robust alternative to DCMs in this domain. While both models effectively replicate the impact of job opportunities on workplace location choices, the DNN outperforms the DCM in certain aspects. However, the DCM aligns better with the data when assessing the influence of individual attributes on workplace distance. Notably, DCMs excel at shorter distances, while for longer distances DNNs perform comparably to both the data and the DCMs. These findings underscore the importance of selecting the appropriate model based on the specific requirements of each workplace location choice application.
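For readers less familiar with DCMs, the sketch below computes choice probabilities with a multinomial logit (MNL), the most common DCM form. The abstract does not say which DCM specification the paper uses, so MNL is an assumption here, and the zone attributes and coefficients are hypothetical values chosen only for illustration. A DNN-based choice model commonly keeps the same softmax output but replaces the linear utility with a learned nonlinear function of the attributes.

```python
import math
from typing import Dict, List, Sequence


def mnl_probabilities(utilities: Sequence[float]) -> List[float]:
    """Multinomial logit: P(j) = exp(V_j) / sum_k exp(V_k)."""
    # Shift by the largest utility for numerical stability; probabilities are unchanged.
    v_max = max(utilities)
    exps = [math.exp(v - v_max) for v in utilities]
    total = sum(exps)
    return [e / total for e in exps]


def linear_utility(beta: Dict[str, float], attrs: Dict[str, float]) -> float:
    """Systematic utility V_j = sum_m beta_m * x_jm (linear in parameters)."""
    return sum(beta[name] * attrs[name] for name in beta)


# Hypothetical candidate workplace zones: log job opportunities and commute distance (km).
zones = {
    "A": {"log_jobs": math.log(5000), "distance_km": 4.0},
    "B": {"log_jobs": math.log(20000), "distance_km": 15.0},
    "C": {"log_jobs": math.log(1000), "distance_km": 1.5},
}
# Hypothetical coefficients for illustration, not estimates from the paper.
beta = {"log_jobs": 1.0, "distance_km": -0.15}

names = list(zones)
probs = mnl_probabilities([linear_utility(beta, zones[z]) for z in names])
for zone, p in zip(names, probs):
    print(f"P({zone}) = {p:.3f}")
```

With these made-up numbers, zone B offers the most jobs but loses probability to zone A because of its longer commute, which is the kind of trade-off between job opportunities and distance that both model families try to reproduce.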

Target Population Synthesis using CT-GAN

Oct 01, 2025

Abstract: Agent-based models used in scenario planning for transportation and urban planning usually require detailed population information for both base and target scenarios. These populations are usually provided by synthesizing artificial agents through deterministic population synthesis methods. However, these methods face several challenges, such as handling high-dimensional data, scalability, and zero-cell issues, particularly when generating populations for target scenarios. This research examines how a deep generative model, the Conditional Tabular Generative Adversarial Network (CT-GAN), can be used to create target populations either directly from a collection of marginal constraints or through a hybrid method that combines CT-GAN with Fitness-based Synthesis Combinatorial Optimization (FBS-CO). The proposed population synthesis models are evaluated against travel survey data and zonal-level aggregated population data. Results indicate that the stand-alone CT-GAN model performs best when compared with FBS-CO and the hybrid model. On its own, CT-GAN can create realistic-looking populations that match single-variable distributions, but it struggles to preserve relationships between multiple variables. The hybrid model, however, improves on FBS-CO by leveraging CT-GAN's ability to generate a descriptive base population, which is then refined using FBS-CO to align with target-year marginals. This study demonstrates that CT-GAN is an effective methodology for synthesizing target populations and highlights how deep generative models can be successfully integrated with conventional synthesis techniques to enhance their performance.
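The abstract does not define FBS-CO in detail, so as a rough illustration of the general idea of combinatorial optimization for population synthesis, the sketch below selects records from a candidate pool so that the synthetic population matches target marginals. The fitness (total absolute error over all marginals) and the search (hill climbing with single-record swaps) are assumptions, and the actual FBS-CO fitness function and search strategy may differ. In the hybrid model described above, the pool would be the base population generated by CT-GAN; the pool records and targets here are hypothetical.

```python
import random
from collections import Counter


def marginal_error(population, targets):
    """Total absolute error between the population's counts and the target marginals.

    `targets` maps attribute -> {category: target count}.
    """
    error = 0
    for attr, target_counts in targets.items():
        counts = Counter(person[attr] for person in population)
        for category, target in target_counts.items():
            error += abs(counts.get(category, 0) - target)
    return error


def combinatorial_optimization(pool, targets, size, iterations=5000, seed=0):
    """Choose `size` records from `pool` (with replacement) to fit `targets`.

    Hill climbing: swap one randomly chosen record for a random pool record and
    keep the swap only if the marginal error does not increase.
    """
    rng = random.Random(seed)
    population = [rng.choice(pool) for _ in range(size)]
    best = marginal_error(population, targets)
    for _ in range(iterations):
        if best == 0:
            break  # perfect fit to all marginals
        i = rng.randrange(size)
        previous = population[i]
        population[i] = rng.choice(pool)
        error = marginal_error(population, targets)
        if error <= best:
            best = error
        else:
            population[i] = previous  # reject the swap
    return population, best


# Hypothetical pool (e.g. base-population records) and target-year marginals.
pool = [
    {"age": "young", "car": "yes"},
    {"age": "young", "car": "no"},
    {"age": "old", "car": "yes"},
    {"age": "old", "car": "no"},
]
targets = {"age": {"young": 6, "old": 4}, "car": {"yes": 7, "no": 3}}
population, error = combinatorial_optimization(pool, targets, size=10)
print("remaining marginal error:", error)
```

Accepting swaps that leave the error unchanged lets the search move across plateaus instead of stalling; note that this kind of method can only recombine records already present in the pool, which is why a richer generated pool can help.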

Population Synthesis using Incomplete Information

Oct 01, 2025

Abstract: This paper presents a population synthesis model that uses a Wasserstein Generative Adversarial Network (WGAN) trained on incomplete microsamples. Using a mask matrix to represent missing values, the study proposes a WGAN training algorithm that lets the model learn from a training dataset with missing information. The method addresses the challenge of microsamples that lack information on one or more attributes because of privacy concerns or data collection constraints. The paper compares WGAN models trained on incomplete microsamples with those trained on complete microsamples, each used to create a synthetic population. We evaluated the proposed method using a Swedish national travel survey, validating its efficacy by generating synthetic populations from all the models and comparing them with the actual population dataset. The experimental results show that the proposed methodology generates synthetic data that closely resembles both the output of a model trained on complete data and the actual population. The paper contributes a robust solution for population synthesis with incomplete data, opens avenues for future research, and highlights the potential of deep generative models for advancing population synthesis.
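The abstract states that a mask matrix represents missing values but does not give the training algorithm. One plausible reading, assumed here, is that the mask of the real batch is multiplied into both the real and the generated records before they reach the critic, so that missing attributes contribute nothing to the Wasserstein estimate. The sketch shows only that masking step and the standard WGAN critic loss; the generator, the Lipschitz constraint (weight clipping or gradient penalty), and the training loop are omitted, and the batches and toy critic weights are hypothetical.

```python
from typing import Callable, List, Optional, Sequence

Batch = List[List[float]]


def build_mask(batch: Sequence[Sequence[Optional[float]]]) -> Batch:
    """Mask matrix M: M[i][j] = 1.0 if attribute j of record i is observed, else 0.0."""
    return [[0.0 if v is None else 1.0 for v in row] for row in batch]


def fill_missing(batch: Sequence[Sequence[Optional[float]]], fill: float = 0.0) -> Batch:
    """Replace missing entries with a placeholder so the batch is fully numeric."""
    return [[fill if v is None else v for v in row] for row in batch]


def apply_mask(batch: Batch, mask: Batch) -> Batch:
    """Element-wise product with the mask: zeroes out the unobserved entries."""
    return [[x * m for x, m in zip(row, mrow)] for row, mrow in zip(batch, mask)]


def critic_loss(critic: Callable[[List[float]], float], real: Batch, fake: Batch) -> float:
    """WGAN critic loss, mean D(fake) - mean D(real); the critic minimizes it."""
    d_real = sum(critic(x) for x in real) / len(real)
    d_fake = sum(critic(x) for x in fake) / len(fake)
    return d_fake - d_real


# Hypothetical incomplete microsample batch (None = missing) and generator output.
real_batch = [[0.2, None, 1.0], [0.5, 0.3, None]]
fake_batch = [[0.4, 0.9, 0.8], [0.1, 0.6, 0.7]]

mask = build_mask(real_batch)
real_masked = apply_mask(fill_missing(real_batch), mask)
# Reuse the real batch's mask so the critic compares only attributes observed in the data.
fake_masked = apply_mask(fake_batch, mask)


def toy_critic(x: List[float]) -> float:
    """Stand-in linear critic with hypothetical weights."""
    weights = [1.0, -1.0, 0.5]
    return sum(w * v for w, v in zip(weights, x))


loss = critic_loss(toy_critic, real_masked, fake_masked)
print(f"masked critic loss: {loss:.3f}")
```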

* Presented at the 25th Euro Working Group on Transportation (EWGT) Meeting

Model-based traffic state estimation for link traffic using moving cameras

Sep 11, 2023

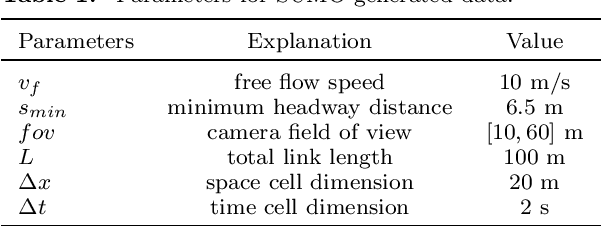

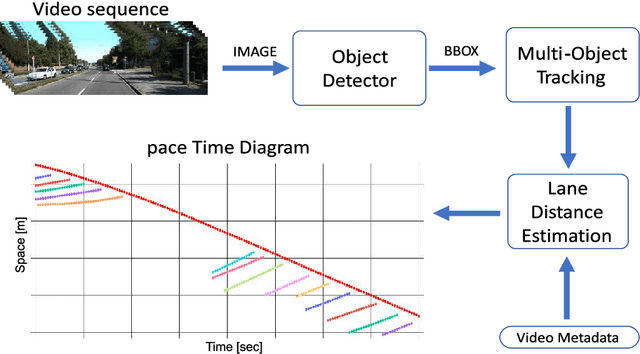

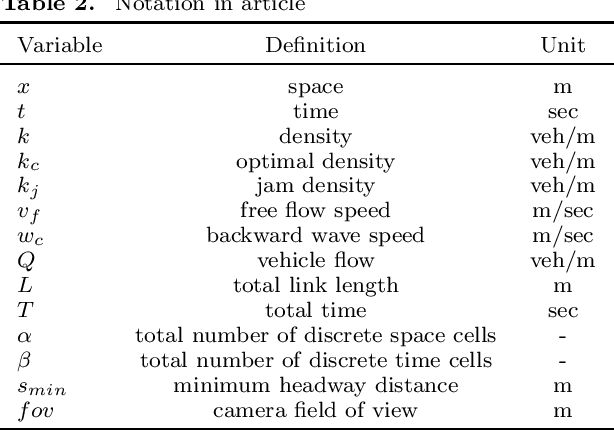

Abstract: Traffic State Estimation (TSE) is the process of inferring traffic conditions from partially observed data using prior knowledge of traffic patterns. The type of input data has a significant impact on both the accuracy and the methodology of TSE. Traditional TSE methods rely on data either from stationary sensors, such as loop detectors, or from mobile sensors, such as GPS-equipped floating cars, and both approaches have limitations. This paper proposes a method for estimating traffic states on a road link using vehicle trajectories obtained from cameras mounted on moving vehicles. Data from multiple moving cameras are combined to construct time-space diagrams, which are then used to estimate the parameters of the link's fundamental diagram (FD) and the densities in unobserved regions of space-time. The Cell Transmission Model (CTM) is used in conjunction with a Genetic Algorithm (GA) to optimize the FD parameters and the boundary conditions required for accurate estimation. To evaluate the methodology, simulated traffic data generated with the SUMO traffic simulator were used, comprising 140 space-time diagrams with varying lane densities and speeds. On the simulated data, the proposed approach achieves a low root mean square error (RMSE) of 0.0079 veh/m, comparable to other CTM-based methods. The proposed TSE method thus opens new avenues for traffic state estimation using an innovative data collection method based on vehicle trajectories collected from on-board cameras.
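To make the estimation pipeline more concrete, the sketch below implements one common form of the Cell Transmission Model: cell densities are updated from the flow across each cell boundary, which is the minimum of the upstream cell's sending capacity (demand) and the downstream cell's receiving capacity (supply). The abstract does not state the FD shape, so a triangular FD is an assumption, and all parameter values and boundary conditions are hypothetical. The GA itself is not shown; one natural fitness for it would be the RMSE below between simulated and observed densities, evaluated for each candidate set of FD parameters and boundary conditions.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class TriangularFD:
    v_free: float  # free-flow speed (m/s)
    w: float       # backward (congestion) wave speed (m/s)
    k_jam: float   # jam density (veh/m)

    @property
    def k_crit(self) -> float:
        # Density where the free-flow and congested branches meet: v*kc = w*(kj - kc).
        return self.w * self.k_jam / (self.v_free + self.w)

    @property
    def q_max(self) -> float:
        # Capacity (veh/s) at the critical density.
        return self.v_free * self.k_crit

    def demand(self, k: float) -> float:
        """Maximum flow a cell with density k can send downstream (veh/s)."""
        return min(self.v_free * k, self.q_max)

    def supply(self, k: float) -> float:
        """Maximum flow a cell with density k can receive from upstream (veh/s)."""
        return min(self.q_max, self.w * (self.k_jam - k))


def ctm_step(k: List[float], fd: TriangularFD, dx: float, dt: float,
             upstream_demand: float, downstream_supply: float) -> List[float]:
    """Advance cell densities by one time step with the Cell Transmission Model."""
    if fd.v_free * dt > dx:
        raise ValueError("CFL condition violated: need v_free * dt <= dx")
    n = len(k)
    # Flow through each of the n + 1 cell boundaries: min(sending, receiving).
    flows = [min(upstream_demand, fd.supply(k[0]))]
    flows += [min(fd.demand(k[i - 1]), fd.supply(k[i])) for i in range(1, n)]
    flows.append(min(fd.demand(k[-1]), downstream_supply))
    # Conservation: density changes by (inflow - outflow) * dt / dx.
    return [k[i] + dt / dx * (flows[i] - flows[i + 1]) for i in range(n)]


def rmse(estimated: List[float], observed: List[float]) -> float:
    """Root mean square error between estimated and observed densities."""
    return math.sqrt(sum((e - o) ** 2 for e, o in zip(estimated, observed)) / len(observed))


# Hypothetical FD parameters and boundary conditions (the quantities a GA would search over).
fd = TriangularFD(v_free=30.0, w=5.0, k_jam=0.15)
densities = [0.02] * 5 + [0.12] * 5  # 10 cells of 30 m: free flow upstream, queue downstream
for _ in range(60):
    densities = ctm_step(densities, fd, dx=30.0, dt=1.0,
                         upstream_demand=0.5, downstream_supply=fd.q_max)
print([round(k, 4) for k in densities])
```

The CFL check matters: with `v_free * dt <= dx`, densities stay between zero and jam density, so any candidate parameter set the GA proposes yields a physically valid simulation.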

Automated Construction of Time-Space Diagrams for Traffic Analysis Using Street-View Video Sequence

Aug 11, 2023

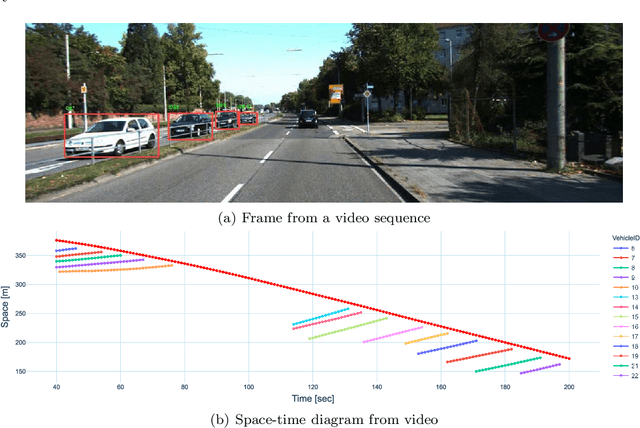

Abstract: Time-space diagrams are essential tools for analyzing traffic patterns and optimizing transportation infrastructure and traffic management strategies. Traditional data collection methods for these diagrams are limited in temporal and spatial coverage. Recent advances in camera technology have helped overcome these limitations and made extensive urban data available. In this study, we propose an approach to constructing time-space diagrams from street-view video sequences captured by cameras mounted on moving vehicles. Using state-of-the-art YOLOv5 (detection), StrongSORT (tracking), and photogrammetry techniques for distance calculation, we infer vehicle trajectories from the video data and generate time-space diagrams. To evaluate the method, we used datasets from the KITTI computer vision benchmark suite. The evaluation results show that our approach can generate trajectories from video data, although some errors remain; these can be mitigated by improving the performance of the detector, tracker, and distance calculation components. In conclusion, street-view video sequences captured by vehicle-mounted cameras, combined with state-of-the-art computer vision techniques, have strong potential for constructing comprehensive time-space diagrams, which offer valuable insights into traffic patterns and support the design of transportation infrastructure and traffic management strategies.
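The abstract does not specify which photogrammetry technique is used for distance calculation. A common simple approach, assumed here, is the pinhole camera model: a vehicle of known real-world height H whose bounding box is h pixels tall lies roughly Z = f * H / h metres from the camera, where f is the focal length in pixels. Adding Z to the camera vehicle's own position gives one time-space point per frame. The bounding boxes and track identities would come from the detector and tracker (YOLOv5 and StrongSORT in the paper); neither is called here, and the focal length, car height, and track values are hypothetical (for KITTI, the focal length would be read from the dataset's calibration files).

```python
from typing import Dict, List, Tuple


def distance_from_bbox_height(bbox_height_px: float, focal_length_px: float,
                              object_height_m: float) -> float:
    """Pinhole-camera range estimate: Z = f * H / h."""
    if bbox_height_px <= 0:
        raise ValueError("bounding-box height must be positive")
    return focal_length_px * object_height_m / bbox_height_px


def to_time_space(track: List[Tuple[float, float]], observer_position: Dict[float, float],
                  focal_length_px: float, object_height_m: float) -> List[Tuple[float, float]]:
    """Turn one tracked vehicle into (time, position) points for a time-space diagram.

    track: (timestamp in s, bounding-box height in px) for each frame the vehicle is seen.
    observer_position: position of the camera vehicle along the road (m) at each timestamp.
    Assumes the tracked vehicle is directly ahead in the same direction (no lateral offset).
    """
    return [
        (t, observer_position[t] + distance_from_bbox_height(h, focal_length_px, object_height_m))
        for t, h in track
    ]


# Hypothetical values: focal length in pixels, typical car height, one tracked vehicle.
FOCAL_LENGTH_PX = 720.0
CAR_HEIGHT_M = 1.5
observer = {0.0: 0.0, 0.1: 1.5}      # camera vehicle moving at 15 m/s
track = [(0.0, 54.0), (0.1, 36.0)]   # box shrinks as the gap grows
points = to_time_space(track, observer, FOCAL_LENGTH_PX, CAR_HEIGHT_M)
print(points)  # [(0.0, 20.0), (0.1, 31.5)]
```

Because Z is inversely proportional to the box height, a few pixels of detector error translate into large distance errors for distant vehicles, which is consistent with the abstract's point that better detection and distance calculation would reduce trajectory errors.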
