Syed Talha Bukhari

E1D3 U-Net for Brain Tumor Segmentation: Submission to the RSNA-ASNR-MICCAI BraTS 2021 Challenge

Oct 06, 2021

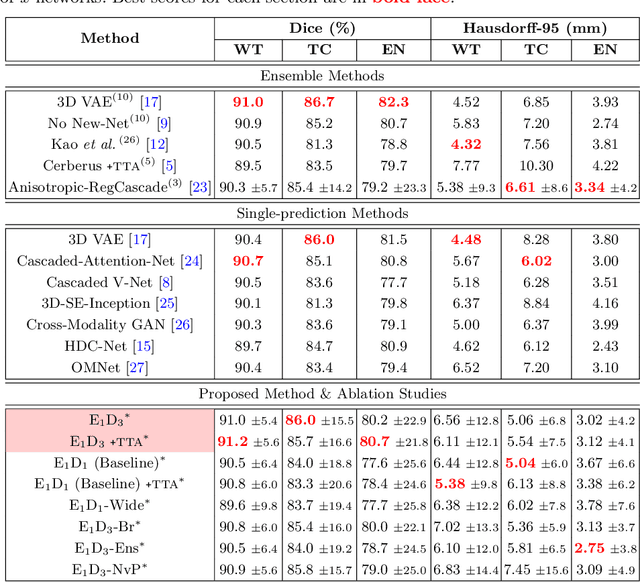

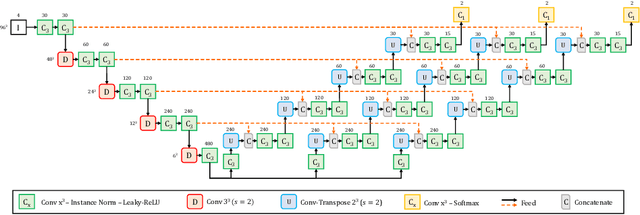

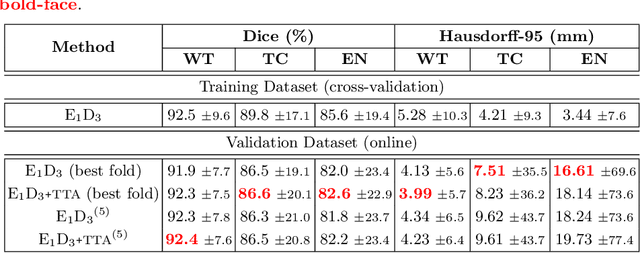

Abstract: Convolutional Neural Networks (CNNs) have demonstrated state-of-the-art performance in medical image segmentation tasks. A common feature of most top-performing CNNs is an encoder-decoder architecture inspired by the U-Net. For multi-region brain tumor segmentation, the 3D U-Net architecture and its variants provide the most competitive segmentation performance. In this work, we propose an extension of the standard 3D U-Net architecture specialized for brain tumor segmentation. The proposed network, called E1D3 U-Net, is a one-encoder, three-decoder fully convolutional neural network architecture in which each decoder segments one of the hierarchical regions of interest: whole tumor, tumor core, and enhancing core. On the BraTS 2018 validation (unseen) dataset, E1D3 U-Net demonstrates single-prediction performance comparable to most state-of-the-art networks in brain tumor segmentation, with reasonable computational requirements and without ensembling. As a submission to the RSNA-ASNR-MICCAI BraTS 2021 challenge, we also evaluate our proposal on the BraTS 2021 dataset. E1D3 U-Net showcases the flexibility of the standard 3D U-Net architecture, which we exploit for the task of brain tumor segmentation.
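Because each of the three decoders targets one nested region, it can help to see how a single multi-class label map relates to those three binary targets. The sketch below assumes the usual BraTS labelling (0 = background, 1 = necrotic/non-enhancing tumor core, 2 = peritumoral edema, 4 = enhancing tumor); the function names are hypothetical, label maps are flattened to plain lists for brevity, and the merge rule is an illustrative choice, not the authors' implementation or post-processing.

```python
# Hypothetical helpers (not from the E1D3 paper), assuming the usual BraTS labels:
# 0 = background, 1 = necrotic/non-enhancing tumor core, 2 = peritumoral edema,
# 4 = enhancing tumor. Label maps are flattened to plain lists for brevity.
WT_LABELS = {1, 2, 4}  # whole tumor
TC_LABELS = {1, 4}     # tumor core
EC_LABELS = {4}        # enhancing core


def labels_to_regions(label_map):
    """Split one multi-class label map into three binary targets (WT, TC, EC),
    one per decoder."""
    wt = [int(v in WT_LABELS) for v in label_map]
    tc = [int(v in TC_LABELS) for v in label_map]
    ec = [int(v in EC_LABELS) for v in label_map]
    return wt, tc, ec


def regions_to_labels(wt, tc, ec):
    """Merge three binary decoder outputs into one label map. The innermost
    positive region wins, so the result always satisfies EC within TC within WT."""
    merged = []
    for w, t, e in zip(wt, tc, ec):
        if e:
            merged.append(4)
        elif t:
            merged.append(1)
        elif w:
            merged.append(2)
        else:
            merged.append(0)
    return merged


labels = [0, 2, 1, 4, 4, 0]
wt, tc, ec = labels_to_regions(labels)
print(wt, tc, ec)  # [0, 1, 1, 1, 1, 0] [0, 0, 1, 1, 1, 0] [0, 0, 0, 1, 1, 0]
print(regions_to_labels(wt, tc, ec) == labels)  # True
```

Giving priority to the innermost region is one simple way to resolve disagreements between independently trained decoders; other schemes (for example, requiring an outer region to be present) are equally plausible.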

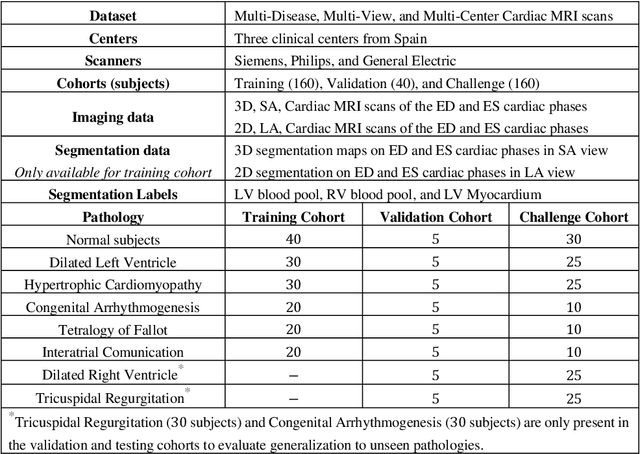

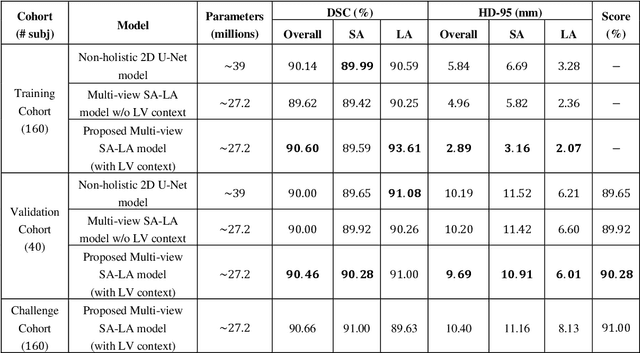

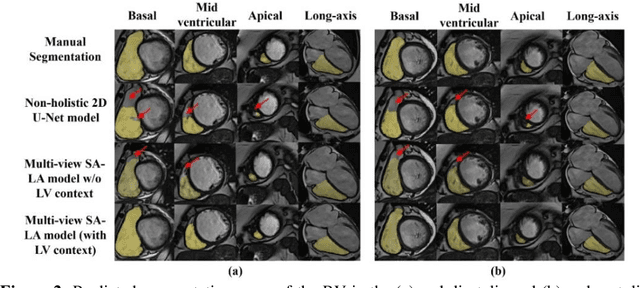

Multi-view SA-LA Net: A framework for simultaneous segmentation of RV on multi-view cardiac MR Images

Oct 01, 2021

Abstract: We propose a multi-view SA-LA model for simultaneous segmentation of the right ventricle (RV) on short-axis (SA) and long-axis (LA) cardiac MR images. The multi-view SA-LA model is a multi-encoder, multi-decoder architecture based on the U-Net model: one encoder-decoder pair segments the RV on SA images and the other pair on LA images. The model assembles a rich set of synergistic features at the root of the encoder branch by combining feature maps learned from matched SA and LA cardiac MR images. Segmentation performance is further enhanced by (1) incorporating the spatial context of the left ventricle (LV) as a prior and (2) performing deep supervision in the last three layers of the decoder branch. The multi-view SA-LA model was extensively evaluated on the MICCAI 2021 Multi-Disease, Multi-View, and Multi-Centre RV Segmentation Challenge dataset (M&Ms-2021), which consists of multi-phase, multi-view cardiac MR images of 360 subjects acquired at four clinical centers using scanners from three different vendors. On the challenge cohort (160 subjects), the proposed model achieved a Dice score (DSC) of 91% and a Hausdorff distance of 11.2 mm on short-axis images, and a DSC of 89.6% and a Hausdorff distance of 8.1 mm on long-axis images. Moreover, the model exhibited strong generalization to unseen RV-related pathologies, including Dilated Right Ventricle (DSC: SA 91.41%, LA 89.63%) and Tricuspid Regurgitation (DSC: SA 91.40%, LA 90.40%), with low variance (DSC standard deviation: SA < 5%, LA < 6%).
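The results above are reported as Dice scores and Hausdorff distances. As a reference point, the sketch below implements the textbook definitions: Dice = 2|A∩B| / (|A| + |B|) on binary masks, and the symmetric (maximum) Hausdorff distance on point sets. The function names are illustrative, and the brute-force distance search is only meant for small inputs; the challenge's official evaluation may use surface points, physical voxel spacing, or a percentile variant, none of which this sketch replicates.

```python
import math


def dice(pred, truth):
    """Dice similarity coefficient 2|A∩B| / (|A| + |B|) for two binary masks
    given as equal-length lists of 0/1. Returns 1.0 when both masks are empty."""
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(pred) + sum(truth)
    return 1.0 if total == 0 else 2.0 * intersection / total


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two non-empty point sets (e.g. voxel
    coordinates already scaled to mm): the largest distance from any point in one
    set to its nearest neighbour in the other set."""
    def directed(x, y):
        return max(min(math.dist(p, q) for q in y) for p in x)
    return max(directed(a, b), directed(b, a))


print(dice([1, 1, 0, 0], [1, 0, 0, 0]))               # 0.666...
print(hausdorff([(0, 0), (1, 0)], [(0, 0), (0, 3)]))  # 3.0
```

Dice rewards overall overlap, while the Hausdorff distance is driven by the single worst boundary error, which is why papers usually report both.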

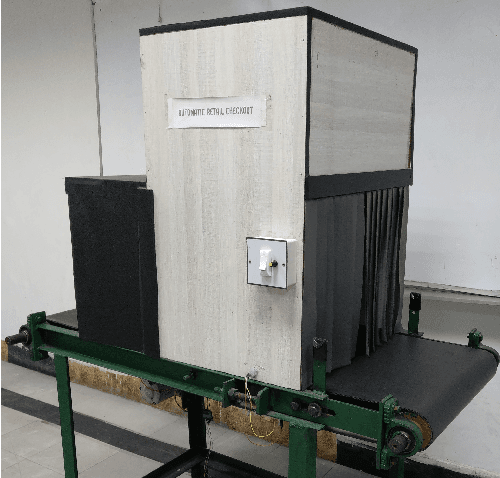

ARC: A Vision-based Automatic Retail Checkout System

Apr 07, 2021

Abstract: Retail checkout systems employed at supermarkets primarily rely on barcode scanners, with some using QR codes, to identify the items being purchased. These methods are time-consuming in practice, require a certain level of human supervision, and involve waiting in long queues. To address this, we propose a system called ARC, which aims to make checkout at retail store counters faster, autonomous, and more convenient, while reducing dependency on a human operator. The approach uses a computer vision-based system, with a Convolutional Neural Network at its core, that scans objects placed beneath a webcam to identify them. To evaluate the proposed system, we curated an image dataset of one hundred local retail items from various categories. Within the given assumptions and considerations, the system achieves reasonable test-time accuracy, pointing towards a promising future for the proposed setup. The project code and the dataset are publicly available.
