Suleiman Ali Khan

SAGE: Structured Attribute Value Generation for Billion-Scale Product Catalogs

Sep 12, 2023

Abstract: We introduce SAGE, a generative LLM for inferring attribute values for products across worldwide e-commerce catalogs. We introduce a novel formulation of the attribute-value prediction problem as a Seq2Seq summarization task across languages, product types, and target attributes. Our modeling approach lifts the restriction of predicting attribute values within a pre-specified set of choices, as well as the requirement that the sought attribute values be explicitly mentioned in the text. SAGE can infer attribute values even when such values are mentioned implicitly using periphrastic language, or not at all, as is the case for common-sense defaults. Additionally, SAGE is capable of predicting whether an attribute is inapplicable for the product at hand, or non-obtainable from the available information. SAGE is the first method able to tackle all aspects of the attribute-value prediction task as they arise in practical settings in e-commerce catalogs. A comprehensive set of experiments demonstrates the effectiveness of the proposed approach, as well as its superiority over state-of-the-art competing alternatives. Moreover, our experiments highlight SAGE's ability to predict attribute values in a zero-shot setting, thereby opening up opportunities for significantly reducing the overall number of labeled examples required for training.

A generalized deep learning model for multi-disease Chest X-Ray diagnostics

Oct 17, 2020

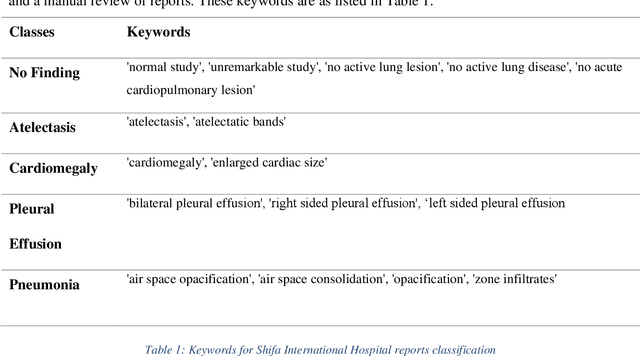

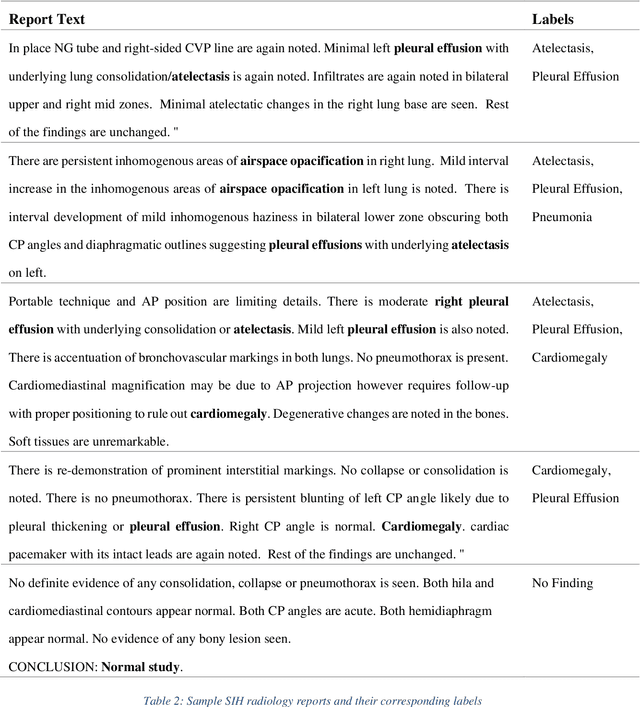

Abstract: We investigate the generalizability of a deep convolutional neural network (CNN) on the task of disease classification from chest x-rays collected over multiple sites. We systematically train the model using datasets from three independent sites with different patient populations: National Institutes of Health (NIH), Stanford University Medical Centre (CheXpert), and Shifa International Hospital (SIH). We formulate a sequential training approach and demonstrate that the model produces generalized prediction performance using held-out test sets from the three sites. Our model generalizes better when trained on multiple datasets, with the CheXpert-Shifa-NET model performing significantly better (p-values < 0.05) than the models trained on individual datasets for 3 out of the 4 distinct disease classes. The code for training the model will be made available as open source at www.github.com/link-to-code at the time of publication.
