Sudhir Agarwal

LLM+Reasoning+Planning for supporting incomplete user queries in presence of APIs

May 21, 2024

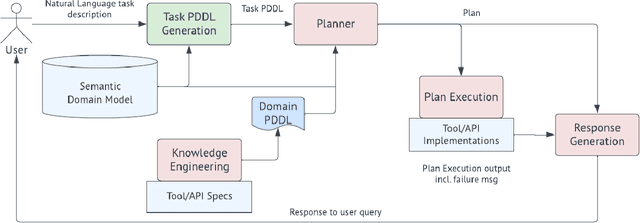

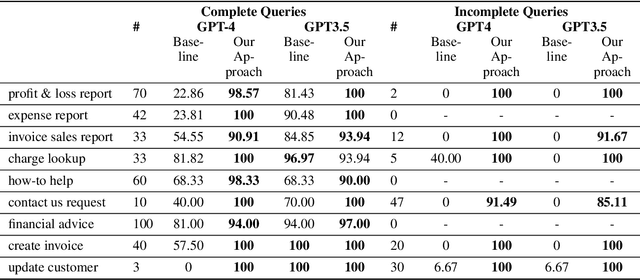

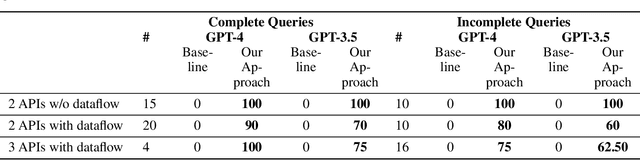

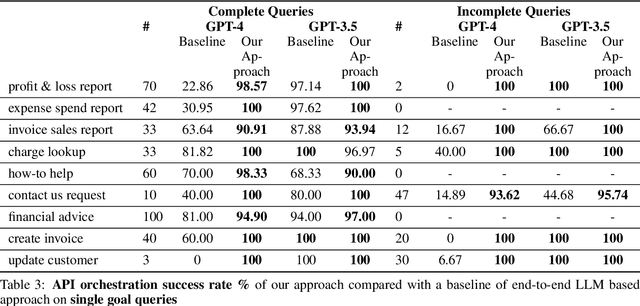

Abstract: The recent availability of Large Language Models (LLMs) has led to the development of numerous LLM-based approaches aimed at providing natural language interfaces for various end-user tasks. These end-user tasks in turn can typically be accomplished by orchestrating a given set of APIs. In practice, natural language task requests (user queries) are often incomplete, i.e., they may not contain all the information required by the APIs. While LLMs excel at natural language processing (NLP) tasks, they frequently hallucinate missing information or struggle with orchestrating the APIs. The key idea behind our proposed approach is to leverage logical reasoning and classical AI planning along with an LLM to answer user queries accurately, including identifying and gathering any information missing from these queries. Our approach uses an LLM and an ASP (Answer Set Programming) solver to translate a user query into a representation in the Planning Domain Definition Language (PDDL) via an intermediate representation in ASP. We introduce a special API "get_info_api" for gathering missing information. We model all the APIs as PDDL actions in a way that supports dataflow between the APIs. Our approach then uses a classical AI planner to generate an orchestration of API calls (including calls to get_info_api) to answer the user query. Our evaluation results show that our approach significantly outperforms a pure LLM-based approach, achieving a success rate of over 95% in most cases on a dataset containing complete and incomplete single-goal and multi-goal queries, where the multi-goal queries may or may not require dataflow among the APIs.
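As a rough illustration of the idea of a "get_info_api" call filling gaps in an incomplete query, the following sketch walks a fixed sequence of APIs and inserts an information-gathering step for every input that is neither given in the query nor produced by an earlier API. This is a simplified greedy stand-in and not the ASP/PDDL planning pipeline described in the abstract; the `Api` class, the `orchestrate` function, and the flight-booking APIs are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Api:
    """Hypothetical API description: a name plus input and output parameter names."""
    name: str
    inputs: tuple
    outputs: tuple


def orchestrate(goal_apis, known, apis):
    """Return an ordered list of calls for the requested APIs.

    A ("get_info_api", param) step is inserted for every input that is
    neither supplied by the user query nor produced by an earlier call.
    """
    available = set(known)
    plan = []
    for name in goal_apis:
        api = apis[name]
        for param in api.inputs:
            if param not in available:
                # Missing information: ask the user instead of guessing a value.
                plan.append(("get_info_api", param))
                available.add(param)
        plan.append((api.name, api.inputs))
        # Outputs become available to later calls (dataflow between APIs).
        available.update(api.outputs)
    return plan


# Assumed example domain, not taken from the paper.
APIS = {
    "search_flight": Api("search_flight", ("origin", "destination", "date"), ("flight_id",)),
    "book_flight": Api("book_flight", ("flight_id", "passenger_name"), ("booking_id",)),
}

# The query supplies origin and destination but omits the date and passenger name.
plan = orchestrate(
    ["search_flight", "book_flight"],
    {"origin": "SFO", "destination": "JFK"},
    APIS,
)
for step in plan:
    print(step)
```

In this sketch, `flight_id` is never requested from the user because `search_flight` produces it, which mirrors the dataflow between APIs that the abstract mentions.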

TIC: Translate-Infer-Compile for accurate 'text to plan' using LLMs and logical intermediate representations

Feb 09, 2024

Abstract: We study the problem of generating plans for given natural language planning task requests. On the one hand, LLMs excel at natural language processing but do not perform well on planning. On the other hand, classical planning tools excel at planning tasks but require input in a structured language such as the Planning Domain Definition Language (PDDL). We leverage the strengths of both techniques by using an LLM to generate the PDDL representation (task PDDL) of planning task requests and then using a classical planner to compute a plan. Unlike previous approaches that use LLMs to generate task PDDLs directly, our approach comprises (a) translate: using an LLM only to generate a logically interpretable intermediate representation of natural language task descriptions, (b) infer: deriving additional logically dependent information from the intermediate representation using a logic reasoner (currently, an Answer Set Programming solver), and (c) compile: generating the target task PDDL from the base and inferred information. We observe that using an LLM to output only the intermediate representation significantly reduces LLM errors. Consequently, the TIC approach achieves, for at least one LLM, high accuracy on task PDDL generation for all seven domains of our evaluation dataset.

A Scalable Technique for Weak-Supervised Learning with Domain Constraints

Jan 12, 2023

Abstract: We propose a novel scalable end-to-end pipeline that uses symbolic domain knowledge as constraints for learning a neural network that classifies unlabeled data in a weakly supervised manner. Our approach is particularly well-suited for settings where the data consists of distinct groups (classes) that lend themselves to clustering-friendly representation learning, and where the domain constraints can be reformulated to allow the use of efficient mathematical optimization techniques that consider multiple training examples at once. We evaluate our approach on a variant of the MNIST image classification problem where a training example consists of image sequences and the sum of the numbers represented by the sequences, and show that our approach scales significantly better than previous approaches that rely on computing all constraint-satisfying combinations for each training example.
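To give a sense of why computing all constraint-satisfying combinations per training example scales poorly, the sketch below enumerates every digit labeling of a group of images whose labels add up to a given sum. It is a deliberately simplified setting (one digit per image, a single sum constraint) and is not the pipeline or the baseline from the paper; the function name `consistent_labelings` is an assumption made for this example.

```python
from itertools import product


def consistent_labelings(num_images, target_sum):
    """Brute-force all digit labelings (0-9 per image) whose sum equals target_sum.

    The search visits 10 ** num_images candidate labelings, so the cost grows
    exponentially with the number of images in one training example.
    """
    return [
        labels
        for labels in product(range(10), repeat=num_images)
        if sum(labels) == target_sum
    ]


# Number of labelings consistent with a sum of 9 for 2, 3, and 4 images.
counts = {n: len(consistent_labelings(n, 9)) for n in (2, 3, 4)}
print(counts)
```

Both the number of candidates to check and the number of consistent labelings grow quickly with the length of the example, which is the scaling bottleneck the abstract refers to.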

Enabling Semantic Analysis of User Browsing Patterns in the Web of Data

Apr 17, 2012

Abstract: A useful step towards better interpretation and analysis of the usage patterns is to formalize the semantics of the resources that users are accessing in the Web. We focus on this problem and present an approach for the semantic formalization of usage logs, which lays the basis for effective techniques of querying expressive usage patterns. We also present a query answering approach, which is useful to find in the logs expressive patterns of usage behavior via formulation of semantic and temporal-based constraints. We have processed over 30 thousand user browsing sessions extracted from usage logs of DBPedia and Semantic Web Dog Food. All these events are formalized semantically using respective domain ontologies and RDF representations of the Web resources being accessed. We show the effectiveness of our approach through experimental results, providing in this way an exploratory analysis of the way users browse the Web of Data.
