Subhrasankar Chatterjee

DreamCatcher: Revealing the Language of the Brain with fMRI using GPT Embedding

Jun 16, 2023

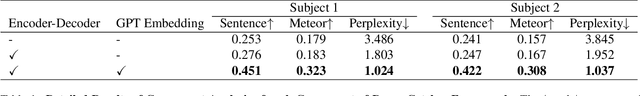

Abstract:The human brain possesses remarkable abilities in visual processing, including image recognition and scene summarization. Efforts have been made to understand the cognitive capacities of the visual brain, but a comprehensive understanding of the underlying mechanisms still needs to be discovered. Advancements in brain decoding techniques have led to sophisticated approaches like fMRI-to-Image reconstruction, which has implications for cognitive neuroscience and medical imaging. However, challenges persist in fMRI-to-image reconstruction, such as incorporating global context and contextual information. In this article, we propose fMRI captioning, where captions are generated based on fMRI data to gain insight into the neural correlates of visual perception. This research presents DreamCatcher, a novel framework for fMRI captioning. DreamCatcher consists of the Representation Space Encoder (RSE) and the RevEmbedding Decoder, which transform fMRI vectors into a latent space and generate captions, respectively. We evaluated the framework through visualization, dataset training, and testing on subjects, demonstrating strong performance. fMRI-based captioning has diverse applications, including understanding neural mechanisms, Human-Computer Interaction, and enhancing learning and training processes.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge