Subhajit Goswami

LASER: A new method for locally adaptive nonparametric regression

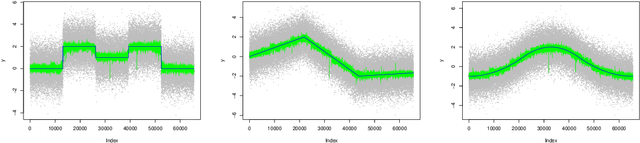

Dec 27, 2024

Abstract: In this article, we introduce LASER (Locally Adaptive Smoothing Estimator for Regression), a computationally efficient locally adaptive nonparametric regression method that performs variable bandwidth local polynomial regression. We prove that it adapts (near-)optimally to the local Hölder exponent of the underlying regression function simultaneously at all points in its domain. Furthermore, we show that there is a single ideal choice of a global tuning parameter under which the above-mentioned local adaptivity holds. Despite the vast literature on nonparametric regression, instances of practicable methods with provable guarantees of such a strong notion of local adaptivity are rare. The proposed method achieves excellent performance across a broad range of numerical experiments in comparison to popular alternative locally adaptive methods.

Spatially Adaptive Online Prediction of Piecewise Regular Functions

Mar 30, 2022

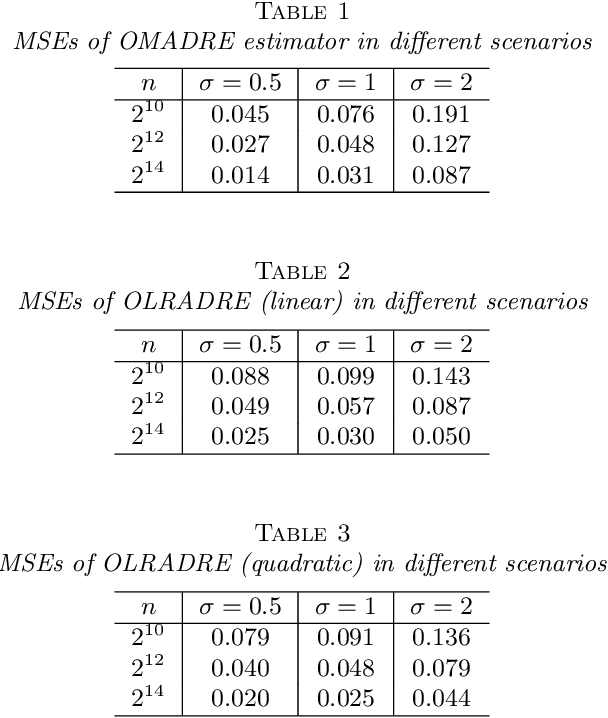

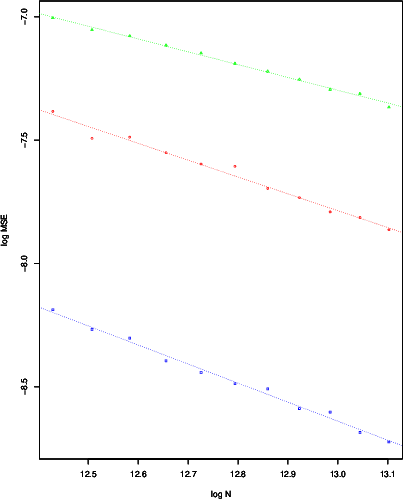

Abstract: We consider the problem of estimating piecewise regular functions in an online setting, i.e., the data arrive sequentially and at any round our task is to predict the value of the true function at the next revealed point using the available data from past predictions. We propose a suitably modified version of a recently developed online learning algorithm called the sleeping experts aggregation algorithm. We show that this estimator satisfies oracle risk bounds simultaneously for all local regions of the domain. As concrete instantiations of the expert aggregation algorithm proposed here, we study an online mean aggregation and an online linear regression aggregation algorithm where experts correspond to the set of dyadic subrectangles of the domain. The resulting algorithms are computable in near-linear time in the sample size. We specifically focus on the performance of these online algorithms in the context of estimating piecewise polynomial and bounded variation function classes in the fixed design setup. The simultaneous oracle risk bounds we obtain for these estimators in this context provide new and improved (in certain aspects) guarantees even in the batch setting and are not available for the state-of-the-art batch learning estimators.

Adaptive Estimation of Multivariate Piecewise Polynomials and Bounded Variation Functions by Optimal Decision Trees

Dec 14, 2019

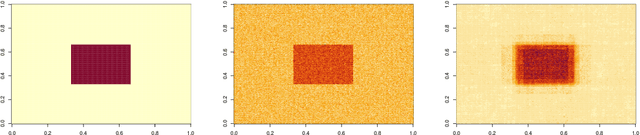

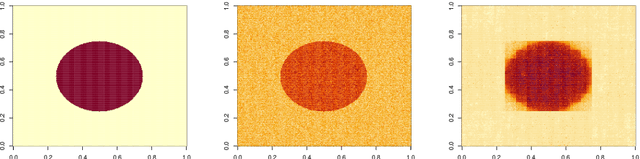

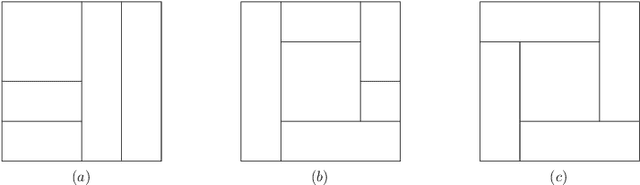

Abstract: Proposed by Donoho (1997), Dyadic CART is a nonparametric regression method which computes a globally optimal dyadic decision tree and fits piecewise constant functions in two dimensions. In this article we define and study Dyadic CART and a closely related estimator, namely Optimal Regression Tree (ORT), in the context of estimating piecewise smooth functions in general dimensions. More precisely, these optimal decision tree estimators fit piecewise polynomials of any given degree. Like Dyadic CART in two dimensions, we reason that these estimators can also be computed in polynomial time in the sample size via dynamic programming. We prove oracle inequalities for the finite sample risk of Dyadic CART and ORT which imply tight risk bounds for several function classes of interest. Firstly, they imply that the finite sample risk of ORT of order $r \geq 0$ is always bounded by $C k \frac{\log N}{N}$ ($N$ is the sample size) whenever the regression function is piecewise polynomial of degree $r$ on some reasonably regular axis-aligned rectangular partition of the domain with at most $k$ rectangles. Beyond the univariate case, such guarantees are scarcely available in the literature for computationally efficient estimators. Secondly, our oracle inequalities uncover minimax rate optimality and adaptivity of the Dyadic CART estimator for function spaces with bounded variation. We consider two function spaces of recent interest where multivariate total variation denoising and univariate trend filtering are the state-of-the-art methods. We show that Dyadic CART enjoys certain advantages over these estimators while still maintaining all their known guarantees.
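To make the dynamic programming idea concrete, here is a minimal sketch of a simplified, univariate, piecewise constant (degree 0) variant of Dyadic CART. It is not the estimator analysed in the paper, which works in general dimensions and with higher degree polynomials. The function name `dyadic_cart_1d`, the penalty parameter `lam`, and the power-of-two length restriction are assumptions made for illustration. The sketch minimises the residual sum of squares plus `lam` times the number of pieces over all recursive dyadic partitions.

```python
from functools import lru_cache


def dyadic_cart_1d(y, lam):
    """Illustrative 1D sketch: best penalised piecewise-constant dyadic fit.

    Minimises (sum of squared residuals) + lam * (number of pieces) over all
    recursive dyadic partitions of range(len(y)). Assumes len(y) is a power
    of two; `lam` is a hypothetical tuning parameter.
    """
    n = len(y)
    assert n > 0 and n & (n - 1) == 0, "length must be a power of two"

    # Prefix sums give the squared error of a constant fit on [lo, hi) in O(1).
    s1, s2 = [0.0], [0.0]
    for v in y:
        s1.append(s1[-1] + v)
        s2.append(s2[-1] + v * v)

    def sse(lo, hi):
        total = s1[hi] - s1[lo]
        return (s2[hi] - s2[lo]) - total * total / (hi - lo)

    @lru_cache(maxsize=None)
    def best(lo, hi):
        # Returns (penalised cost, tuple of pieces) for the interval [lo, hi).
        leaf = (sse(lo, hi) + lam, ((lo, hi),))
        if hi - lo == 1:
            return leaf
        mid = (lo + hi) // 2
        left, right = best(lo, mid), best(mid, hi)
        split = (left[0] + right[0], left[1] + right[1])
        # Keep the interval whole unless splitting is strictly cheaper.
        return leaf if leaf[0] <= split[0] else split

    _, pieces = best(0, n)
    fit = [0.0] * n
    for lo, hi in pieces:
        mean = (s1[hi] - s1[lo]) / (hi - lo)
        for i in range(lo, hi):
            fit[i] = mean
    return fit, list(pieces)
```

Each dyadic interval is visited once, so this sketch does work proportional to the number of dyadic intervals (about `2 * len(y)`) plus the bookkeeping for the piece lists. For example, `dyadic_cart_1d([0, 0, 0, 0, 5, 5, 5, 5], lam=1.0)` recovers the two pieces `(0, 4)` and `(4, 8)`.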

New Risk Bounds for 2D Total Variation Denoising

Feb 23, 2019

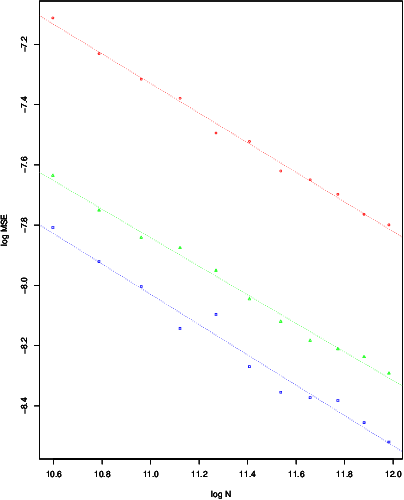

Abstract: 2D Total Variation Denoising (TVD) is a widely used technique for image denoising. It is also an important nonparametric regression method for estimating functions with heterogeneous smoothness. Recent results have shown the TVD estimator to be nearly minimax rate optimal for the class of functions with bounded variation. In this paper, we complement these worst-case guarantees by investigating the adaptivity of the TVD estimator to functions which are piecewise constant on axis-aligned rectangles. We rigorously show that, when the truth is piecewise constant, the ideally tuned TVD estimator performs better than in the worst case. We also study the issue of choosing the tuning parameter. In particular, we propose a fully data-driven version of the TVD estimator which enjoys similar worst-case risk guarantees as the ideally tuned TVD estimator.
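For readers unfamiliar with the estimator, the usual penalised form of 2D TVD can be written as follows. The notation here ($y$ for the observed $n \times n$ array, $\theta$ for the fitted array, $\lambda$ for the tuning parameter) is an assumption for illustration, and the paper may use a different normalisation or a constrained form.

$$
\hat{\theta}_{\lambda} = \operatorname*{arg\,min}_{\theta \in \mathbb{R}^{n \times n}} \; \frac{1}{2} \sum_{i,j} (y_{i,j} - \theta_{i,j})^2 + \lambda \, \mathrm{TV}(\theta),
\qquad
\mathrm{TV}(\theta) = \sum_{i,j} |\theta_{i+1,j} - \theta_{i,j}| + \sum_{i,j} |\theta_{i,j+1} - \theta_{i,j}|,
$$

where each sum in $\mathrm{TV}(\theta)$ runs over the index pairs for which both entries lie in the grid. The penalty charges for differences between horizontally and vertically adjacent entries, so an array that is piecewise constant on a few axis-aligned rectangles has a small $\mathrm{TV}(\theta)$ relative to its size.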
