Su Pang

TransCAR: Transformer-based Camera-And-Radar Fusion for 3D Object Detection

Apr 30, 2023

Abstract: Despite radar's popularity in the automotive industry, for fusion-based 3D object detection, most existing works focus on LiDAR and camera fusion. In this paper, we propose TransCAR, a Transformer-based Camera-And-Radar fusion solution for 3D object detection. Our TransCAR consists of two modules. The first module learns 2D features from surround-view camera images and then uses a sparse set of 3D object queries to index into these 2D features. The vision-updated queries then interact with each other via a transformer self-attention layer. The second module learns radar features from multiple radar scans and then applies a transformer decoder to learn the interactions between radar features and vision-updated queries. The cross-attention layer within the transformer decoder can adaptively learn the soft association between the radar features and vision-updated queries instead of a hard association based on sensor calibration only. Finally, our model estimates a bounding box per query using a set-to-set Hungarian loss, which enables the method to avoid non-maximum suppression. TransCAR improves the velocity estimation using the radar scans without temporal information. Experimental results on the challenging nuScenes dataset show that TransCAR outperforms state-of-the-art camera-radar fusion-based 3D object detection approaches.
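The set-to-set matching idea mentioned in the abstract can be illustrated with a minimal sketch. This is not the TransCAR implementation: the cost values and the function name `match_predictions` are hypothetical, and a brute-force search over permutations stands in for the Hungarian algorithm, which finds the same minimum-cost one-to-one assignment more efficiently.

```python
from itertools import permutations


def match_predictions(cost):
    """Find the minimum-cost one-to-one assignment of ground truths to predictions.

    cost[i][j] is an assumed matching cost between prediction i and
    ground-truth box j. Requires at least as many predictions as ground truths.
    Brute force is used here for clarity; it is only practical for tiny inputs.
    """
    n_pred = len(cost)
    n_gt = len(cost[0]) if cost else 0
    best_total, best_pairs = float("inf"), []
    # Each permutation assigns a distinct prediction to every ground truth.
    for preds in permutations(range(n_pred), n_gt):
        total = sum(cost[p][g] for g, p in enumerate(preds))
        if total < best_total:
            best_total = total
            best_pairs = [(p, g) for g, p in enumerate(preds)]
    return sorted(best_pairs), best_total


# Three predicted boxes (rows), two ground-truth boxes (columns); values are made up.
cost = [
    [0.9, 0.2],
    [0.1, 0.8],
    [0.5, 0.6],
]
pairs, total = match_predictions(cost)
matched = {p for p, _ in pairs}
unmatched = [i for i in range(len(cost)) if i not in matched]
```

Because every ground-truth box is paired with exactly one prediction, the leftover predictions (here `unmatched`) can be supervised as "no object" during training, which is why duplicate suppression at inference is not needed in this kind of formulation.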

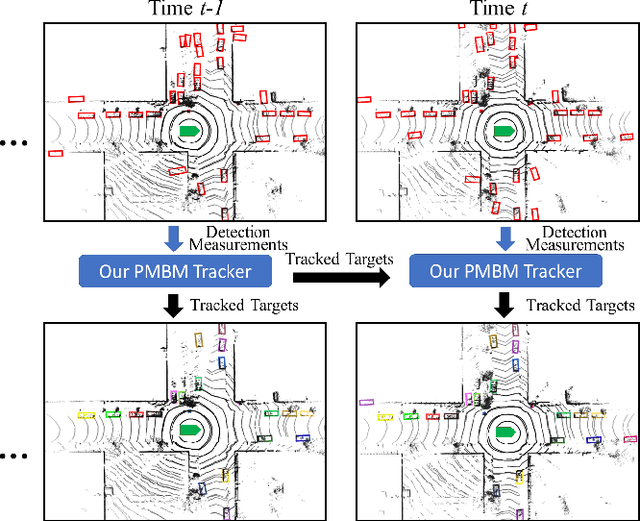

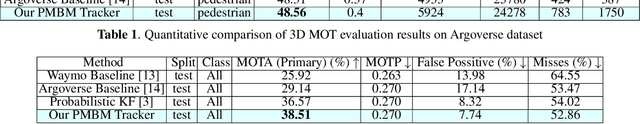

Multi-Object Tracking using Poisson Multi-Bernoulli Mixture Filtering for Autonomous Vehicles

Mar 13, 2021

Abstract: The ability of an autonomous vehicle to perform 3D tracking is essential for safe planning and navigation in cluttered environments. The main challenges for multi-object tracking (MOT) in autonomous driving applications reside in the inherent uncertainties regarding the number of objects, when and where the objects may appear and disappear, and uncertainties regarding objects' states. Random finite set (RFS) based approaches can naturally model these uncertainties accurately and elegantly, and they have been widely used in radar-based tracking applications. In this work, we developed an RFS-based MOT framework for 3D LiDAR data. In particular, we propose a Poisson multi-Bernoulli mixture (PMBM) filter to solve the amodal MOT problem for autonomous driving applications. To the best of our knowledge, this represents a first attempt at employing an RFS-based approach in conjunction with 3D LiDAR data for MOT applications with comprehensive validation using challenging datasets made available by industry leaders. The superior experimental results of our PMBM tracker on public Waymo and Argoverse datasets clearly illustrate that an RFS-based tracker outperforms many state-of-the-art deep learning-based and Kalman filter-based methods, and consequently, these results indicate a great potential for further exploration of RFS-based frameworks for 3D MOT applications.
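One way RFS-style trackers represent uncertainty about whether an object is still present is a per-track existence probability. The sketch below shows the standard Bernoulli update after a scan in which a track receives no detection; it is an illustration under stated assumptions, not the paper's tracker. The function name, the detection probability `p_d = 0.9`, and the starting value `0.8` are all hypothetical.

```python
def missed_detection_update(r, p_d):
    """Existence probability of a Bernoulli track after a missed detection.

    r   : prior probability that the object exists (0 <= r <= 1)
    p_d : assumed probability of detecting an existing object (0 <= p_d < 1)
    """
    # Bayes' rule: the object exists but was missed, divided by the total
    # probability of receiving no detection (missed, or object absent).
    return r * (1.0 - p_d) / (1.0 - r * p_d)


r = 0.8
history = []
for _ in range(3):
    r = missed_detection_update(r, p_d=0.9)
    history.append(r)
```

Each consecutive miss lowers the existence probability, so a track fades out gradually instead of being deleted by a fixed rule.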

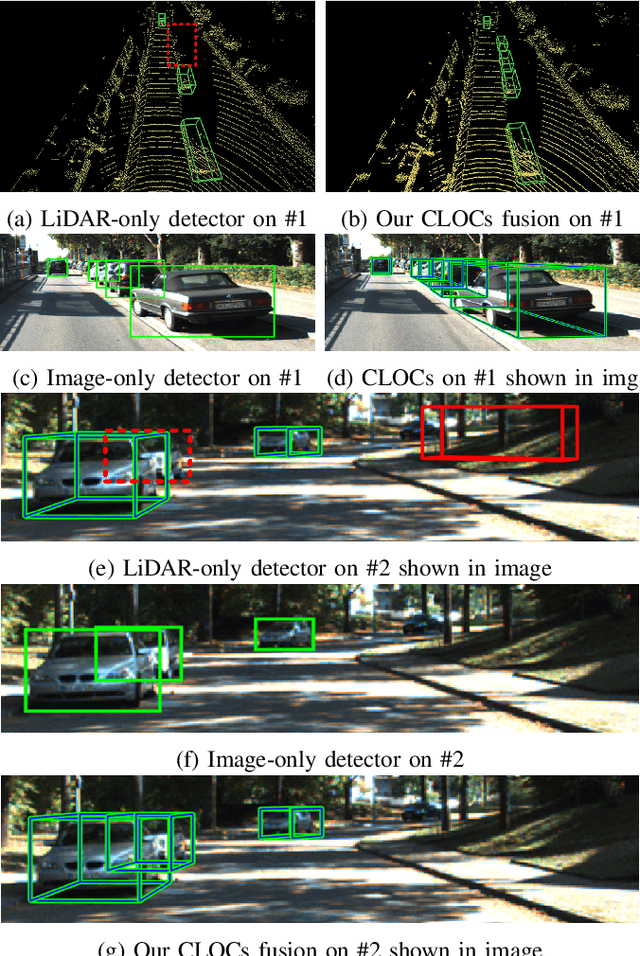

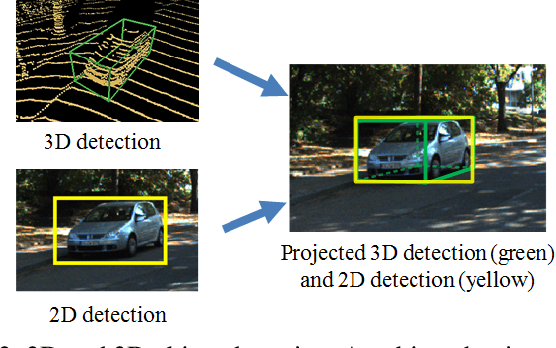

CLOCs: Camera-LiDAR Object Candidates Fusion for 3D Object Detection

Sep 02, 2020

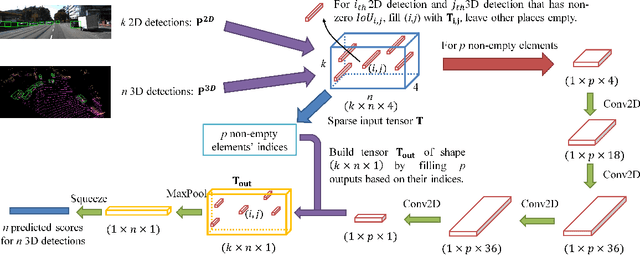

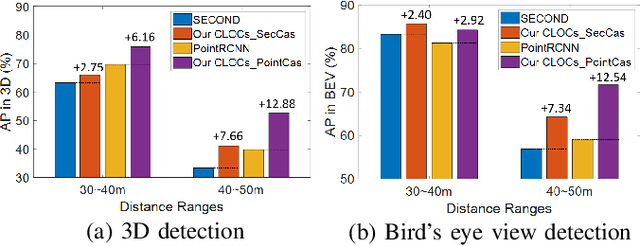

Abstract: There have been significant advances in neural networks for both 3D object detection using LiDAR and 2D object detection using video. However, it has been surprisingly difficult to train networks to effectively use both modalities in a way that demonstrates gain over single-modality networks. In this paper, we propose a novel Camera-LiDAR Object Candidates (CLOCs) fusion network. CLOCs fusion provides a low-complexity multi-modal fusion framework that significantly improves the performance of single-modality detectors. CLOCs operates on the combined output candidates before Non-Maximum Suppression (NMS) of any 2D and any 3D detector, and is trained to leverage their geometric and semantic consistencies to produce more accurate final 3D and 2D detection results. Our experimental evaluation on the challenging KITTI object detection benchmark, including 3D and bird's eye view metrics, shows significant improvements, especially at long distance, over the state-of-the-art fusion-based methods. At the time of submission, CLOCs ranks the highest among all the fusion-based methods in the official KITTI leaderboard. We will release our code upon acceptance.
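A rough sketch of how candidate-level geometric consistency between the two detectors might be measured is given below. It assumes the 3D candidates have already been projected into the image as axis-aligned boxes, and it pairs every 2D candidate with every overlapping projected 3D candidate. The function names, box format, and scores are hypothetical and are not taken from the CLOCs code.

```python
def iou_2d(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def pair_candidates(dets_2d, dets_3d_projected):
    """Collect (i, j, iou, score_2d, score_3d) for every overlapping pair.

    Both inputs are lists of (box, confidence) taken before NMS.
    Pairs with zero overlap are skipped, which keeps the result sparse.
    """
    pairs = []
    for i, (box_2d, score_2d) in enumerate(dets_2d):
        for j, (box_3d, score_3d) in enumerate(dets_3d_projected):
            iou = iou_2d(box_2d, box_3d)
            if iou > 0.0:
                pairs.append((i, j, iou, score_2d, score_3d))
    return pairs


# Made-up candidates: one overlapping pair, the rest disjoint.
dets_2d = [((0, 0, 10, 10), 0.9), ((50, 50, 60, 60), 0.4)]
dets_3d_projected = [((5, 0, 15, 10), 0.7), ((100, 100, 110, 110), 0.3)]
pairs = pair_candidates(dets_2d, dets_3d_projected)
```

Features of this kind (overlap plus both confidence scores) are what a small learned fusion network could consume to rescore the 3D candidates; the network itself is outside the scope of this sketch.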
