Steven Elsworth

ABBA: Adaptive Brownian bridge-based symbolic aggregation of time series

Mar 27, 2020

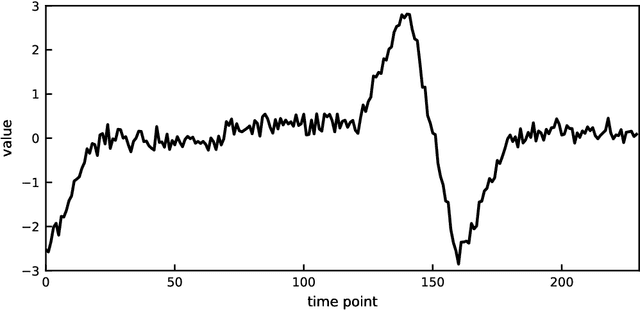

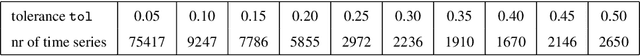

Abstract: A new symbolic representation of time series, called ABBA, is introduced. It is based on an adaptive polygonal chain approximation of the time series into a sequence of tuples, followed by a mean-based clustering to obtain the symbolic representation. We show that the reconstruction error of this representation can be modelled as a random walk with pinned start and end points, a so-called Brownian bridge. This insight allows us to make ABBA essentially parameter-free, apart from the approximation tolerance, which must be chosen by the user. Extensive comparisons with the SAX and 1d-SAX representations are included in the form of performance profiles, showing that ABBA preserves the essential shape information of time series better than these approaches. Advantages and applications of ABBA are discussed, including its in-built differencing property and its use for anomaly detection, and Python implementations are provided.
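To make the two stages named in the abstract concrete, the following is a minimal sketch, not the authors' implementation. It assumes each tuple is a (length, increment) pair, that a segment is extended greedily while its squared error against the data stays below `(length - 1) * tol**2`, and that the clustering is a plain k-means on scaled tuples. The function names `compress`, `reconstruct`, and `digitize` are hypothetical and chosen only for illustration.

```python
import numpy as np


def _line_error(ts, i, j):
    """Squared Euclidean error of the straight line from ts[i] to ts[j]."""
    line = np.linspace(ts[i], ts[j], j - i + 1)
    return float(np.sum((line - ts[i:j + 1]) ** 2))


def compress(ts, tol=0.1):
    """Greedy polygonal chain approximation into (length, increment) tuples.

    Assumption: a segment may grow while its squared error is at most
    (length - 1) * tol**2.
    """
    ts = np.asarray(ts, dtype=float)
    n = len(ts)
    pieces = []
    start, end = 0, 1
    while start < n - 1:
        # Try to extend the current segment by one more point.
        while end + 1 < n and _line_error(ts, start, end + 1) <= (end - start) * tol ** 2:
            end += 1
        pieces.append((end - start, ts[end] - ts[start]))
        start, end = end, end + 1
    return pieces


def reconstruct(pieces, start_value):
    """Rebuild the polygonal chain from the tuples and the first value."""
    values = [float(start_value)]
    for length, inc in pieces:
        base = values[-1]
        values.extend(base + inc * np.arange(1, length + 1) / length)
    return np.array(values)


def digitize(pieces, k, iters=50):
    """Assign one letter per tuple using a small k-means (Lloyd's algorithm)."""
    X = np.asarray(pieces, dtype=float)
    scale = X.std(axis=0)
    scale[scale == 0] = 1.0           # avoid division by zero
    Z = X / scale                     # put lengths and increments on a similar scale
    # Deterministic initialisation: evenly spaced tuples as starting centres.
    centers = Z[np.linspace(0, len(Z) - 1, k).astype(int)].copy()
    for _ in range(iters):
        dists = ((Z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = np.argmin(dists, axis=1)
        for c in range(k):
            if np.any(labels == c):
                centers[c] = Z[labels == c].mean(axis=0)
    symbols = "".join(chr(ord("a") + int(label)) for label in labels)
    return symbols, centers * scale


# Illustrative usage on a synthetic sine wave.
ts = np.sin(np.linspace(0, 4 * np.pi, 200))
pieces = compress(ts, tol=0.1)
symbols, centers = digitize(pieces, k=3)
approx = reconstruct(pieces, ts[0])
print(len(pieces), symbols)
```

Because each tuple stores an increment rather than an absolute value, the increments sum to the difference between the last and first values of the series, so the reconstructed chain built from the exact tuples ends at the true end point. Replacing each tuple by its cluster centre breaks this exactness, and the resulting accumulated deviation is the reconstruction error that the abstract models as a Brownian bridge.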

Time Series Forecasting Using LSTM Networks: A Symbolic Approach

Mar 12, 2020

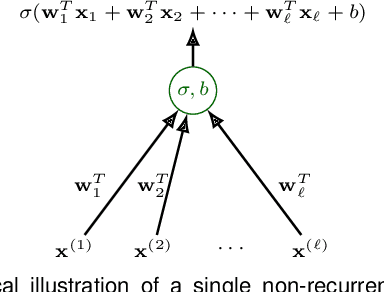

Abstract: Machine learning methods trained on raw numerical time series data exhibit fundamental limitations, such as a high sensitivity to the hyperparameters and even to the initialization of random weights. A combination of a recurrent neural network with a dimension-reducing symbolic representation is proposed and applied to time series forecasting. It is shown that the symbolic representation can help to alleviate some of the aforementioned problems and, in addition, might allow for faster training without sacrificing forecast performance.
