Steven Davenport

Deep CardioSound-An Ensembled Deep Learning Model for Heart Sound MultiLabelling

Apr 20, 2022

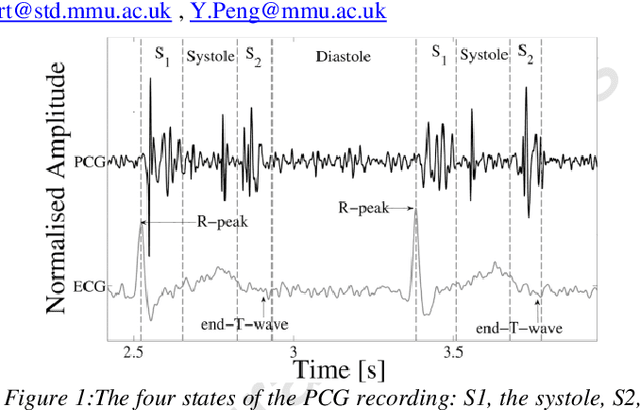

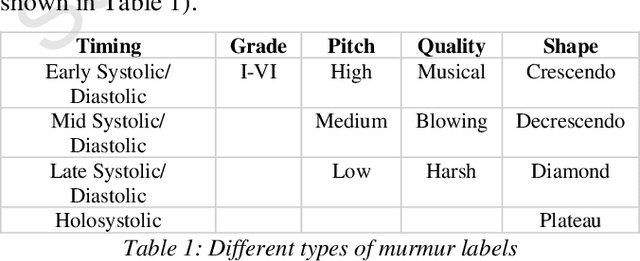

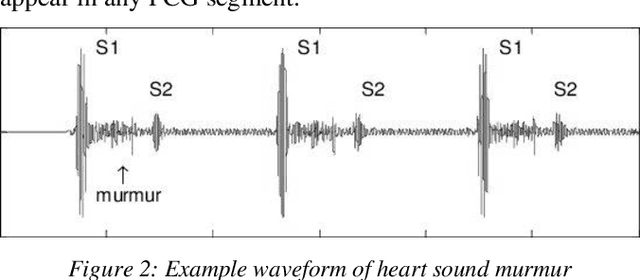

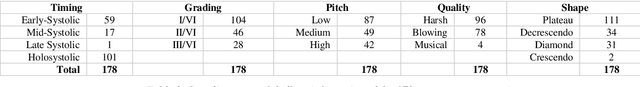

Abstract: Heart sound diagnosis and classification play an essential role in detecting cardiovascular disorders, especially as remote diagnosis becomes standard clinical practice. Most current work is designed for single-category heart sound classification tasks. To extend the landscape of automatic heart sound diagnosis, this work proposes a deep multilabel learning model that can automatically annotate heart sound recordings with labels from different label groups, including murmur timing, pitch, grading, quality, and shape. Our experimental results show that the proposed method achieves outstanding performance on the holdout data for the multi-labelling task, with sensitivity=0.990, specificity=0.999, and F1=0.990 at the segment level, and an overall accuracy=0.969 at the patient recording level.
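For readers unfamiliar with how sensitivity, specificity, and F1 are computed in a multilabel setting, the following is a minimal sketch. It assumes binary indicator vectors per segment and micro-averaging over all label positions; the abstract does not state which averaging scheme the authors used, and the function name `multilabel_metrics` and the toy data are hypothetical, not taken from the paper.

```python
def multilabel_metrics(y_true, y_pred):
    """Micro-averaged sensitivity, specificity, and F1 for multilabel data.

    y_true, y_pred: lists of equal-length 0/1 lists, one row per segment
    and one column per label (an assumed encoding, not the paper's).
    """
    tp = fp = tn = fn = 0
    # Pool every (segment, label) decision into one confusion count.
    for true_row, pred_row in zip(y_true, y_pred):
        for t, p in zip(true_row, pred_row):
            if t == 1 and p == 1:
                tp += 1
            elif t == 0 and p == 1:
                fp += 1
            elif t == 0 and p == 0:
                tn += 1
            else:
                fn += 1

    # Guard against empty denominators so the sketch never divides by zero.
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0
    return {"sensitivity": sensitivity, "specificity": specificity, "f1": f1}


# Toy example: three segments, three hypothetical labels each.
y_true = [[1, 0, 1], [0, 1, 0], [1, 1, 0]]
y_pred = [[1, 0, 0], [0, 1, 0], [1, 1, 1]]
metrics = multilabel_metrics(y_true, y_pred)
print(metrics)
```

In this toy example there are 4 true positives, 3 true negatives, 1 false positive, and 1 false negative, giving sensitivity 0.8, specificity 0.75, and F1 0.8. Macro-averaging (computing each metric per label and then averaging) would be an equally plausible choice and can give different values when labels are imbalanced.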
