Stephan Scheele

CAIPI in Practice: Towards Explainable Interactive Medical Image Classification

Apr 06, 2022

Abstract: Would you trust physicians if they could not explain their decisions to you? Medical diagnostics using machine learning has gained enormously in importance within the last decade. However, without further enhancements, many state-of-the-art machine learning methods are not suitable for medical application. The most important reasons are insufficient data set quality and the black-box behavior of machine learning algorithms such as Deep Learning models. Consequently, end-users cannot correct the model's decisions and the corresponding explanations. The latter is crucial for the trustworthiness of machine learning in the medical domain. The research field of explainable interactive machine learning searches for methods that address both shortcomings. This paper extends the explainable and interactive CAIPI algorithm and provides an interface to simplify human-in-the-loop approaches for image classification. The interface enables the end-user (1) to investigate and (2) to correct the model's prediction and explanation, and (3) to influence the data set quality. After CAIPI optimization with only a single counterexample per iteration, the model achieves an accuracy of $97.48\%$ on the Medical MNIST and $95.02\%$ on the Fashion MNIST. This accuracy is approximately equal to that of state-of-the-art Deep Learning optimization procedures. In addition, CAIPI reduces the labeling effort by approximately $80\%$.

An Interactive Explanatory AI System for Industrial Quality Control

Mar 17, 2022

Abstract: Machine learning based image classification algorithms, such as deep neural network approaches, will be increasingly employed in critical settings such as quality control in industry, where transparency and comprehensibility of decisions are crucial. Therefore, we aim to extend the defect detection task towards an interactive human-in-the-loop approach that allows us to integrate rich background knowledge and the inference of complex relationships going beyond traditional purely data-driven approaches. We propose an approach for an interactive support system for classifications in an industrial quality control setting that combines the advantages of both (explainable) knowledge-driven and data-driven machine learning methods, in particular inductive logic programming and convolutional neural networks, with human expertise and control. The resulting system can assist domain experts with decisions, provide transparent explanations for results, and integrate feedback from users, thus reducing the workload for humans while respecting their expertise and preserving their agency and accountability.

Explanation as a process: user-centric construction of multi-level and multi-modal explanations

Oct 07, 2021

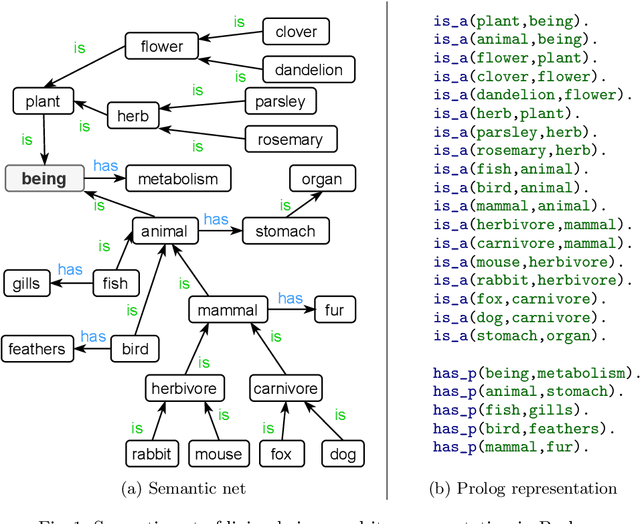

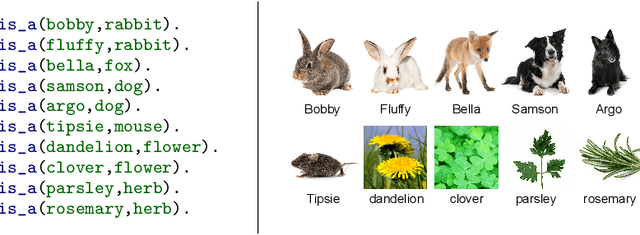

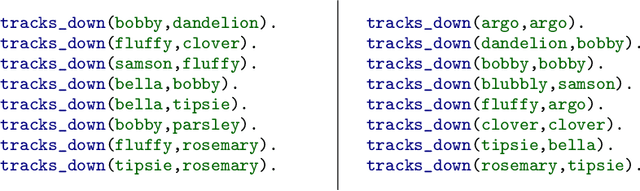

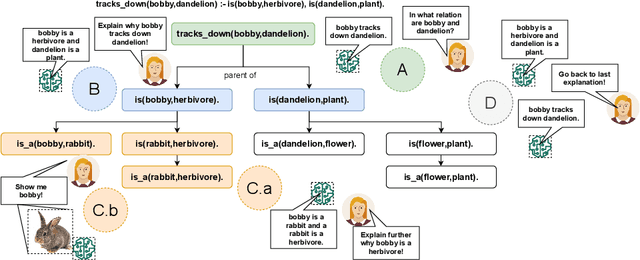

Abstract: In recent years, XAI research has mainly been concerned with developing new technical approaches to explain deep learning models. Only recently has research started to acknowledge the need to tailor explanations to the different contexts and requirements of stakeholders. Explanations must suit not only developers of models, but also domain experts and end users. Thus, in order to satisfy different stakeholders, explanation methods need to be combined. While multi-modal explanations have been used to make model predictions more transparent, less research has focused on treating explanation as a process in which users can ask for information according to the level of understanding gained at a certain point in time. Consequently, an opportunity to explore explanations on different levels of abstraction should be provided alongside multi-modal explanations. We present a process-based approach that combines multi-level and multi-modal explanations. The user can ask for textual explanations or visualizations through conversational interaction in a drill-down manner. We use Inductive Logic Programming, an interpretable machine learning approach, to learn a comprehensible model. Further, we present an algorithm that creates an explanatory tree for each example for which a classifier decision is to be explained. The user can navigate the explanatory tree to get answers at different levels of detail. We provide a proof-of-concept implementation for concepts induced from a semantic net about living beings.
