Stefanos Ganotakis

Bite-Weight Estimation Using Commercial Ear Buds

Aug 02, 2021

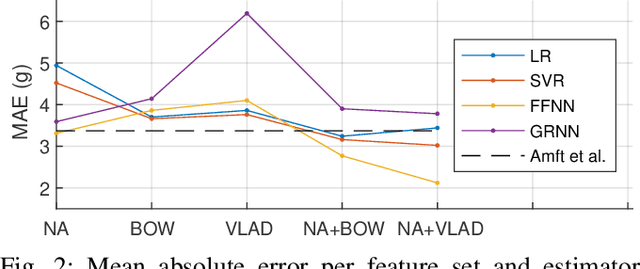

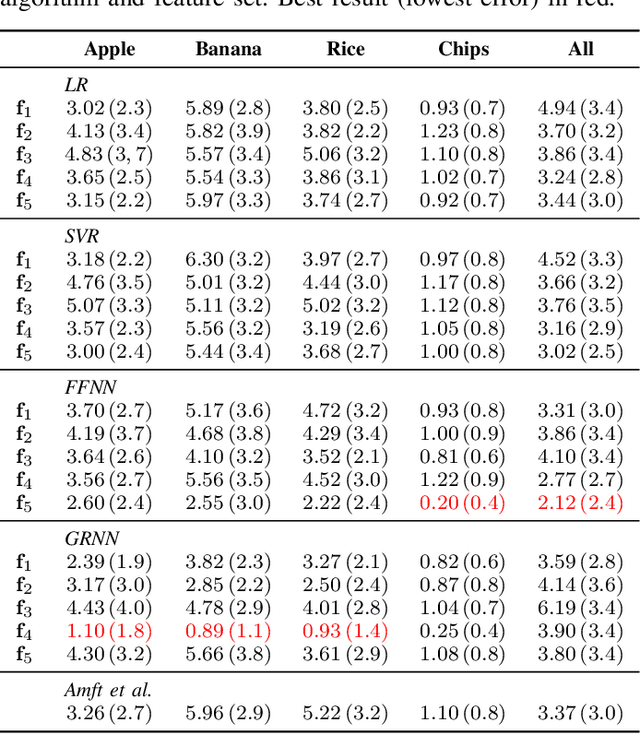

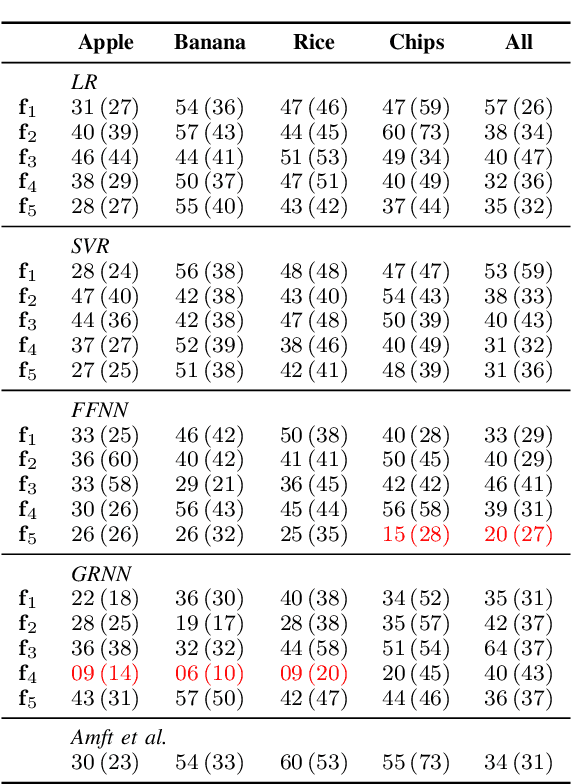

Abstract: While automatic tracking and measurement of physical activity is well established, both in research and in commercial products and everyday life, automatic measurement of eating behavior remains far more limited. Despite the abundance of methods and algorithms in the literature, commercial solutions are mostly limited to digital logging applications for smartphones. One factor that limits the adoption of automatic solutions is that they usually require specialized hardware or sensors. Motivated by this, we evaluate the potential for estimating the weight of consumed food (per bite) using only the audio signal captured by commercial ear buds (Samsung Galaxy Buds). Specifically, we examine combinations of features (both audio and non-audio) and trainable estimators (linear regression, support vector regression, and neural-network-based estimators), and evaluate them on an in-house dataset of 8 participants and 4 food types. Results indicate good potential for this approach: our best results yield a mean absolute error of less than 1 g for 3 out of 4 food types when training food-specific models, and 2.1 g when training on all food types together, both of which improve over an existing approach from the literature.
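To make the reported metric concrete, the following is a minimal sketch of how a per-bite weight estimator can be scored with mean absolute error (MAE), in grams. It is not the authors' pipeline: the single feature, the function names `fit_linear` and `mean_absolute_error`, and all numbers are hypothetical, chosen only to illustrate the simplest estimator family named above (linear regression).

```python
# Illustrative sketch only: a one-feature linear regression scored with MAE.
# The feature values and bite weights below are made up, not from the dataset.

def fit_linear(xs, ys):
    """Ordinary least squares for a single feature; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between true and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical per-bite audio feature (e.g. some energy measure) and weights in grams.
train_feature = [1.0, 2.0, 3.0, 4.0]
train_weight_g = [3.0, 5.0, 7.0, 9.0]
test_feature = [5.0, 6.0]
test_weight_g = [11.5, 12.0]

slope, intercept = fit_linear(train_feature, train_weight_g)
predicted_g = [slope * x + intercept for x in test_feature]
mae = mean_absolute_error(test_weight_g, predicted_g)
print(f"MAE: {mae:.2f} g")
```

An MAE expressed in grams is directly comparable to the figures quoted in the abstract, which is why it is a convenient metric when comparing food-specific models against a single model trained on all food types.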
