Stefano Discetti

Genetically-inspired convective heat transfer enhancement in a turbulent boundary layer

Apr 26, 2023

Abstract: The convective heat transfer in a turbulent boundary layer (TBL) on a flat plate is enhanced using an artificial intelligence approach based on linear genetic algorithm control (LGAC). The actuator is a set of six slot jets in crossflow, aligned with the freestream. An optimal open-loop periodic forcing is sought, parametrised by the carrier frequency, the duty cycle and the phase difference between actuators as control parameters. The control laws are optimised with respect to the unperturbed TBL and to actuation with a steady jet. The cost function includes the wall convective heat transfer rate and the cost of the actuation. The performance of the controller is assessed by infrared thermography and further characterised with particle image velocimetry measurements. The optimal controller yields a slightly asymmetric flow field. The LGAC algorithm converges to the same frequency and duty cycle for all the actuators. Strikingly, this frequency equals the inverse of the characteristic travel time of large-scale turbulent structures advected within the near-wall region. The phase difference between the jet actuators proves to be highly relevant and is the main driver of the flow asymmetry. The results highlight the potential of machine learning control to uncover unexplored controllers within the actuation space. Furthermore, our study demonstrates the viability of combining sophisticated measurement techniques with advanced algorithms in an experimental investigation.
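The forcing parameterisation described in this abstract can be illustrated with a minimal sketch, assuming each jet is driven by an on/off square wave: the carrier frequency sets the period, the duty cycle sets the fraction of each period during which the jet is on, and each actuator's phase is expressed as a fraction of the period. The function names and the numbers in the usage lines are hypothetical and chosen only for illustration; they are not the values found by LGAC, and the control-law representation used in the study may differ.

```python
import numpy as np

def jet_forcing(t, freq, duty_cycle, phase):
    """On/off state (1 = jet on, 0 = off) of one pulsed jet at times t.

    Hypothetical square-wave parameterisation (assumption, for illustration):
    freq       -- carrier frequency in Hz
    duty_cycle -- fraction of each period during which the jet is on, in (0, 1]
    phase      -- phase lag expressed as a fraction of the period, in [0, 1)
    """
    # Position of each time instant within its (phase-shifted) period, in [0, 1)
    cycle_pos = np.mod(freq * np.asarray(t, dtype=float) - phase, 1.0)
    # The jet is on during the first `duty_cycle` fraction of every period
    return (cycle_pos < duty_cycle).astype(int)

def forcing_schedule(t, freq, duty_cycle, phases):
    """Stack the on/off signals of several jets sharing frequency and duty cycle."""
    return np.stack([jet_forcing(t, freq, duty_cycle, p) for p in phases])

# Usage with hypothetical values: 10 Hz carrier, 30 % duty cycle and a
# constant phase step of 0.1 period between consecutive jets (six jets).
t = (np.arange(1000) + 0.5) / 1000.0   # 1 s sampled at 1 kHz, mid-sample instants
schedule = forcing_schedule(t, freq=10.0, duty_cycle=0.3, phases=np.arange(6) * 0.1)
print(schedule.shape)          # (6, 1000)
print(schedule.mean(axis=1))   # fraction of time each jet is on
```

Sharing the frequency and duty cycle across all jets mirrors the reported convergence of LGAC to the same values for every actuator, which leaves the phases as the only per-jet parameters in this sketch.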

Super-resolution GANs of randomly-seeded fields

Feb 23, 2022

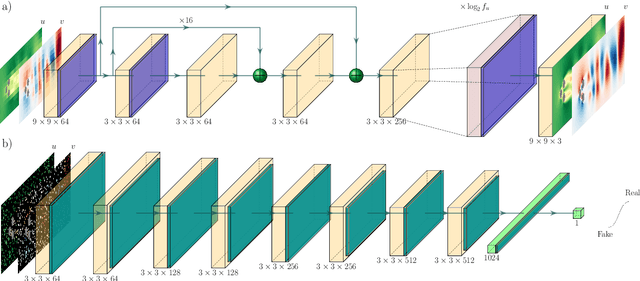

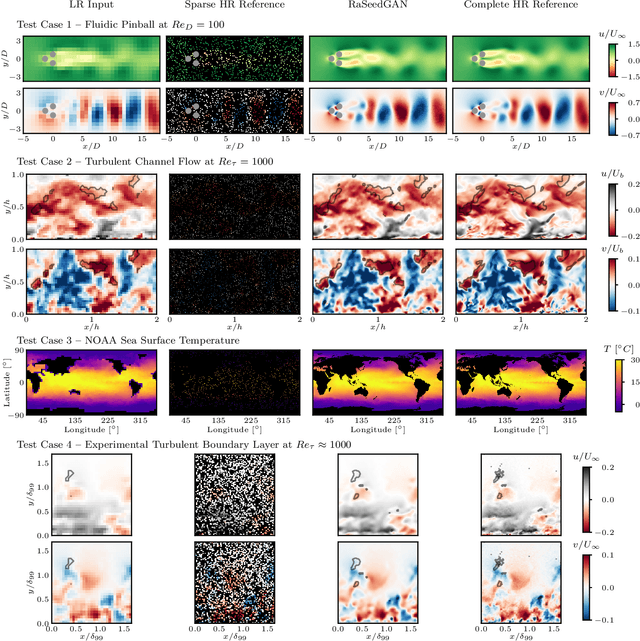

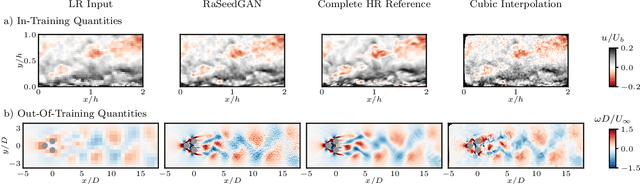

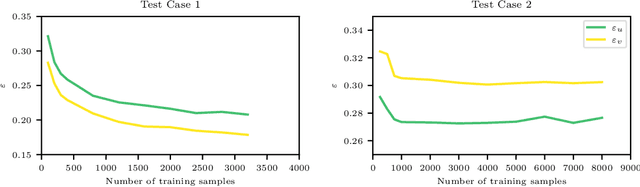

Abstract: Reconstruction of field quantities from sparse measurements is a problem arising in a broad spectrum of applications. The task is particularly challenging when the mapping between sparse point measurements and field quantities must be performed in an unsupervised manner. Further complexity arises with moving sensors and/or sensors with a random on-off status. Under such conditions, the most straightforward solution is to interpolate the scattered data onto a regular grid. However, the spatial resolution achieved with this approach is ultimately limited by the mean spacing between the sparse measurements. In this work, we propose a novel super-resolution generative adversarial network (GAN) framework to estimate field quantities from random sparse sensors without needing any full-resolution field for training. The algorithm exploits random sampling to provide incomplete views of the underlying high-resolution distributions. It is hereafter referred to as RAndomly-SEEDed super-resolution GAN (RaSeedGAN). The proposed technique is tested on synthetic databases of fluid flow simulations, ocean surface temperature distribution measurements, and particle image velocimetry data of a zero-pressure-gradient turbulent boundary layer. The results show excellent performance of the proposed methodology even with a high level of gappiness (>50%) or noise. To our knowledge, this is the first super-resolution GAN algorithm for full-field estimation from randomly-seeded fields that requires neither a full-field high-resolution representation during training nor a library of training examples.
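The grid-interpolation baseline mentioned in this abstract, and the idea of supervising only where samples exist, can be illustrated with a minimal NumPy sketch. It is an assumption for illustration, not RaSeedGAN itself: the hypothetical `bin_scattered` averages randomly located samples into grid bins (empty bins stay NaN, and their fraction is the gappiness), and the hypothetical `masked_mse` is a loss evaluated only on occupied bins, one plausible way a network could be trained from incomplete views without a full-resolution target.

```python
import numpy as np

def bin_scattered(x, y, values, nx, ny, extent):
    """Average scattered samples onto an ny-by-nx grid; empty bins stay NaN.

    Hypothetical helper (assumption): simple bin averaging as the grid baseline.
    extent = (x0, x1, y0, y1) bounds of the measurement domain.
    """
    x, y, values = (np.asarray(a, dtype=float) for a in (x, y, values))
    x0, x1, y0, y1 = extent
    # Bin index of every sample, clipped so points on the upper edge stay inside
    ix = np.clip(np.floor((x - x0) / (x1 - x0) * nx).astype(int), 0, nx - 1)
    iy = np.clip(np.floor((y - y0) / (y1 - y0) * ny).astype(int), 0, ny - 1)
    total = np.zeros((ny, nx))
    count = np.zeros((ny, nx))
    # Unbuffered accumulation, so repeated indices are all summed
    np.add.at(total, (iy, ix), values)
    np.add.at(count, (iy, ix), 1)
    with np.errstate(invalid="ignore", divide="ignore"):
        grid = total / count   # 0/0 -> NaN marks empty bins
    return grid, count > 0

def masked_mse(prediction, target, mask):
    """Mean squared error restricted to grid points that received a sample."""
    diff = (prediction - target)[mask]
    return float(np.mean(diff ** 2))

# Usage with a synthetic field sampled at random positions (illustrative only)
rng = np.random.default_rng(0)
n = 200
xs, ys = rng.random(n), rng.random(n)
u = np.sin(2 * np.pi * xs) * np.cos(2 * np.pi * ys)
grid, mask = bin_scattered(xs, ys, u, 32, 32, (0.0, 1.0, 0.0, 1.0))
print(f"gappiness: {1 - mask.mean():.2f}")   # fraction of empty bins
```

Refining the grid below the mean sample spacing only increases the fraction of empty bins, which is the resolution limit of the interpolation baseline described in the abstract.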
