Rodrigo Castellanos

Multi-fidelity aerodynamic data fusion by autoencoder transfer learning

Dec 15, 2025

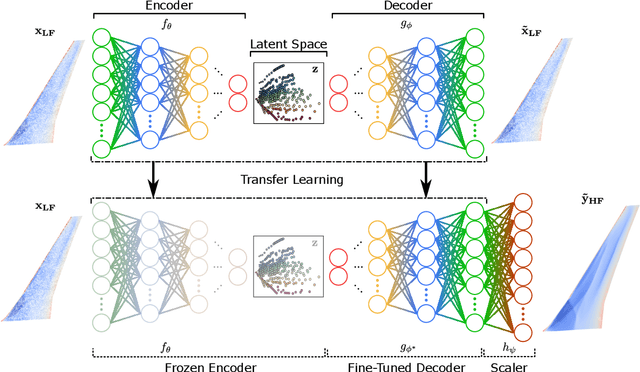

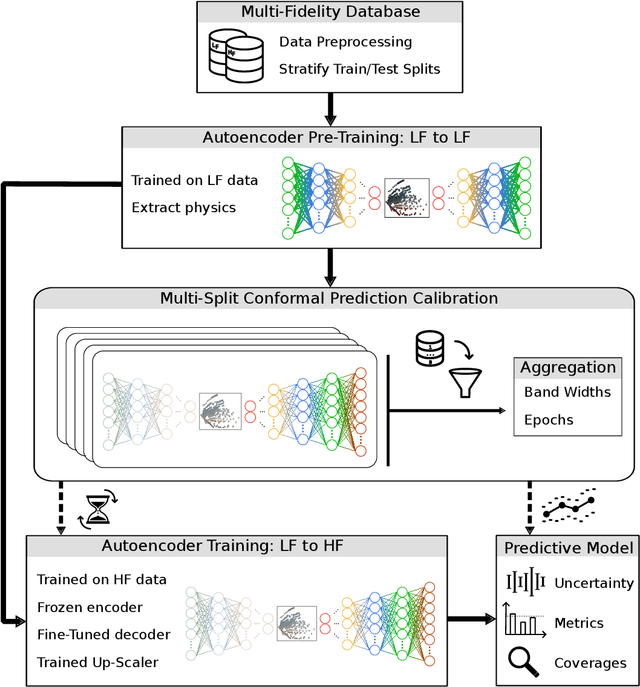

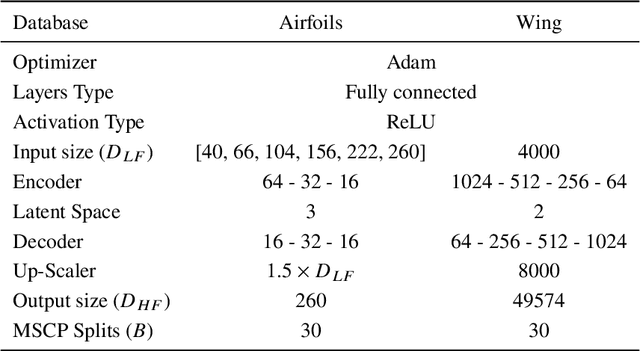

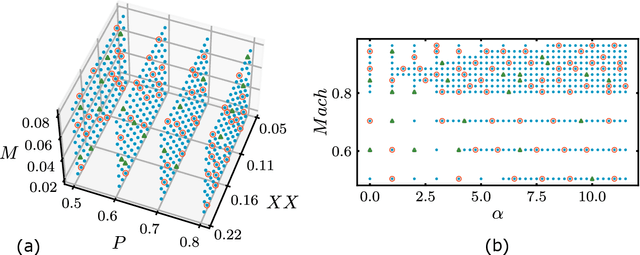

Abstract: Accurate aerodynamic prediction often relies on High-Fidelity (HF) simulations; however, their prohibitive computational costs severely limit their applicability in data-driven modeling. This limitation motivates the development of multi-fidelity strategies that leverage inexpensive low-fidelity information without compromising accuracy. Addressing this challenge, this work presents a multi-fidelity deep learning framework that combines autoencoder-based transfer learning with a newly developed Multi-Split Conformal Prediction (MSCP) strategy to achieve uncertainty-aware aerodynamic data fusion under extreme data scarcity. The methodology leverages abundant Low-Fidelity (LF) data to learn a compact latent physics representation, which acts as a frozen knowledge base for a decoder that is subsequently fine-tuned using scarce HF samples. Tested on surface-pressure distributions from databases of NACA airfoils (2D) and a transonic wing (3D), the model successfully corrects LF deviations and achieves high-accuracy pressure predictions using minimal HF training data. Furthermore, the MSCP framework produces robust, actionable uncertainty bands with pointwise coverage exceeding 95%. By combining extreme data efficiency with uncertainty quantification, this work offers a scalable and reliable solution for aerodynamic regression in data-scarce environments.
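
The abstract does not spell out how MSCP works, so the following is only a rough sketch of the general idea behind split conformal prediction repeated over several random splits, not the authors' method. Everything here is an assumption made for illustration: the function names (`conformal_quantile`, `fit_splits`, `predict_interval`), the 50/50 train/calibration split, the absolute-residual score, the median aggregation of the interval bounds (a heuristic that does not by itself carry the exact single-split coverage guarantee), and the toy `fit_mean` model that stands in for the fine-tuned decoder.

```python
import math
import random
import statistics

def conformal_quantile(scores, alpha):
    """Finite-sample-corrected (1 - alpha) quantile of the calibration scores."""
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))  # rank used by split conformal prediction
    if k > n:
        return float("inf")  # too few calibration points for this alpha
    return sorted(scores)[k - 1]

def fit_splits(xs, ys, fit, alpha=0.05, n_splits=10, seed=0):
    """Repeat split conformal prediction over random train/calibration splits.

    `fit(train_x, train_y)` is a hypothetical callable returning a predictor.
    Returns a list of (predictor, half_width) pairs, one per split.
    """
    rng = random.Random(seed)
    idx = list(range(len(xs)))
    models = []
    for _ in range(n_splits):
        rng.shuffle(idx)
        half = len(idx) // 2
        train, calib = idx[:half], idx[half:]
        predict = fit([xs[i] for i in train], [ys[i] for i in train])
        # Absolute residuals on held-out calibration data are the scores.
        scores = [abs(ys[i] - predict(xs[i])) for i in calib]
        models.append((predict, conformal_quantile(scores, alpha)))
    return models

def predict_interval(models, x):
    """Aggregate per-split intervals by the median of their bounds (assumption)."""
    lows = [predict(x) - q for predict, q in models]
    highs = [predict(x) + q for predict, q in models]
    return statistics.median(lows), statistics.median(highs)

def fit_mean(xs, ys):
    """Toy stand-in for a trained model: always predicts the training mean."""
    mean = sum(ys) / len(ys)
    return lambda x: mean
```

In use, one would call `fit_splits(xs, ys, fit_mean, alpha=0.05)` once and then `predict_interval(models, x_new)` for each query point; in a pressure-distribution setting the same procedure would be applied independently at each surface location to obtain pointwise bands.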

Reducing base drag on road vehicles using pulsed jets optimized by hybrid genetic algorithms

Oct 30, 2025

Abstract: Aerodynamic drag on flat-backed vehicles like vans and trucks is dominated by a low-pressure wake, whose control is critical for reducing fuel consumption. This paper presents an experimental study at $Re_W\approx 78,300$ on active flow control using four pulsed jets at the rear edges of a bluff body model. A hybrid genetic algorithm, combining a global search with a local gradient-based optimizer, was used to determine the optimal jet actuation parameters in an experiment-in-the-loop setup. The cost function was designed to achieve a net energy saving by simultaneously minimizing aerodynamic drag and penalizing the actuation's energy consumption. The optimization campaign successfully identified a control strategy that yields a drag reduction of approximately 10%. The optimal control law features a strong, low-frequency actuation from the bottom jet, which targets the main vortex shedding, while the top and lateral jets address higher-frequency, less energetic phenomena. Particle Image Velocimetry analysis reveals a significant upward shift and stabilization of the wake, leading to substantial pressure recovery on the model's lower base. Ultimately, this work demonstrates that a model-free optimization approach can successfully identify non-intuitive, multi-faceted actuation strategies that yield significant and energetically efficient drag reduction.

Genetic Optimization of a Software-Defined GNSS Receiver

Oct 25, 2025

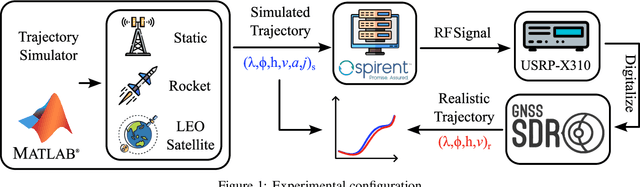

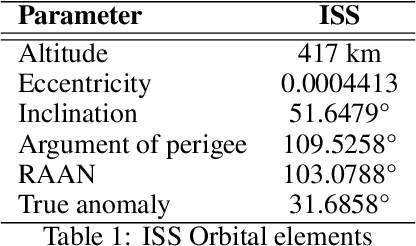

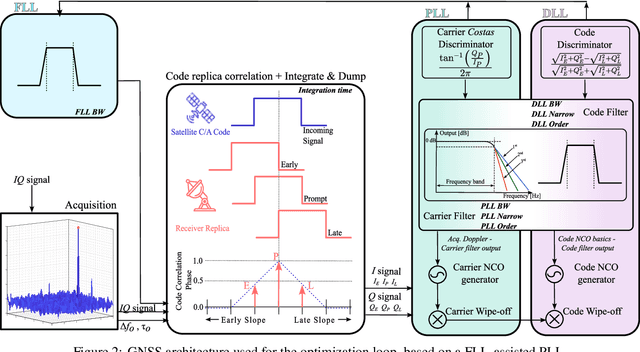

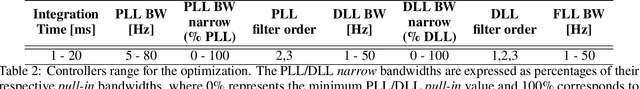

Abstract: Commercial off-the-shelf (COTS) Global Navigation Satellite System (GNSS) receivers face significant limitations under high-dynamic conditions, particularly in high-acceleration environments such as those experienced by launch vehicles. These performance degradations, often observed as discontinuities in the navigation solution, arise from the inability of traditional tracking loop bandwidths to cope with rapid variations in synchronization parameters. Software-Defined Radio (SDR) receivers overcome these constraints by enabling flexible reconfiguration of tracking loops; however, manual tuning involves a complex, multidimensional search and seldom ensures optimal performance. This work introduces a genetic algorithm-based optimization framework that autonomously explores the receiver configuration space to determine optimal loop parameters for phase, frequency, and delay tracking. The approach is validated within an SDR environment using realistically simulated GPS L1 signals for three representative dynamic regimes: guided rocket flight, a Low Earth Orbit (LEO) satellite, and a static receiver. The signals are processed with the open-source GNSS-SDR architecture. Results demonstrate that evolutionary optimization enables SDR receivers to maintain robust and accurate Position, Velocity, and Time (PVT) solutions across diverse dynamic conditions. The optimized configurations yielded maximum position and velocity errors of approximately 6 m and 0.08 m/s for the static case, 12 m and 2.5 m/s for the rocket case, and 5 m and 0.2 m/s for the LEO case.

Fast and robust parametric and functional learning with Hybrid Genetic Optimisation (HyGO)

Oct 10, 2025

Abstract: The Hybrid Genetic Optimisation framework (HyGO) is introduced to meet the pressing need for efficient and unified optimisation frameworks that support both parametric and functional learning in complex engineering problems. Evolutionary algorithms are widely employed as derivative-free global optimisation methods but often suffer from slow convergence rates, especially during late-stage learning. HyGO integrates the global exploration capabilities of evolutionary algorithms with accelerated local search for robust solution refinement. The key enabler is a two-stage strategy that balances exploration and exploitation. For parametric problems, HyGO alternates between a genetic algorithm and targeted improvement through a degradation-proof Downhill Simplex Method (DSM). For function optimisation tasks, HyGO rotates between genetic programming and DSM. Validation is performed on (a) parametric optimisation benchmarks, where HyGO demonstrates faster and more robust convergence than standard genetic algorithms, and (b) function optimisation tasks, including control of a damped Landau oscillator. Practical relevance is showcased through aerodynamic drag reduction of an Ahmed body via Reynolds-Averaged Navier-Stokes simulations, achieving consistently interpretable results and reductions exceeding 20% by controlled jet injection at the back of the body for flow reattachment and separation bubble reduction. Overall, HyGO emerges as a versatile hybrid optimisation framework suitable for a broad spectrum of engineering and scientific problems involving parametric and functional learning.
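
To make the two-stage idea concrete, here is a minimal sketch of a parametric optimiser that alternates a genetic step with downhill simplex refinement of the best individuals. It is not the HyGO implementation: the function names (`nelder_mead`, `hybrid_optimise`), the population size, the uniform crossover, the Gaussian mutation scale, and the number of refined individuals are all assumptions chosen for brevity, and the bounds are used only to initialise the population.

```python
import random

def nelder_mead(f, x0, step=0.5, iters=50):
    """Basic downhill simplex: reflection, expansion, contraction, shrink."""
    n = len(x0)
    simplex = [list(x0)] + [
        [x0[j] + (step if j == i else 0.0) for j in range(n)] for i in range(n)
    ]
    for _ in range(iters):
        simplex.sort(key=f)
        best, worst = simplex[0], simplex[-1]
        centroid = [sum(p[j] for p in simplex[:-1]) / n for j in range(n)]

        def along(t):
            # Point on the line through the centroid, away from the worst vertex.
            return [c + t * (c - w) for c, w in zip(centroid, worst)]

        reflected = along(1.0)
        if f(reflected) < f(best):
            expanded = along(2.0)
            simplex[-1] = expanded if f(expanded) < f(reflected) else reflected
        elif f(reflected) < f(simplex[-2]):
            simplex[-1] = reflected
        else:
            contracted = along(-0.5)
            if f(contracted) < f(worst):
                simplex[-1] = contracted
            else:
                # Shrink every vertex halfway towards the best one.
                simplex = [best] + [
                    [b + 0.5 * (v - b) for b, v in zip(best, p)]
                    for p in simplex[1:]
                ]
    return min(simplex, key=f)

def hybrid_optimise(f, bounds, pop_size=20, generations=15, n_refine=2, seed=0):
    """Alternate local simplex refinement with a simple elitist genetic step."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=f)
        # Exploitation: refine the best few individuals with the simplex method.
        for i in range(n_refine):
            pop[i] = nelder_mead(f, pop[i], iters=10)
        # Exploration: keep the better half, refill by crossover and mutation.
        elites = pop[: pop_size // 2]
        children = []
        while len(elites) + len(children) < pop_size:
            a, b = rng.sample(elites, 2)
            children.append(
                [rng.choice(pair) + rng.gauss(0.0, 0.1) for pair in zip(a, b)]
            )
        pop = elites + children
    return min(pop, key=f)
```

For example, `hybrid_optimise(lambda x: sum((v - 1.0) ** 2 for v in x), [(-5.0, 5.0)] * 2)` searches for the minimum of a shifted sphere function. Because the simplex step never discards its best vertex and the genetic step keeps the elites, the best cost in this sketch cannot get worse from one generation to the next.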

Towards aerodynamic surrogate modeling based on $\beta$-variational autoencoders

Aug 09, 2024

Abstract: Surrogate models combining dimensionality reduction and regression techniques are essential to reduce the need for costly high-fidelity CFD data. New approaches using $\beta$-Variational Autoencoder ($\beta$-VAE) architectures have shown promise in obtaining high-quality low-dimensional representations of high-dimensional flow data while enabling physical interpretation of their latent spaces. We propose a surrogate model based on latent space regression to predict pressure distributions on a transonic wing given the flight conditions: Mach number and angle of attack. The $\beta$-VAE model, enhanced with Principal Component Analysis (PCA), maps high-dimensional data to a low-dimensional latent space, showing a direct correlation with flight conditions. Regularization through $\beta$ requires careful tuning to improve the overall performance, while PCA pre-processing aids in constructing an effective latent space, improving autoencoder training and performance. Gaussian Process Regression is used to predict latent space variables from flight conditions, showing robust behavior independent of $\beta$, and the decoder reconstructs the high-dimensional pressure field data. This pipeline provides insight into unexplored flight conditions. Additionally, a fine-tuning process of the decoder further refines the model, reducing dependency on $\beta$ and enhancing accuracy. The structured latent space, robust regression performance, and significant improvements from fine-tuning collectively create a highly accurate and efficient surrogate model. Our methodology demonstrates the effectiveness of $\beta$-VAEs for aerodynamic surrogate modeling, offering a rapid, cost-effective, and reliable alternative for aerodynamic data prediction.
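
For readers unfamiliar with the role of $\beta$, the standard $\beta$-VAE training objective is reproduced here as background. The abstract does not state the exact loss used, so the squared-error reconstruction term and the symbols ($x$ for the input field, $\hat{x}_\theta$ for the decoder, $q_\phi$ for the encoder, $\mu_j$ and $\sigma_j$ for the latent mean and standard deviation, $d$ for the latent dimension) are assumptions for illustration.

$$
\mathcal{L}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\left[ \lVert x - \hat{x}_\theta(z) \rVert^2 \right] + \beta \, D_{\mathrm{KL}}\left( q_\phi(z \mid x) \,\Vert\, \mathcal{N}(0, I) \right)
$$

With a diagonal Gaussian encoder, the Kullback-Leibler term has the closed form

$$
D_{\mathrm{KL}} = \frac{1}{2} \sum_{j=1}^{d} \left( \mu_j^2 + \sigma_j^2 - \log \sigma_j^2 - 1 \right).
$$

Setting $\beta = 1$ recovers the ordinary VAE, while larger values weight the regularization of the latent space more heavily against reconstruction accuracy, which is the trade-off the abstract refers to when it says $\beta$ requires careful tuning.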

Genetically-inspired convective heat transfer enhancement in a turbulent boundary layer

Apr 26, 2023

Abstract: The convective heat transfer in a turbulent boundary layer (TBL) on a flat plate is enhanced using an artificial intelligence approach based on linear genetic algorithms control (LGAC). The actuator is a set of six slot jets in crossflow aligned with the freestream. An open-loop optimal periodic forcing is defined by the carrier frequency, the duty cycle and the phase difference between actuators as control parameters. The control laws are optimised with respect to the unperturbed TBL and to the actuation with a steady jet. The cost function includes the wall convective heat transfer rate and the cost of the actuation. The performance of the controller is assessed by infrared thermography and also characterised with particle image velocimetry measurements. The optimal controller yields a slightly asymmetric flow field. The LGAC algorithm converges to the same frequency and duty cycle for all the actuators. Strikingly, this frequency equals the inverse of the characteristic travel time of large-scale turbulent structures advected within the near-wall region. The phase difference between the jets is shown to be highly relevant and to be the main driver of the flow asymmetry. The results pinpoint the potential of machine learning control in unravelling unexplored controllers within the actuation space. Our study furthermore demonstrates the viability of employing sophisticated measurement techniques together with advanced algorithms in an experimental investigation.
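
As an illustration of how a periodic forcing can be parametrised by carrier frequency, duty cycle, and phase, the sketch below generates an on/off valve command for each jet. It is a hypothetical parametrisation, not the one used in the experiment: the function names, the square-wave shape, the sign convention for the phase (a positive phase delays the pulse), and the numerical values in the usage note are all assumptions.

```python
import math

def pulsed_jet(t, freq_hz, duty_cycle, phase_rad=0.0):
    """Return 1 if the jet is blowing at time t (seconds), else 0.

    The jet is on during the first `duty_cycle` fraction of each period;
    a positive `phase_rad` delays the pulse (assumed sign convention).
    """
    cycle_pos = (t * freq_hz - phase_rad / (2.0 * math.pi)) % 1.0
    return 1 if cycle_pos < duty_cycle else 0

def actuation_state(t, freq_hz, duty_cycle, phases_rad):
    """On/off state of every jet at time t, sharing frequency and duty cycle."""
    return [pulsed_jet(t, freq_hz, duty_cycle, phase) for phase in phases_rad]
```

For instance, `actuation_state(0.01, 10.0, 0.5, [0.0, math.pi])` describes two jets pulsing in antiphase at 10 Hz with a 50% duty cycle. Passing six phase values would cover six jets that share one frequency and duty cycle, which mirrors the abstract's observation that the optimisation converged to a common frequency and duty cycle with the phase differences as the distinguishing parameter.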
