Stefanie Wucherer

Semantic 3D scene segmentation for robotic assembly process execution

Mar 20, 2023

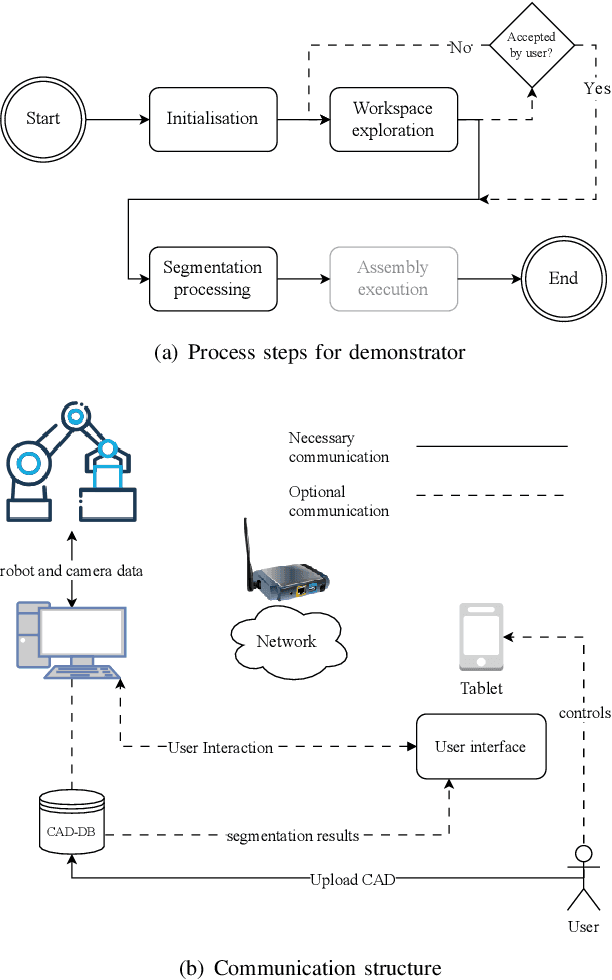

Abstract: Adapting robot programmes to changes in the environment is a well-known industry problem, and it is the reason why many tedious tasks are not automated in small and medium-sized enterprises (SMEs). A semantic world model of a robot's previously unknown environment created from point clouds is one way for these companies to automate assembly tasks that are typically performed by humans. The semantic segmentation of point clouds for robot manipulators or cobots in industrial environments has received little attention due to a lack of suitable datasets. This paper describes a pipeline for creating synthetic point clouds for specific use cases in order to train a model for point cloud semantic segmentation. We show that models trained with our data achieve high per-class accuracy (> 90%) for semantic point cloud segmentation on unseen real-world data. Our approach is applicable not only to the 3D camera used in training data generation but also to other depth cameras based on different technologies. The application tested in this work is an industry-related peg-in-the-hole process. With our approach, the need for user assistance during a robot's commissioning can be reduced to a minimum.

Learning to Predict Grip Quality from Simulation: Establishing a Digital Twin to Generate Simulated Data for a Grip Stability Metric

Feb 06, 2023

Abstract: A robust grip is key to successful manipulation and joining of work pieces involved in any industrial assembly process. Stability of a grip depends on geometric and physical properties of the object as well as the gripper itself. Current state-of-the-art algorithms can usually predict whether a grip would fail. However, they are not able to predict the force at which the gripped object starts to slip, which is critical as the object might be subjected to external forces, e.g. when joining it with another object. This research project aims to develop an AI-based approach for a grip metric based on tactile sensor data capturing the physical interactions between gripper and object. Thus, the maximum force that can be applied to the object before it begins to slip should be predicted before manipulating the object. The RGB image of the contact surface between the object and gripper jaws obtained from GelSight tactile sensors during the initial phase of the grip should serve as a training input for the grip metric. To generate such a data set, a pull experiment is designed using a UR 5 robot. Performing these experiments in real life to populate the data set is time-consuming since different object classes, geometries, material properties and surface textures need to be considered to enhance the robustness of the prediction algorithm. Hence, a simulation model of the experimental setup has been developed to both speed up and automate the data generation process. In this paper, the design of this digital twin and the accuracy of the synthetic data are presented. State-of-the-art image comparison algorithms show that the simulated RGB images of the contact surface match the experimental data. In addition, the maximum pull forces can be reproduced for different object classes and grip scenarios. As a result, the synthetically generated data can be further used to train the neural grip metric network.
