Spencer Sheen

A Channel-Pruned and Weight-Binarized Convolutional Neural Network for Keyword Spotting

Sep 12, 2019

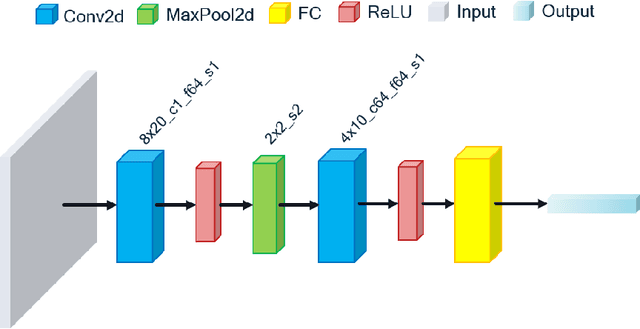

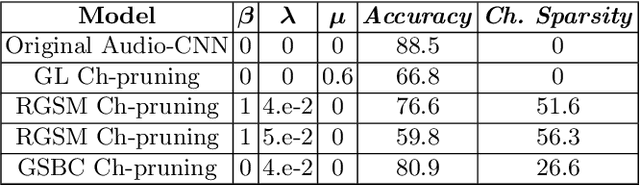

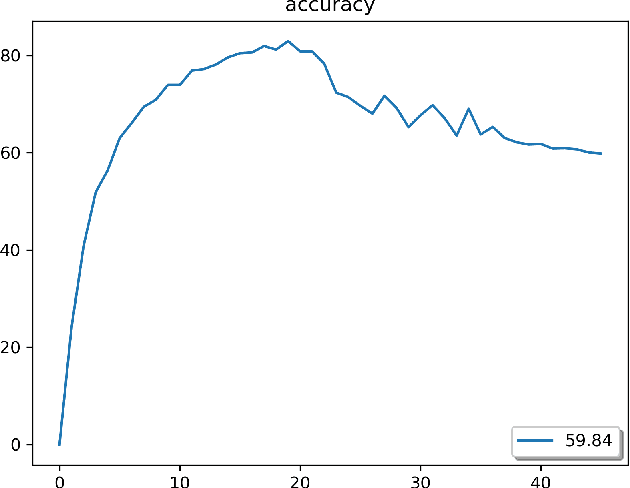

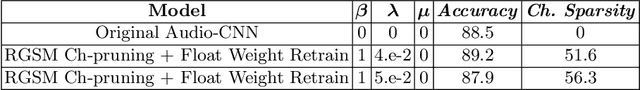

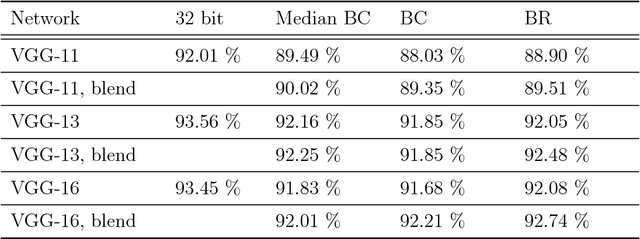

Abstract: We study channel number reduction combined with weight binarization (1-bit weight precision) to trim a convolutional neural network for a keyword spotting (classification) task. We adopt a group-wise splitting method based on the group Lasso penalty to achieve over 50% channel sparsity while keeping the accuracy loss within 0.25%. We show an effective three-stage procedure to balance accuracy and sparsity in network training.
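
The group Lasso penalty sums the $\ell_2$ norms of groups of weights, so minimizing it drives whole groups, here whole channels, to exactly zero. The following pure-Python sketch assumes one group per output channel, with each channel's weights flattened into a list. It uses the standard proximal (group soft-thresholding) step for the group Lasso, which is not necessarily the paper's group-wise splitting scheme. All function names are hypothetical.

```python
import math

# Hypothetical helpers: each "channel" is the flattened list of weights of one
# output channel, and each channel is one group in the group Lasso penalty.

def l2_norm(ch):
    return math.sqrt(sum(w * w for w in ch))

def group_lasso_penalty(channels, lam):
    """lam * sum over channels of that channel's l2 norm."""
    return lam * sum(l2_norm(ch) for ch in channels)

def group_soft_threshold(channels, thresh):
    """Proximal map of thresh * sum_g ||w_g||_2.

    Each channel's norm is shrunk by `thresh`; a channel whose norm is at most
    `thresh` becomes exactly zero and could be pruned from the layer.
    """
    out = []
    for ch in channels:
        norm = l2_norm(ch)
        scale = max(0.0, 1.0 - thresh / norm) if norm > 0 else 0.0
        out.append([scale * w for w in ch])
    return out

def channel_sparsity(channels):
    """Fraction of channels whose weights are all zero."""
    return sum(all(w == 0.0 for w in ch) for ch in channels) / len(channels)

if __name__ == "__main__":
    layer = [[3.0, 4.0], [0.3, 0.4], [0.0, 1.0], [0.1, 0.0]]
    print(group_lasso_penalty(layer, lam=1.0))  # about 6.6 (5 + 0.5 + 1 + 0.1)
    pruned = group_soft_threshold(layer, thresh=0.6)
    print(channel_sparsity(pruned))             # 0.5
```

The two channels with norm below the threshold (0.5 and 0.1) are zeroed. The surviving channels are shrunk but keep their direction. This trade-off between sparsity and accuracy is the one the threshold (or penalty weight) controls during training.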

Median Binary-Connect Method and a Binary Convolutional Neural Network for Word Recognition

Nov 07, 2018

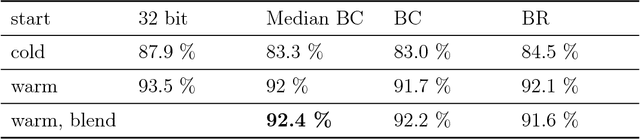

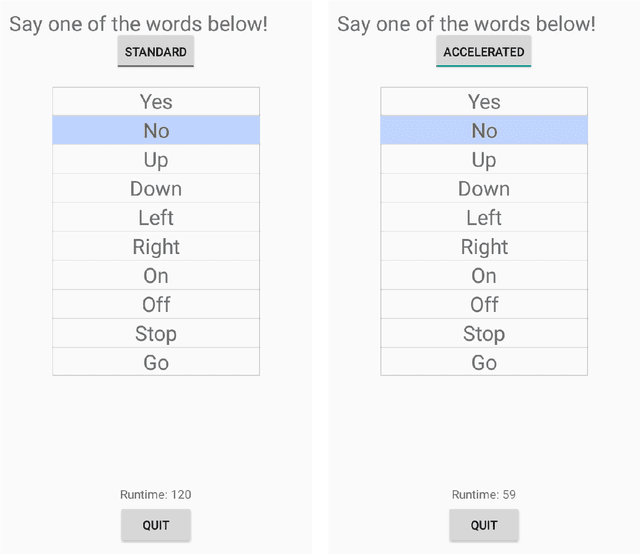

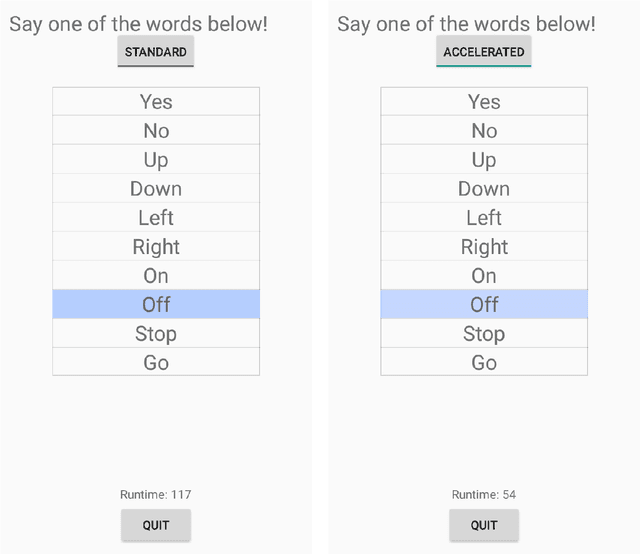

Abstract: We propose and study a new projection formula for training binary-weight convolutional neural networks. The projection formula measures the error in approximating a full-precision (32-bit) vector by a 1-bit vector in the $\ell_1$ norm instead of the standard $\ell_2$ norm. The $\ell_1$ projector has a closed analytical form and involves a median computation instead of the arithmetic average used in the $\ell_2$ projector. Experiments on 10-keyword classification show that the $\ell_1$ (median) BinaryConnect (BC) method outperforms regular BC, with either a cold or a warm start. The binary network trained by median BC and a recent blending technique reaches a test accuracy of 92.4%, which is 1.1 percentage points lower than the 93.5% accuracy of the full-precision network. In an Android phone app, the trained binary network recognizes spoken keywords twice as fast as the full-precision network.
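
The median appears through a short argument. For a fixed scale $a \ge 0$, the best sign vector in either norm is $s_i = \operatorname{sign}(w_i)$. That leaves $\sum_i \lvert\,\lvert w_i\rvert - a\rvert$ in the $\ell_1$ case, which is minimized at the median of $\lvert w_i\rvert$, and $\sum_i (\lvert w_i\rvert - a)^2$ in the $\ell_2$ case, which is minimized at the mean. The following pure-Python sketch implements both projectors for one weight vector with a single shared scale, plus a generic BinaryConnect step that evaluates the gradient at the binarized weights and applies it to the full-precision copy. The function names, the single scale per vector, and mapping zero weights to $+1$ are illustrative assumptions, not details taken from the paper.

```python
import statistics

# Projections onto scaled binary vectors a * s, with s in {-1, +1}^n, a >= 0.
# Names are illustrative, not from the paper.

def sign(x):
    # Map 0 to +1 so every entry lands on {-a, +a}.
    return 1.0 if x >= 0 else -1.0

def project_l2(w):
    """argmin ||w - a*s||_2: s = sign(w), a = mean of |w_i| (regular BC)."""
    a = sum(abs(x) for x in w) / len(w)
    return [a * sign(x) for x in w]

def project_l1(w):
    """argmin ||w - a*s||_1: s = sign(w), a = median of |w_i| (median BC)."""
    a = statistics.median([abs(x) for x in w])
    return [a * sign(x) for x in w]

def l1_error(w, b):
    return sum(abs(x - y) for x, y in zip(w, b))

def binary_connect_step(w_float, grad_fn, lr, project):
    """One BinaryConnect update: the gradient is evaluated at the binarized
    weights, but the step is applied to the full-precision copy."""
    g = grad_fn(project(w_float))
    return [x - lr * gx for x, gx in zip(w_float, g)]

if __name__ == "__main__":
    w = [0.9, -0.1, 0.2, -3.0, 0.05]
    print(project_l1(w))  # [0.2, -0.2, 0.2, -0.2, 0.2]
    print(project_l2(w))  # every entry is about +/-0.85, pulled up by -3.0
```

In this example the single large weight $-3.0$ raises the mean scale to about 0.85, while the median scale stays at 0.2. This gives one intuition for why the $\ell_1$ projector is less sensitive to a few large-magnitude weights.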
